Meta Drops 405B Llama Bomb

Meta just dropped a 405B parameter monster, and the open-source world is shaking.

AgentBrief Combined for Dec 08, 2025

What a week for builders! Meta just dropped a seismic release: Llama 3.1, crowned by a monstrous 405B parameter model, the largest open-weight model to date. The community is buzzing, not just about its power, but about the very definition of 'open source,' as Meta's new license introduces restrictions for major tech players. This release isn't happening in a vacuum. It's part of a massive wave of innovation, with Meta also unveiling its native multimodal model, Chameleon, Cohere pushing multilingual boundaries with Aya 23, and Perplexity letting users create custom AI Personas. For developers, this translates to an unprecedented arsenal of specialized, powerful tools. The barrier to building sophisticated, multi-modal, and multi-lingual agents just got obliterated. It's time to build.

X-Ray Vision

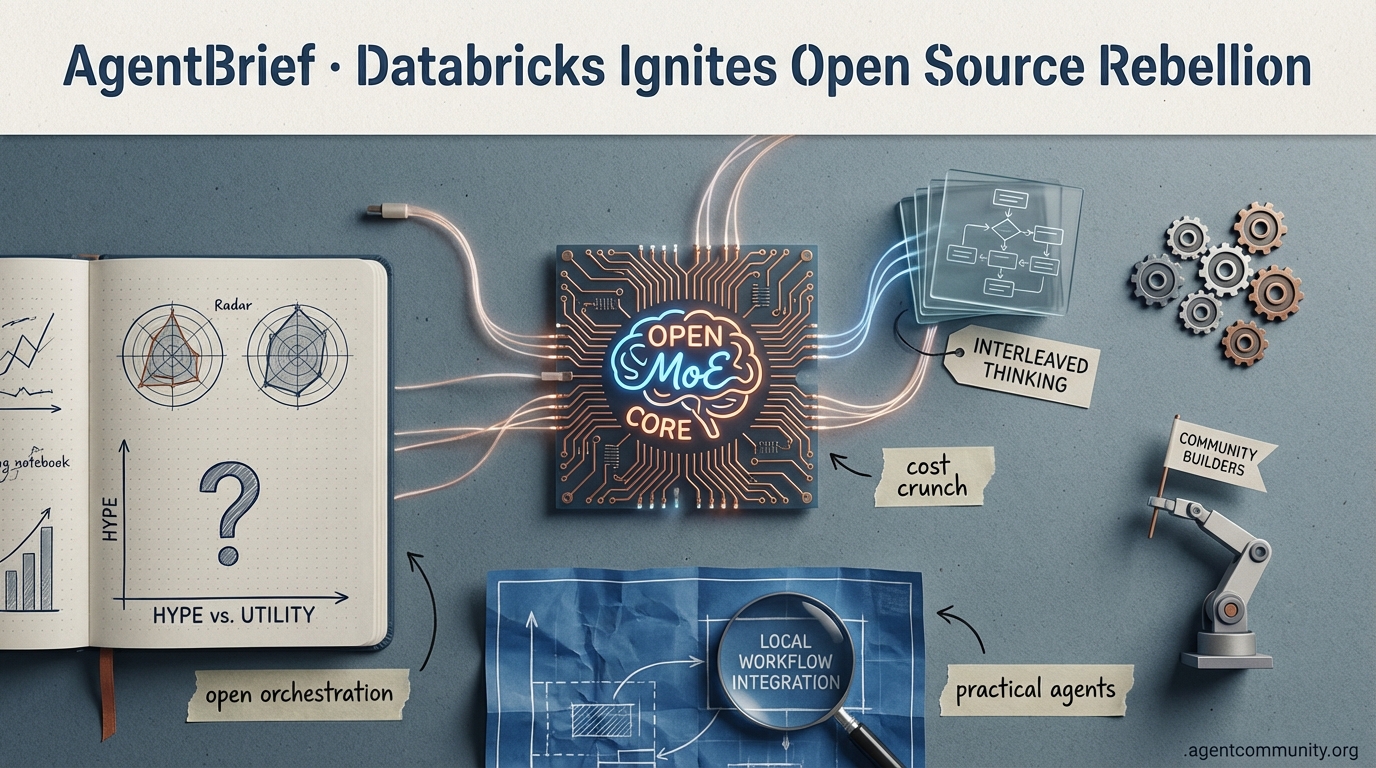

This week, a new open-source model redefines agentic reasoning while LangGraph doubles down on production-ready frameworks!

What a week for agent builders! The headline grabber is MiniMax-M2, a new open-source model that’s crushing benchmarks with a novel 'Interleaved Thinking' technique, making agents smarter and more reliable. This focus on robust reasoning is perfectly complemented by the latest from the LangChain ecosystem. LangGraph is pushing hard on controllability and state management, rolling out new courses and patterns like Programmatic Tool Calling to help you build agents that are not just powerful, but also observable and production-grade. It’s clear the stack is maturing fast—we’re moving from simple loops to sophisticated, state-aware systems. Let’s dive in!

MiniMax-M2's 'Interleaved Thinking' Crushes Agent Benchmarks

A new open-source model from a Chinese lab, MiniMax-M2, is turning heads by specializing in agentic reasoning and coding. Instead of chasing general benchmarks, it uses a novel 'Interleaved Thinking' method to excel at complex, multi-step tasks. This technique allows the model to dynamically update its plan after each action, creating a persistent state that dramatically improves performance in workflows requiring error correction and adaptation.
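To make the plan-act-revise idea concrete, here is a minimal sketch of a loop that revisits its plan after every action. It is an illustration of the general pattern only, not MiniMax's implementation: `AgentState`, `run_interleaved`, and the toy `toy_act` and `toy_revise` functions are all hypothetical stand-ins for a real tool call and a real model re-planning step.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class AgentState:
    """Persistent state carried across steps (hypothetical structure)."""
    plan: list[str]
    history: list[tuple[str, str]] = field(default_factory=list)


def run_interleaved(
    state: AgentState,
    act: Callable[[str], str],
    revise: Callable[[AgentState, str, str], list[str]],
    max_steps: int = 10,
) -> AgentState:
    """Run one step at a time, revising the remaining plan after each result."""
    for _ in range(max_steps):
        if not state.plan:
            break
        step = state.plan.pop(0)
        observation = act(step)
        state.history.append((step, observation))
        # Re-planning sees the whole history, not only the latest step.
        state.plan = revise(state, step, observation)
    return state


# Toy environment: tests fail until the fix step has been executed.
environment = {"fixed": False}


def toy_act(step: str) -> str:
    if step == "fix failing test":
        environment["fixed"] = True
        return "ok"
    if step == "run tests" and not environment["fixed"]:
        return "error: tests failed"
    return "ok"


def toy_revise(state: AgentState, step: str, observation: str) -> list[str]:
    if observation.startswith("error"):
        # Insert a corrective step, then retry the step that failed.
        return ["fix failing test", step] + state.plan
    return state.plan


final = run_interleaved(
    AgentState(plan=["edit code", "run tests", "commit"]), toy_act, toy_revise
)
print([step for step, _ in final.history])
```

The point of the sketch is that error correction falls out of the loop structure: the failed step is not lost, and the corrective step is scheduled ahead of the retry.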

LangGraph Powers More Controllable, Production-Ready Agents

The LangChain ecosystem is zeroing in on building stateful, controllable, and resilient agents with LangGraph. Through new courses with DeepLearningAI and the promotion of advanced patterns, developers are getting the tools to move beyond simple ReAct loops. The focus is on creating observable, maintainable workflows that can handle long-running tasks and complex tool interactions, paving the way for real-world, production-grade agentic systems.

Agents Ditch Text for Faster Hidden Vector Communication

- New research proposes multi-agent communication via internal hidden vectors instead of text, enabling direct transfer of nuanced information and bypassing NLP overhead. @rohanpaul_ai

- This method can achieve up to 4x faster inference and a 70-80% reduction in output tokens, promising huge efficiency gains for high-throughput agentic systems. @rohanpaul_ai

- Community reaction is mixed, balancing excitement for reduced latency with concerns about interpretability, compatibility across different agent architectures, and safety. @AI_Researcher

DeepSeekMath-V2 Targets Flawed Reasoning in Agents

- A new paper highlights a critical agentic challenge: models can get the right answer through flawed logic, like brute-forcing or guessing, which is risky for high-stakes applications. @omarsar0

- DeepSeekMath-V2 prioritizes the integrity of step-by-step reasoning over just final-answer accuracy, aiming to build more trustworthy and reliable agents. @omarsar0

- The model shows superior accuracy in math grading and aligns closely with human evaluators, though some argue real-world agentic issues like knowledge gaps remain a challenge. @zjasper

OpenCode Agent Advances With Modular, Composable Primitives

- The open-source coding agent OpenCode is building a highly customizable framework based on modular, overridable primitives, giving developers granular control. @thdxr

- A new 'Explore subagent' for file system operations was seamlessly integrated via a config file, showcasing the framework's composable design philosophy. @thdxr

- Its client/server model enables remote shells and sandboxing, though some community members note that immature parts of the system still need refinement to be fully user-friendly. @thdxr

The Economic Case for AI Agents Solidifies with Market Demand

- AI agents are turning historically scarce or expensive tasks into abundant, affordable resources, unlocking value in areas like continuous code review and real-time contract analysis. @levie

- A McKinsey report finds demand for 'AI fluency' has surged 7x in two years, with current tech able to automate over half of US work hours, potentially unlocking $2.9 trillion by 2030. @rohanpaul_ai

- The consensus is that agents will augment, not replace, human roles, as domain expertise remains critical to harnessing AI for complex business problems. @levie

Quick Hits

Models for Agents

- Gemini 3 Pro showed strategic planning in SnakeBench by intentionally trapping its Opus 4.5 opponent to secure a win. — @GregKamradt

- Research shows fixed prompts in LLM benchmarks can systematically underestimate model performance, a critical insight for agent evaluation. — @dair_ai

- A new paper shows even top LLMs struggle with planning, solving only 68% of simple 8-puzzle games, highlighting a key reliability challenge. — @rohanpaul_ai

- Opus 4.5 is praised as 'hands-down the best' model for coding workflows due to its superior intent understanding. — @omarsar0

Tool Use & Function Calling

- LlamaIndex now has a mode in LlamaExtract to pull massive structured tables from documents with 100% accuracy. — @jerryjliu0

- An open-source, LLM-powered web browsing agent has been released for developers to experiment with. — @tom_doerr

- A new open-source library provides a collection of specialized sub-agents for use with Claude Code. — @tom_doerr

- A new browser automation node is available for n8n, enabling more complex agentic web interaction workflows. — @tom_doerr

Memory & Context

- A new library, Agi-Memory, combines vector, graph, and relational DBs to emulate episodic, semantic, and procedural memory for agents. — @QuixiAI

- Builder @raizamrtn hopes 2026 will be the year context windows and context rot are solved, unlocking better long-running agents. — @raizamrtn

- Researcher @omarsar0 predicts context engineering skills will become even more crucial for building effective agent harnesses in scientific domains. — @omarsar0

Agentic Infrastructure

- The hardware war is brewing: Anthropic's $52B deal to buy Google's TPUv7 chips directly bypasses Nvidia. — @IntuitMachine

- Developers report that Nvidia B200 GPUs experience 'astronomical' failure rates, impacting training stability for large clusters. — @Teknium

- Supabase now supports the `halfvec` type for creating indexes on vectors with more than 2,000 dimensions. — @supabase

Developer Experience

- New proxy tool VibeLogger lets devs log interactions with Codex, Claude Code, and Gemini CLI to a local Arize Phoenix instance. — @QuixiAI

- With the rise of AI-generated code, writing automated tests is more critical than ever to prevent 'monstrosity' from vibe-coded software. — @svpino

- The team behind the AI-native editor Cursor is holding a developer meetup in London on Dec 10. — @ericzakariasson

Industry & Ecosystem

- A US House panel summoned Anthropic's CEO following a report of a state-backed group using Claude for cyber espionage. — @rohanpaul_ai

- The White House has launched the 'Genesis Mission,' a 'Manhattan-Project' style initiative to accelerate AI-driven scientific discovery. — @CoinDesk

- Investor @bindureddy predicts that 2026 will be the year of open-source AI, driven by releases from US companies and a push from China. — @bindureddy

Reddit Debates

NVIDIA just dropped a tiny agent model it claims beats GPT-5, while the community grapples with soaring hardware costs and the messy reality of building agents that actually work.

What a week for builders! NVIDIA fired a massive shot across the bow, releasing Orchestrator-8B—an 8-billion-parameter agent model it claims outperforms GPT-5 on a key benchmark. This is a huge deal, especially as the community feels the squeeze from skyrocketing hardware costs. The race for efficiency is on, and it's forcing us to get smarter across the entire stack. We're seeing this in the way we build, with a push to deconstruct RAG into clean pipelines and evolve prompt engineering into full-blown agent architecture. While practitioners are shipping impressive agents on practical platforms like n8n, foundational challenges in web automation and agent-to-agent protocols remind us we're still in the messy, exciting middle of building the future. Let's dive in!

NVIDIA's New Agent Model Beats GPT-5?

- The big news: NVIDIA released Orchestrator-8B, an 8-billion-parameter model designed for agentic tasks that it claims scores 37.1% on the Humanity's Last Exam (HLE) benchmark, reportedly outperforming GPT-5's 35.1% — r/LocalLLaMA.

- According to NVIDIA's own testing, the model is designed to coordinate expert tools and is 2.5x more efficient than larger counterparts, excelling at multi-turn tasks — NVIDIA Technical Blog.

- The local AI community is already all over it, with a GGUF version available for CPU and non-NVIDIA GPU users — u/jacek2023.

- For builders: An efficient, open-source agent brain could be a game-changer, but the community is waiting for independent benchmarks to validate NVIDIA's bold claims — r/LocalLLaMA.

Stop Building RAG, Start Building Pipelines

- The big idea: Treat RAG not as one system, but as three distinct pipelines: Ingestion, Retrieval, and Synthesis. This mental model makes debugging and optimization way easier — u/Inferace.

- Many so-called 'RAG failures' are actually just problems in one specific stage, like poor document parsing during ingestion — r/Rag.

- A shared taxonomy of RAG errors helps pinpoint failure points, from the chunker to the final generator — r/Rag.

- For builders: The biggest challenge remains robust validation. Many devs are still stuck with manual testing and lack systematic ways to ensure retrieval quality in production — u/Electrical-Signal858.
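The three-pipeline framing above can be sketched as three separate functions that are each testable on their own. This is a toy sketch under stated assumptions: the function names, the fixed-size word chunker, and the word-overlap scorer (a stand-in for real vector search) are all hypothetical, and the synthesis stage only builds a prompt rather than calling a model.

```python
from collections import Counter


def ingest(documents: dict[str, str], chunk_size: int = 8) -> list[dict]:
    """Ingestion: split each document into fixed-size word chunks."""
    chunks = []
    for doc_id, text in documents.items():
        words = text.split()
        for start in range(0, len(words), chunk_size):
            piece = " ".join(words[start:start + chunk_size])
            chunks.append({"doc_id": doc_id, "text": piece})
    return chunks


def retrieve(chunks: list[dict], query: str, top_k: int = 2) -> list[dict]:
    """Retrieval: rank chunks by word overlap with the query."""
    query_terms = Counter(query.lower().split())

    def score(chunk: dict) -> int:
        terms = Counter(chunk["text"].lower().split())
        return sum((terms & query_terms).values())

    ranked = sorted(chunks, key=score, reverse=True)
    return [chunk for chunk in ranked[:top_k] if score(chunk) > 0]


def synthesize(query: str, context: list[dict]) -> str:
    """Synthesis: build the prompt a generator model would receive."""
    sources = "\n".join(f"[{c['doc_id']}] {c['text']}" for c in context)
    return f"Answer using only these sources:\n{sources}\n\nQuestion: {query}"


docs = {
    "pricing": "The pro plan costs twenty dollars per month and includes priority support",
    "setup": "Install the package then run the init command to create a config file",
}
question = "how much does the pro plan cost"

chunks = ingest(docs)               # inspect here to debug parsing and chunking
hits = retrieve(chunks, question)   # inspect here to debug ranking
prompt = synthesize(question, hits) # inspect here to debug prompt construction
print(prompt)
```

Because each stage returns plain data, a bad answer can be traced to a specific stage by inspecting `chunks`, `hits`, and `prompt` in turn, which is also a natural place to attach automated retrieval checks.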

Local AI Hardware Costs Are Exploding

- The pain point: The cost of RAM for local AI builds is surging. One user reported 192GB of DDR5 RAM jumped from $900 CAD to over $3200 CAD in just one month — u/Hoppss.

- The price hike is driven by massive AI industry demand for High Bandwidth Memory (HBM), which is cannibalizing the consumer DDR5 supply chain — TrendForce.

- Builders are debating everything from the impact of limited PCIe lanes in multi-GPU setups (u/fabkosta) to using a Mac Studio as an all-in-one AI workstation (u/Jadenbro1).

- For builders: Rising costs may force a shift to more efficient models, aggressive quantization, and hybrid cloud-local agent architectures.
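For a sense of scale, the reported jump can be worked out directly. The figures are the ones quoted above (192GB, $900 CAD to $3,200 CAD); the helper name `price_change` is just for illustration.

```python
def price_change(old_price: float, new_price: float) -> tuple[float, float]:
    """Return (multiplier, percent increase) between two prices."""
    multiplier = new_price / old_price
    percent_increase = (new_price - old_price) / old_price * 100
    return multiplier, percent_increase


capacity_gb = 192
old_cad, new_cad = 900, 3200  # figures reported by the user above

multiplier, percent = price_change(old_cad, new_cad)
print(f"{multiplier:.2f}x the old price (+{percent:.0f}%)")
print(f"Per GB: ${old_cad / capacity_gb:.2f} CAD -> ${new_cad / capacity_gb:.2f} CAD")
```

That works out to roughly 3.56x the old price, or about $4.69 per GB rising to about $16.67 per GB.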

Web Agents Are Still Painfully Brittle

- The core problem: Agents are great at reasoning but fall apart when interacting with live websites. The process becomes fragile when dealing with dynamic tables, logins, and forms — u/Reasonable-Egg6527.

- Traditional tools like Selenium and Playwright struggle with the unpredictable nature of the web, making them a poor fit for autonomous agents — r/AI_Agents.

- Users are desperate for solutions to automate complex tasks, like comparing healthcare options across multiple sites that require logins — u/MeltingAnchovy.

- For builders: Keep an eye on emerging AI-native tools like Taxy.AI and MultiOn, which aim to create a more robust web interaction layer. This is a key unsolved problem.

n8n Becomes a Go-To for Agent Builders

- The trend: The low-code automation platform n8n is becoming a hotbed for building and deploying practical, real-world AI agents, with its subreddit buzzing with projects — r/n8n.

- One user shared how to deploy a complete n8n AI stack with a vector DB in just 15 minutes — u/NoBeginning9026.

- The applications are ambitious: one builder created a $10K LLM-powered SEO automation, while another cloned a $100k MRR AI service in only 10 hours — u/MeasurementTall1229, u/Vegetable_Rock_5860.

- For builders: n8n is proving to be an incredibly powerful tool for rapid agent prototyping and deployment, showing the power of visual building for complex AI workflows.

MCP Protocol: "Barely Works" For Now

- The reality check: YC's Garry Tan commented that the Model Context Protocol (MCP) for agent interoperability "barely works," sparking a debate on its maturity — u/Federal-Song-2940.

- The community is split: some are shipping MCP servers for codebase indexing (u/FancyAd4519) and enterprise gateways (u/iambuildin).

- Others are hitting roadblocks, reporting basic client-server handshake failures and worrying there are "more mcp developers than mcp users" — u/Pristine_Rough_6371, u/Shigeno977.

- For builders: MCP is a promising standard to watch, but it's far from ready for production-critical applications. The dream of seamless agent communication is still on the horizon.

Prompt Engineering Becomes Agent Architecture

- The evolution: Prompt engineering is maturing from disposable one-liners into designing structured, reusable agent architectures directly within the prompt — u/Lumpy-Ad-173.

- One user introduced "Caelum," a prompt-only framework for creating multi-role agents (Planner, Operator, Critic) to achieve more predictable behavior without external code — u/HappyGuten.

- Others are using small, reusable "prompt modules" for common tasks, treating prompt design as a core component of the development lifecycle — u/Professional-Rest138.

- For builders: This represents a powerful, code-light way to build more reliable and steerable agents by enforcing structure and decomposing tasks at the prompt level.
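One way to picture reusable prompt modules is as named text blocks composed into a single structured prompt. The sketch below is hypothetical: the role wording, the `ROLE_MODULES` table, and `build_agent_prompt` are illustrative and are not taken from Caelum or any other framework mentioned above.

```python
# Hypothetical role modules; the wording is illustrative only.
ROLE_MODULES = {
    "Planner": "Break the task into numbered steps. Do not execute them.",
    "Operator": "Carry out the Planner's steps one at a time and report each result.",
    "Critic": "Review the Operator's output, list concrete problems, and say whether to revise.",
}


def build_agent_prompt(task: str, roles: list[str]) -> str:
    """Compose one structured prompt from reusable role modules, in order."""
    missing = [role for role in roles if role not in ROLE_MODULES]
    if missing:
        raise KeyError(f"No prompt module for roles: {missing}")
    header = (
        "You will act as several roles in sequence. "
        "Label each part of your reply with the role name."
    )
    sections = [f"[{role}]\n{ROLE_MODULES[role]}" for role in roles]
    return "\n\n".join([header, *sections, f"[Task]\n{task}"])


prompt = build_agent_prompt(
    "Summarize the open issues in this repo.",
    ["Planner", "Operator", "Critic"],
)
print(prompt)
```

Keeping the modules in a table means a role can be reworded or swapped in one place, and the same modules can be reused across tasks.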

Discord Dispatches

Forget black boxes—devs just extracted Claude's 'soul,' revealing the raw principles that guide one of the world's top models.

What a week for transparency! The biggest news dropped in the Claude community, where users extracted the model's core 'soul' document—a raw look at the Constitutional AI principles guiding its behavior. This isn't just academic; it's a tangible blueprint for alignment. This theme of control echoes across the ecosystem, from the Ollama community's push for uncensored local models to the tough questions being asked about Perplexity's curated experience. But as we get more insight, the physical and economic realities are hitting hard. Soaring RAM prices are making self-hosting a luxury, and AI 'wrapper' apps are feeling the squeeze from API costs. For builders, this is the new landscape: unprecedented insight into model minds, coupled with real-world constraints that demand smarter, more efficient design.

Claude's 'Soul' Spec Sparks Alignment Debate

- The big news: Users successfully extracted a core document outlining Claude's behavioral principles, offering a rare, direct look into Anthropic's Constitutional AI in action.

- The document has a powerful "linguistic pull" on models, shaping their behavior from the inside out — niston.

- It candidly frames helpfulness in commercial terms to serve Anthropic's revenue needs, which some praised as refreshingly transparent — real_bo_xilai.

- As one user put it: "Constitutional AI works as designed. Principles in weights — a feature, not a bug" — tkenaz.

- For builders: This provides a concrete example of how foundational models are aligned, but also raises questions about adversarial extraction of these principles — Richard Weiss Blog.

DDR5 RAM Prices Explode, Threaten Self-Hosting

- The big news: The cost of high-capacity DDR5 RAM has skyrocketed, creating a major financial barrier for developers running large models locally.

- A user reported a 192GB kit jumping from ~$650 USD ($900 CAD) to over $2,300 USD ($3,200 CAD) since last October — TrentBot.

- The price surge is driven by high demand for AI servers and production cuts from manufacturers like Micron, Samsung, and SK Hynix — Tom's Hardware.

- Analysts expect tight supply and high prices to continue through 2024 and potentially into 2025 — TrendForce.

- For builders: This trend may push more developers toward cloud APIs or force a greater reliance on highly quantized models to stay within budget.

Ollama Users Demand Uncensored Local Models

- The big news: The Ollama community is focused on running "abliterated" models locally to bypass safety guardrails and gain direct control over outputs.

- The goal is to avoid the model "wasting your time by forcing you to circumnavigate a task it considers improper" — facetiousknave.

- Many are leveraging Ollama's OpenAI-compatible API to integrate local models into existing toolchains and workflows — abdelaziz.el7or.

- Despite compatibility, real-world friction exists, as one dev detailed their struggles configuring Ollama with the continue.dev VS Code extension — devourer_devours.

- A recent documentation refresh at docs.ollama.com signals the platform's growing maturity and commitment to user experience — maternion.
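As a rough illustration of the OpenAI-compatible integration mentioned above, the sketch below builds a chat-completions request aimed at a local Ollama server using only the standard library. It assumes Ollama's default local address (`http://localhost:11434`) and uses `llama3.2` as a placeholder model name; `build_chat_request` is a hypothetical helper. The request is constructed but not sent, so the snippet runs without a server.

```python
import json
import urllib.request

# Assumes Ollama's default local address; adjust if your server differs.
OLLAMA_BASE_URL = "http://localhost:11434/v1"


def build_chat_request(model: str, user_message: str) -> urllib.request.Request:
    """Build an OpenAI-style chat completions request for a local server."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": user_message}],
    }
    return urllib.request.Request(
        url=f"{OLLAMA_BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# "llama3.2" is a placeholder; use any model you have pulled locally.
request = build_chat_request("llama3.2", "Say hello in one word.")
print(request.get_method(), request.full_url)

# To send it (requires a running Ollama server with the model available):
# with urllib.request.urlopen(request) as response:
#     reply = json.loads(response.read())
#     print(reply["choices"][0]["message"]["content"])
```

Because the payload follows the OpenAI chat format, existing tools that let you override the API base URL can usually be pointed at the same address instead of hand-building requests.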

AI Wrappers Face API Cost Squeeze

- The big news: AI tools built on third-party APIs are facing a tough economic reality, forcing them to raise prices and limit services as their own costs rise.

- Cursor users point out "they are the middle man and the middle man is expensive which is why they are cranking up everyones prices" — mutiny.exe.

- Some early subscribers report their $20 plan now gets them about 1/5th of its original value in terms of usage — mutiny.exe.

- This reflects a broader industry challenge, with companies like Jasper also facing layoffs amid high API costs — The Information.

- For builders: The long-term viability of AI wrapper apps depends on providing unique, indispensable value beyond just being a convenient UI for another company's API.

Builders Shift Focus To Multi-Agent Swarms

- The big news: The conversation in builder communities is moving beyond single-purpose bots to architecting complex, multi-agent systems for organizational tasks.

- One user was actively hiring to build a "hive of AI agents to do some roll-based work within an organization hierarchy" — dustin2954.

- For these complex setups, experienced builders are recommending frameworks like `crewai` to manage agent collaboration, planning, and execution — 3xogsavage.

- For builders: The growing need for sophisticated agent orchestration signals a new level of maturity in operationalizing AI for structured business workflows.

Is Perplexity Pro Worth the Price Tag?

- The big news: Users are actively debating if Perplexity's curated experience and bundled model access is a better value than using model APIs directly.

- Some find it superior for work, stating "Claude is 100% better than Gemini" within the platform's environment — jxb7.

- Others are "unimpressed with the search algorithm," questioning the core value add over direct model interaction — blindestgoose.

- Perplexity adds its own system prompts, temperature settings, and RAG, which provides convenience but abstracts the raw model behavior — ssj102.

- For builders: It’s the classic trade-off: a streamlined, all-in-one platform versus the greater control and predictability of direct API access.

The HuggingFace Hub

The open-source agent race just hit ludicrous speed, and HuggingFace is taking the wheel.

What a week for agent builders! HuggingFace just dropped a bombshell, launching a coordinated suite of tools that signals a massive, strategic push into the agent ecosystem. This isn't just another library; it's a full-stack offensive. They're connecting the dots from foundational frameworks like Transformers Agents 2.0 and Agents.js all the way to sophisticated new benchmarks like GAIA 2 that test real-world reasoning. This move, combined with the rise of smaller, specialized open models for tool use, is creating a powerful, integrated, and open-source-first alternative to the established players. For developers, this means more power, more choice, and a clearer path from prototype to production. The tooling is maturing fast—let's dive in!

HuggingFace Doubles Down on Agents

- The big news: HuggingFace is building an integrated, open-source agent ecosystem with the launch of Transformers Agents 2.0 and Agents.js, aiming to be the central hub for agent development.

- A new blog, "License to Call," details their strategy, including a major push to unify how models interact with tools across the ecosystem — HuggingFace.

- Agents.js brings powerful tool-based LLM capabilities to JavaScript and TypeScript, a huge step for building agents directly into web applications — HuggingFace.

- Their Transformers Code Agent already hit an impressive 83.5% accuracy on the notoriously difficult GAIA benchmark, showcasing the power of their stack — HuggingFace.

- For builders: This is a direct challenge to frameworks like LangChain, offering a deeply integrated, open-source-first alternative for building and evaluating agents.

Specialized Models Offer Efficient Tool Use

- The big news: Two new families of smaller, open models have been released, providing lightweight and efficient backbones for task-specific agents.

- A new model series from Distil-labs, Distil-gitara v2, is fine-tuned on Llama 3.2 for Git and CLI commands, available in tiny 1B and 3B parameter sizes — distil-labs.

- A new model from suradev, `toolcaller-bounty8b-v2`, is a fine-tune of BitAgent's Bounty-8B, optimized for a broad range of general-purpose function calling tasks — suradev.

- GGUF versions are available for easy local inference, allowing devs to run these specialized agents on their own machines — mradermacher.

- For builders: You no longer need a massive general-purpose model for every task. These specialized models offer a path to faster, cheaper, and more focused agents.

Tooling Matures for GUI Agent Development

- The big news: The complex challenge of building agents that operate GUIs is getting a major boost from new, standardized tools for evaluation and deployment.

- A new blog from Hugging Face introduces ScreenSuite, a comprehensive evaluation suite to benchmark how well agents perform tasks in real-world applications — huggingface.

- To simplify deployment, the new ScreenEnv project provides a framework for packaging and running full-stack desktop agents — huggingface.

- A new collection of papers on GUI Agents signals a wave of academic research tackling core challenges like visual grounding and long-horizon planning — huggingface.

- For builders: The era of ad-hoc GUI automation is ending. We're now seeing the emergence of robust engineering practices for creating reliable visual agents.

smolagents Gets Vision and MLOps Tooling

- The big news: The minimalist `smolagents` framework is rapidly evolving, adding VLM support to "see" and MLOps integration for production-grade observability.

- Agents can now process images, unlocking multimodal use cases thanks to new Vision Language Model support — Hugging Face.

- A new integration with Arize Phoenix allows developers to trace and evaluate agent execution, a critical step for debugging and improving reliability — Hugging Face.

- The Smol2Operator project demonstrates how these lightweight agents can be post-trained to handle complex GUI-based computer tasks — Hugging Face.

- For builders: `smolagents` proves a code-first, minimalist approach can be both powerful and production-ready, offering a lean alternative to more complex frameworks.

Benchmarks Evolve for Advanced Agent Reasoning

- The big news: Agent evaluation is getting a major upgrade with new benchmarks designed to test sophisticated reasoning and strategic interaction, not just task completion.

- Hugging Face announced GAIA 2 and the Agent Research Environment (ARE), empowering the community to rigorously test agent capabilities on tough, real-world problems — Hugging Face.

- The original GAIA benchmark was so difficult it took human experts over 20 hours on average to complete, setting a high bar for agent performance — Hugging Face.

- New specialized tests are emerging, like DABStep for multi-step data analysis and AI vs. AI, a multi-agent competition to foster strategic skills — Hugging Face.

- For builders: Better benchmarks lead to better agents. These new tools measure what actually matters, pushing the entire field toward more capable and reliable systems.

Trending Spaces Showcase Agents in Action

- The big news: Hugging Face Spaces have become the go-to gallery for applied AI, with trending agent demos offering a real-time look at what's possible today.

- Check out the Virtual Data Analyst, an interactive agent for data exploration built by nolanzandi.

- A popular demo from fdaudens showcases a personal news agent that summarizes daily headlines for you.

- For those who want to build, the open-source web agent studio osw-studio by otst provides a visual builder.

- For builders: The best way to learn is by doing and exploring. The official Agents Course and these community projects are a goldmine of inspiration and reusable code.