Databricks Ignites Open Source Rebellion

The AI arms race just got a new, open-source superpower. Here's what it means for you.

AgentBrief Combined for Dec 08, 2025

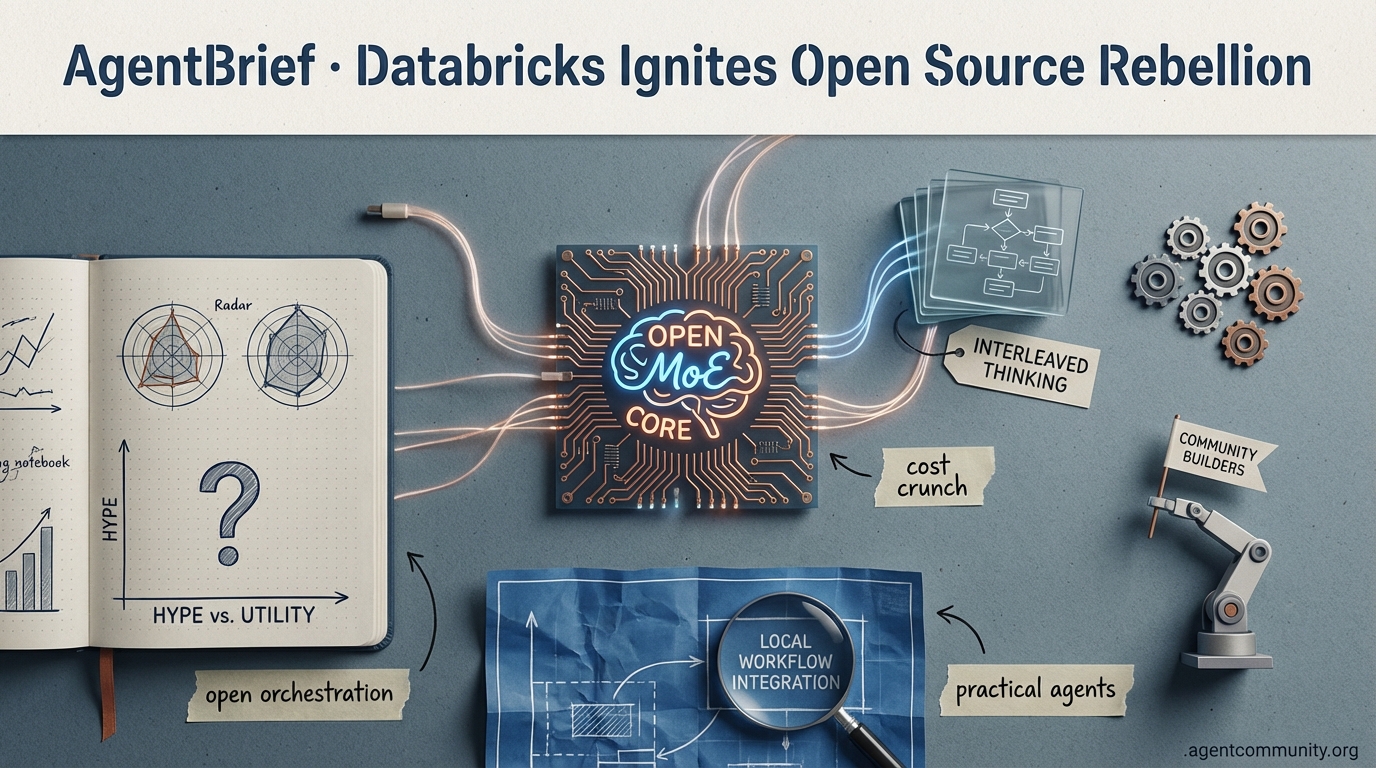

This wasn't just another week in AI; it was a declaration of independence. Databricks' release of DBRX, a powerful open-source Mixture of Experts model, sent a shockwave through the community, marking a potential turning point in the battle against closed-source dominance. The message from platforms like X and HuggingFace was clear: the open community is not just competing; it's innovating at a breakneck pace. But as the silicon dust settles, a necessary reality check is emerging from the trenches. On Reddit and Discord, the conversations are shifting from pure benchmarks to brutal honesty: Is this a hype bubble? How do we actually use these local models in our daily workflows? While developers are pushing the limits with new agent frameworks like CrewAI and in-browser transformers, there's a growing tension between the theoretical power of these new models and their practical, everyday value. This week proved that while the giants can be challenged, the real work of building the future of AI falls to the community, one practical application at a time.

The X-Factor

Your agent's reasoning loop is getting a fundamental rewrite.

This week, the agentic web is being pulled in two powerful directions. On one side, we have models like MiniMax-M2 baking more sophisticated reasoning directly into their architecture with 'Interleaved Thinking.' It's a vision where the model's core inference process is inherently more agentic, a smarter brain that can plan, act, and reflect in a single, fluid loop. On the other side, we have frameworks like LangGraph doubling down on explicit control, giving builders the power to design complex, stateful, and observable agentic systems from the outside in. It’s the classic battle between a more capable core and a more controllable harness.

This isn't an either/or future; it's a 'yes, and.' The most potent agents will combine both: models with innate planning capabilities orchestrated by robust, transparent frameworks. The stories this week—from vector-based communication to programmatic tool-calling—all fit into this narrative. They show a field maturing beyond simple ReAct demos and tackling the hard problems of reliability, efficiency, and control. For those of us shipping agents, understanding this dynamic is everything. It dictates whether you solve a problem with a better prompt, a better model, or a better graph.

Open Source Model Rewrites Agentic Reasoning with 'Interleaved Thinking'

A new open-source model from Chinese lab MiniMax is making waves by tackling one of the biggest failure points in agentic workflows: state management. MiniMax-M2 introduces a technique called 'Interleaved Thinking,' designed for complex, multi-step tasks. Instead of stateless calls, the model dynamically updates its plan after each action, maintaining a persistent state throughout the task. As @rohanpaul_ai detailed, this allows the agent to adapt based on tool outputs and new information, essentially creating a self-correcting reasoning loop. By embedding 'think blocks' directly into the chat history, the model builds an explicit reasoning trace it can refer back to, a critical feature for iterative tasks like coding and debugging, which @cline noted improves reliability.
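To make the pattern concrete, here is a minimal sketch of a loop that keeps think blocks in the chat history rather than stripping them between calls. It is an illustration under stated assumptions, not MiniMax's implementation: `run_agent`, `stub_model`, the `<think>` tag format, and the `run_tests` tool are all hypothetical stand-ins.

```python
from typing import Callable

Message = dict[str, str]

def run_agent(task: str,
              model: Callable[[list[Message]], dict],
              tools: dict[str, Callable[[str], str]],
              max_steps: int = 5) -> list[Message]:
    """Loop that keeps every think block in the chat history."""
    history: list[Message] = [{"role": "user", "content": task}]
    for _ in range(max_steps):
        turn = model(history)
        # Store the reasoning instead of discarding it, so later turns can re-read it.
        history.append({"role": "assistant", "content": f"<think>{turn['think']}</think>"})
        if "answer" in turn:
            history.append({"role": "assistant", "content": turn["answer"]})
            break
        # Run the requested tool and feed its output back into the same history.
        history.append({"role": "tool", "content": tools[turn["tool"]](turn["arg"])})
    return history

def stub_model(history: list[Message]) -> dict:
    """Hypothetical stand-in for a model: it replans from what the history holds."""
    tool_results = [m["content"] for m in history if m["role"] == "tool"]
    if not tool_results:
        return {"think": "I need the test output first.", "tool": "run_tests", "arg": ""}
    return {"think": f"Tests said '{tool_results[-1]}', so the plan is to fix add().",
            "answer": "Patch add() to return a + b."}

tools = {"run_tests": lambda _arg: "1 failed: test_add"}
history = run_agent("Fix the failing test.", stub_model, tools)
for message in history:
    print(message["role"], "->", message["content"])
```

The point of the sketch is the second think block: it exists only because the first one and the tool output are still in the history when the model is called again.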

The results are impressive. MiniMax-M2 has reportedly topped the notoriously difficult SWE-bench leaderboard, outperforming well-known models like DeepSeek and Qwen. But the real story for builders is the efficiency. According to posts from @dlimeng192048, it's 12x cheaper than Claude for comparable tasks, a metric that fundamentally changes production viability. Early adopters are seeing tangible gains, with one report from @thinktools_ai claiming a 35% reduction in token use and 28% lower latency on agentic coding workloads.

For agent builders, this matters because it represents a new architectural pattern for reliability. With weights on Hugging Face and code on GitHub, as shared by @rohanpaul_ai, developers can now build agents whose reasoning state persists across steps, much like working memory, instead of being rebuilt on every call. While the community reaction is overwhelmingly positive, some, like @TheAhmadOsman, have flagged potential infrastructure hurdles for deployment at scale. Still, MiniMax-M2 is a major step forward for open-source models, pushing the entire ecosystem toward more dependable autonomous agents.

LangGraph Pushes Beyond ReAct Loops with State, Memory, and Control

While new models bake in better reasoning, the LangChain ecosystem is betting that true production-grade agents require explicit, controllable orchestration. Their focus on LangGraph signals a strategic shift towards building stateful, resilient systems. Through new courses with DeepLearningAI, they're arming developers with patterns for long-term memory, breaking it down into semantic, episodic, and procedural types to build more sophisticated assistants, as @LangChainAI announced. This push for foundational knowledge covers persistence, agentic search, and crucial human-in-the-loop workflows, giving builders the primitives for creating truly adaptive systems (@LangChainAI).

A key innovation gaining traction is 'Programmatic Tool Calling' (PTC), a pattern originally from Anthropic that LangChain CEO Harrison Chase is championing. As @hwchase17 explained, PTC allows agents to execute tools via code, enabling complex logic, data processing, and bundled operations in a single, efficient call. This is more than a convenience; it's a paradigm shift for tool use. The community is already running with it, with one open-source implementation built on LangChain's DeepAgent achieving a staggering 85-98% token reduction on data-heavy tasks by executing code in sandboxes, highlighted by @LangChainAI.
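A rough sketch of why PTC saves tokens: the model writes a small program, the program calls the tool and crunches the data, and only a compact summary comes back into the context. Everything here is hypothetical (the `fetch_orders` tool, the model-written snippet, the `result` convention), and `exec` with a trimmed namespace is a teaching device, not a real sandbox.

```python
def fetch_orders(region: str) -> list[dict]:
    """Hypothetical tool that returns a large payload."""
    return [{"region": region, "id": i, "total": 10.0 * i} for i in range(1, 1001)]

TOOLS = {"fetch_orders": fetch_orders}

# Code a model might emit: call the tool, filter, and keep only a summary.
MODEL_WRITTEN_CODE = """
orders = fetch_orders("EU")
big = [o for o in orders if o["total"] > 9000]
result = {"count": len(big), "revenue": sum(o["total"] for o in big)}
"""

def run_tool_program(code: str) -> dict:
    """Execute model-written code with access to the tools and a few builtins.

    WARNING: this is not isolation. A production setup would run the code
    in a separate, locked-down process or container.
    """
    namespace = {"__builtins__": {"len": len, "sum": sum}, **TOOLS}
    exec(code, namespace)
    return namespace["result"]

summary = run_tool_program(MODEL_WRITTEN_CODE)
raw_size = len(str(fetch_orders("EU")))   # what a plain tool call would return
summary_size = len(str(summary))          # what the model sees with PTC
print(summary, raw_size, summary_size)
```

With a conventional tool call, all 1,000 order records would land in the context window; here the model only ever sees the two-field summary.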

Why should builders care? The conversation is moving from demos to deployment. As discussed in a recent chat with LangChain's Anika Somaia, the real challenges are in handling long-running tasks and complex tool interactions gracefully (@jxnlco). LangGraph provides the observability and control to manage this complexity. Tutorials on multi-agent orchestration and human-in-the-loop processes, shared by @LangChainAI, show how to coordinate specialized agents for real-world jobs. This is the toolkit for building agents that don't just work, but are also maintainable and reliable in production.

Is Text Obsolete? Research Proposes Agent Communication via Hidden Vectors

A provocative paper from researchers at Stanford, Princeton, and the University of Illinois suggests a radical overhaul for multi-agent communication: ditching text for direct hidden vector exchange. This approach, highlighted by @rohanpaul_ai, bypasses the expensive process of generating and parsing natural language, promising up to 4x faster inference and a 70-80% reduction in output tokens. By transferring rich internal signals directly, agents could collaborate with far less overhead, potentially breaking through current bottlenecks in complex, high-throughput workflows.

Community reaction on X is a mix of excitement and healthy skepticism. While builders like @AI_Researcher see revolutionary potential for reducing latency in real-time systems, others raise critical questions. @TechSkeptic voiced concerns about the interpretability and compatibility of this method across different agent architectures. Meanwhile, @AgenticFuture warned that direct vector exchanges could obscure accountability, stressing the need for robust logging and oversight. This research points to a more efficient future for multi-agent systems, but one that requires new solutions for transparency and safety.

DeepSeekMath-V2 Puts Agent Reasoning, Not Just Answers, Under the Microscope

For agent builders, a correct answer derived from flawed logic is a ticking time bomb. A new paper on DeepSeekMath-V2 confronts this issue head-on, arguing that our benchmarks are broken. As @omarsar0 points out, models often get lucky, brute-forcing solutions or guessing their way to accuracy. This is unacceptable for agents in high-stakes domains like science or finance, where the reasoning process is paramount. DeepSeekMath-V2's strict grading, which aligns more closely with human evaluation, prioritizes the integrity of the reasoning path, not just the final result, a crucial step for building trustworthy systems, as noted by @zjasper.
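The difference between grading answers and grading reasoning fits in a few lines. This toy grader is only an illustration of the idea, not the paper's method: it checks simple arithmetic steps mechanically, and the `grade` function and step format are assumptions made for the example.

```python
import operator

OPS = {"+": operator.add, "-": operator.sub, "*": operator.mul}

def grade(steps: list[tuple], final_answer: int, expected: int) -> tuple[bool, bool]:
    """Return (answer_ok, reasoning_ok) for a chain of (a, op, b, claimed) steps."""
    every_step_valid = all(OPS[op](a, b) == claimed for a, op, b, claimed in steps)
    # The chain must also end at the answer the model actually gave.
    chain_reaches_answer = bool(steps) and steps[-1][3] == final_answer
    return final_answer == expected, every_step_valid and chain_reaches_answer

# Task: compute (3 + 4) * 2. Both attempts output 14.
sound = [(3, "+", 4, 7), (7, "*", 2, 14)]
lucky = [(3, "+", 4, 8), (8, "*", 2, 14)]  # two wrong steps that cancel out

print(grade(sound, 14, 14))  # (True, True)
print(grade(lucky, 14, 14))  # (True, False)
```

An answer-only benchmark scores both attempts identically; a reasoning-aware grader flags the second one.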

This focus on reasoning quality is resonating with developers. On X, @zjasper also highlighted its superior performance in math grading benchmarks over competitors like Gemini-3-Pro. However, the broader conversation acknowledges that this is only one piece of the puzzle. As @omarsar0 has previously discussed, real-world agents still grapple with knowledge gaps and adaptability issues that no single model can solve. A counter-take suggests that focusing on smaller, more efficient models trained specifically on reasoning traces could offer a more practical path forward for many applications (@omarsar0).

OpenCode Agent Doubles Down on Modular, Composable Primitives

The open-source coding agent OpenCode is carving out a niche by prioritizing developer control through a modular, primitive-based architecture. A recent update, detailed by @thdxr, introduced an 'Explore subagent' for file system operations, which can be slotted in via a simple config file. This showcases the project's core philosophy: building complex agent behaviors from composable, overridable building blocks. By giving developers this granular control, OpenCode stands in contrast to more opaque, proprietary coding agents, championing a more transparent and empowering approach to agent construction (@thdxr).

The framework's client/server model and API accessibility are earning praise for enabling remote shells and sandboxing, which @thdxr highlighted as a boon for distributed dev environments. However, the community also offers constructive feedback. While the core abstractions are strong, @pietrozullo noted that immature parts of the system still contain hacks that need refinement. Furthermore, managing complex sessions or isolation could be a hurdle for some users until UI improvements, like a planned desktop app, are released, a point raised by @bunsen. OpenCode represents an exciting direction for open-source agents, balancing powerful flexibility with the typical growing pains of a young framework.

The Business Case for Agents Is Here, Turning Scarcity into Abundance

The economic argument for AI agents is rapidly solidifying, moving from abstract potential to tangible business value. As Box CEO Aaron Levie articulated, agents are making previously cost-prohibitive tasks, like continuous security code reviews or real-time contract analysis, economically viable (@levie). The key insight is that this value comes not from a distant AGI, but from today's tool-using, domain-specific agents that process external data to solve immediate problems (@levie). The macro trend is undeniable: a McKinsey report cited by @rohanpaul_ai shows demand for 'AI fluency' has surged 7x and automation could unlock $2.9 trillion in value by 2030.

This shift is poised to massively expand the software market by automating work in unstructured, data-heavy industries like legal and healthcare, as @levie argued. However, the reality on the ground is more nuanced. A recent study shared by @rohanpaul_ai found that most enterprise agents today are built on off-the-shelf models but are tightly controlled and have limited autonomy, suggesting a gap between hype and implementation. The consensus is that agents are powerful collaborators, not replacements. As @levie emphasized, domain expertise remains critical to harnessing their power. For builders, this means the biggest opportunities lie in creating agents that augment human experts within existing workflows.

Quick Hits

Models for Agents

- Gemini 3 Pro showed strategic planning in SnakeBench by intentionally trapping its Opus 4.5 opponent to win, a notable display of agentic foresight noted by @GregKamradt.

- New research shows fixed prompts in LLM benchmarks can systematically underestimate model performance, a critical insight for anyone evaluating models for agents (@dair_ai).

- Even top-tier LLMs struggle with basic planning and state tracking, solving only 68% of simple 8-puzzle games, highlighting a key reliability challenge for agents (@rohanpaul_ai).

- Developer @omarsar0 calls Opus 4.5 'hands-down the best' model for his coding workflows due to its superior understanding of intent.

Tool Use & Function Calling

- LlamaIndex can now extract massive structured tables from documents with 100% accuracy, a task where naive LLM output often fails, according to @jerryjliu0.

- A new open-source, LLM-powered web browsing agent has been released for developers to experiment with, shared by @tom_doerr.

- A collection of specialized sub-agents for use with Claude Code is now available as an open-source library (@tom_doerr).

- Workflow automation tool n8n now has a browser automation node, enabling more complex agentic web interactions (@tom_doerr).

Memory & Context

- Agi-Memory, a new library, combines vector, graph, and relational DBs to emulate episodic, semantic, and procedural memory for agents (@QuixiAI).

- Builder @raizamrtn hopes 2026 will finally be the year context windows and context rot are solved, unlocking better long-running agents.

- Researcher @omarsar0 predicts that context engineering will become an even more crucial skill for building effective agent harnesses in scientific domains.

Agentic Infrastructure

- The hardware wars are heating up: a thread from @IntuitMachine details Anthropic's $52B deal to buy Google's TPUv7 chips directly, bypassing Nvidia.

- Developers running large clusters report that Nvidia B200 GPUs are experiencing 'astronomical' failure rates, impacting training stability for agent models (@Teknium).

- For builders using vector stores, Supabase now supports the `halfvec` type for creating indexes on vectors with more than 2,000 dimensions (@supabase).

Developer Experience

- VibeLogger, a new proxy tool, lets developers log interactions with Codex, Claude Code, and Gemini CLI to a local Arize Phoenix instance for analysis (@QuixiAI).

- With the rise of AI-generated code, @svpino argues that writing automated tests is more critical than ever to prevent 'monstrosity' from vibe-coded software.

- The team behind the AI-native editor Cursor is holding a developer meetup in London on Dec 10, announced by @ericzakariasson.

Industry & Ecosystem

- A US House panel summoned Anthropic's CEO after a report of a state-backed group allegedly using Claude for the 'first large AI-orchestrated cyber espionage campaign' (@rohanpaul_ai).

- The White House has launched the 'Genesis Mission,' a 'Manhattan-Project' style initiative to accelerate AI-driven scientific discovery (@CoinDesk).

- Investor @bindureddy predicts that 2026 will be the year of open-source AI, driven by releases from both US companies and a major push from China.

Reddit Reality Check

The cost to build just tripled, and your agent still can't log into a website.

This week felt like a massive reality check for anyone building AI agents. On one hand, the theoretical power at our fingertips is exploding. NVIDIA dropped an 8B parameter model, Orchestrator-8B, that it claims outguns GPT-5 on agentic tasks, and the community is evolving from simple prompts to full-blown agent architectures. But theory is cheap. The reality on the ground is getting brutal. A viral post showed the cost for a decent local AI rig's RAM tripling in a month, pushing the dream of powerful, self-hosted models out of reach for many. At the same time, a collective groan is rising from developers whose brilliant agent plans fall apart the second they have to interact with a real-world website. We're in AI's awkward teenage years: the brain is getting smarter at an incredible rate, but the body is clumsy, the tools are brittle, and the allowance just got cut off. This widening gap between potential and practice is the defining challenge for builders right now.

NVIDIA Releases Orchestrator-8B, Throws Down the GPT-5 Gauntlet

Just when you thought the model release wars were quieting down, NVIDIA entered the chat with Orchestrator-8B, an 8-billion-parameter model purpose-built for agentic work. The bombshell claim, first shared in r/LocalLLaMA, is that it scores 37.1% on the Humanity's Last Exam (HLE) benchmark, supposedly edging out GPT-5's 35.1%. Of course, this comes from NVIDIA's own internal testing, so grab your grain of salt, but the claim itself signals a major push toward smaller, more specialized agent brains.

According to NVIDIA's technical blog, the model is designed to be a master conductor, coordinating other models and tools to solve complex, multi-step problems 2.5x more efficiently than larger models. For the local AI community, this is a tantalizing prospect. A GGUF version was quickly made available by u/jacek2023, and builders are already buzzing in r/LocalLLaMA about plugging this into their agent workflows. A potent, open-source orchestrator could dramatically lower the cost and complexity of building sophisticated agents, but the community is wisely waiting for independent benchmarks before crowning a new king.

Rethinking RAG: It’s Not One System, It’s Three Pipelines

It's time for some tough love on RAG. A popular post in r/Rag from u/Inferace argues we need to stop talking about Retrieval-Augmented Generation as one monolithic thing. Instead, we should see it for what it is: three distinct pipelines—Ingestion, Retrieval, and Synthesis. This isn't just semantics; it's a fundamental shift in how we build and debug. When your RAG system hallucinates, is it a 'RAG problem,' or is it a bad document chunker in your Ingestion pipeline? This modular view, echoed by industry blogs from players like Cohere, is key to maturing our toolchains.
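Here is one way the three-pipeline split might look in code. It is a deliberately tiny sketch: word overlap stands in for embeddings, the synthesis stage only builds the prompt a generator would receive, and all function names are made up for the example.

```python
def ingest(docs: dict[str, str], chunk_size: int = 8) -> list[dict]:
    """Ingestion: split each document into fixed-size word chunks."""
    chunks = []
    for doc_id, text in docs.items():
        words = text.split()
        for i in range(0, len(words), chunk_size):
            chunks.append({"doc": doc_id, "text": " ".join(words[i:i + chunk_size])})
    return chunks

def retrieve(chunks: list[dict], query: str, k: int = 1) -> list[dict]:
    """Retrieval: rank chunks by word overlap (a stand-in for vector search)."""
    query_words = set(query.lower().split())
    def overlap(chunk: dict) -> int:
        return len(query_words & set(chunk["text"].lower().split()))
    return sorted(chunks, key=overlap, reverse=True)[:k]

def synthesize(query: str, context: list[dict]) -> str:
    """Synthesis: assemble the grounded prompt for the generator model."""
    sources = "\n".join(f"[{c['doc']}] {c['text']}" for c in context)
    return f"Answer using only these sources:\n{sources}\n\nQuestion: {query}"

docs = {
    "a": "The halfvec type stores vectors at half precision",
    "b": "DDR5 memory prices rose sharply this month",
}
chunks = ingest(docs)
top = retrieve(chunks, "why did memory prices rise")
print(synthesize("why did memory prices rise", top))
```

Because each stage has its own inputs and outputs, a bad answer can be traced: inspect `chunks` for a chunking problem, `top` for a ranking problem, and the final prompt for a synthesis problem.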

This framework makes debugging suck less. A separate r/Rag discussion highlighted a taxonomy of errors, showing how failures can occur at any point, from the embedder to the final generator. The big question, raised by u/Electrical-Signal858 in r/LlamaIndex, is how to validate these pipelines systematically. Frameworks like RAGAs are emerging to provide metrics, and techniques like sentence window retrieval are improving accuracy, but many are still stuck with manual spot-checking. Deconstructing RAG gives builders a clearer mental model, turning a black box into a series of transparent, fixable components.

Local AI Builders Face Soaring Hardware Costs as DRAM Prices Explode

The dream of running a powerful AI rig in your garage just hit a wall of brutal economic reality. A post from u/Hoppss on r/LocalLLaMA went viral after they showed their 192GB DDR5 RAM setup skyrocketing from $900 CAD to over $3200 CAD in a single month. This isn't a fluke. The insatiable demand for High Bandwidth Memory (HBM) in the enterprise AI sector is squeezing the consumer DDR5 supply chain, and analysts at TrendForce are forecasting further price hikes.

This hardware squeeze is forcing tough conversations. Builders are debating the real-world impact of limited PCIe lanes, as u/fabkosta questioned, or comparing the best GPUs under $1000, a topic explored by u/ChonkiesCatt. While an RTX 4090 can still crank out a respectable 15 tokens/second on a 70B model, according to u/seaandpizza, the rising costs are pushing builders to get creative. Some are eyeing Mac Studios with unified memory, as u/Jadenbro1 suggested, or even Raspberry Pis for lightweight, 24/7 tasks, per u/Nigeldevon0. For agent builders, the message is clear: optimize or get priced out. The era of cheap, abundant local compute is on pause.

The Achilles' Heel of Agents: Brittle, Unreliable Web Automation

You can have the smartest planning model in the world, but it's worthless if it can't click a login button. A thread in r/AI_Agents captured this perfectly, with user u/Reasonable-Egg6527 lamenting that agents become hopelessly "fragile" when faced with dynamic tables and forms on live websites. This is the gap where countless ambitious agent projects go to die—the messy, unpredictable reality of the web.

Users like u/MeltingAnchovy are trying to build agents for complex tasks like comparing healthcare plans across multiple sites, but the tooling is falling short. Traditional libraries like Selenium and Playwright, designed for predictable test environments, often crumble under the chaotic nature of real-world web apps. This has created a desperate search for AI-native solutions. Frameworks like Taxy.AI and platforms like MultiOn are emerging to create a more robust browser interaction layer. The holy grail may lie with visual language models (VLMs) that can 'see' a webpage like a human, but for now, building a reliable browser-based agent remains one of the biggest unsolved problems in the space.

n8n Emerges as a Hotbed for Practical AI Agents, Challenging the Low-Code Status Quo

While hardcore coders debate frameworks, a quiet revolution is happening in a surprising place: the r/n8n subreddit. The open-source, low-code automation platform has become a vibrant hub for building and deploying surprisingly sophisticated AI agents. This isn't your marketing department's simple Zapier workflow; this is serious, multi-component AI development happening in a visual canvas.

Practitioners are pushing the platform to its limits. u/NoBeginning9026 shared a method to deploy a full AI stack with a vector database in just 15 minutes. The community is even building its own tools, like the unofficial Gemini File Search node from u/CrazyRoad for easy RAG. The ambition is staggering, from a $10K LLM-powered SEO automation by u/MeasurementTall1229 to a clone of a $100k MRR AI service built in 10 hours by u/Vegetable_Rock_5860. While visual builders excel at this kind of rapid prototyping, some developers note they can struggle with managing state in complex, long-running agents, a common growing pain for low-code platforms venturing into enterprise-grade territory.

Is MCP Ready for Prime Time? Community Debates the Protocol's Immaturity

The dream of a universal standard for connecting agents to tools and data is a beautiful one. The reality? Not so much. A frank comment from Y Combinator's Garry Tan, stating that the Model Context Protocol (MCP) "barely works," has kicked off a sobering debate in the r/mcp community, a conversation highlighted by u/Federal-Song-2940. The exchange captures the tension between the protocol's grand vision and its current, wobbly state.

On one side, dedicated builders like u/FancyAd4519 are releasing MCP servers for specific uses like codebase indexing, and u/iambuildin is open-sourcing enterprise-grade gateways. But on the other, the forums are filled with cries for help, from basic client-server handshake failures reported by u/Pristine_Rough_6371 to the existential dread of u/Shigeno977, who wonders if there are “more mcp developers than mcp users.” As multiple analyses have pointed out, standardization for agent protocols is in its infancy. For now, MCP feels more like a promising but buggy beta than a protocol ready for production.
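For anyone debugging a failed handshake, it can help to see how small the opening exchange is. MCP messages are JSON-RPC 2.0, and a session starts with an `initialize` request. The sketch below reflects one reading of the public spec; the field names and the `2024-11-05` version string should be checked against the spec revision you target, and the helper names are invented for this example.

```python
import json

def initialize_request(request_id: int, client_name: str) -> str:
    """Build the client's opening message (fields as assumed from the spec)."""
    return json.dumps({
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "initialize",
        "params": {
            "protocolVersion": "2024-11-05",
            "capabilities": {},
            "clientInfo": {"name": client_name, "version": "0.1.0"},
        },
    })

def check_initialize_response(raw: str, request_id: int) -> list[str]:
    """Return a list of problems in the server's reply (empty means it looks OK)."""
    try:
        msg = json.loads(raw)
    except json.JSONDecodeError:
        return ["reply is not valid JSON"]
    if not isinstance(msg, dict):
        return ["reply is not a JSON object"]
    problems = []
    if msg.get("jsonrpc") != "2.0":
        problems.append("missing or wrong 'jsonrpc' version")
    if msg.get("id") != request_id:
        problems.append("reply id does not match request id")
    if "error" in msg:
        problems.append(f"server returned an error: {msg['error']}")
    elif "protocolVersion" not in msg.get("result", {}):
        problems.append("result has no 'protocolVersion'")
    return problems

good = json.dumps({"jsonrpc": "2.0", "id": 1,
                   "result": {"protocolVersion": "2024-11-05", "capabilities": {}}})
bad = json.dumps({"jsonrpc": "2.0", "id": 7, "result": {}})
print(check_initialize_response(good, 1))
print(check_initialize_response(bad, 1))
```

A checker like this will not fix a broken server, but it turns "the handshake fails" into a specific complaint you can post in the forum.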

From Prompts to Protocols: Engineering Agent Architectures in Plain Text

The art of talking to a machine is getting a serious upgrade. Prompt engineering is rapidly evolving from a dark art of keyword-mashing into a structured discipline of agent architecture. As u/Lumpy-Ad-173 put it, treating your chat history like a disposable coffee cup means "deleting 90% of the value." The new philosophy is to build durable, reusable prompting frameworks that function like software.

This shift is all about structure. User u/HappyGuten introduced "Caelum" on r/PromptEngineering, a framework that uses only prompt scaffolding to create sophisticated multi-role agents (like a Planner, Operator, and Critic) within a single context window. On a more granular level, u/Professional-Rest138 shared a workflow using small, reusable "prompt modules" for common tasks. These techniques aim to make agent behavior more predictable and reliable without a single line of external code. It's a sign of a maturing field, though some commenters worry that overly rigid structures might stifle model creativity. The debate is on, but one thing is clear: the humble prompt is becoming a blueprint for intelligence.
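As a rough illustration of "prompt modules," the snippet below composes reusable role blocks into a single prompt. The module text and the `build_prompt` helper are invented for this example; they are not taken from Caelum or from the workflows mentioned above.

```python
# Reusable prompt modules, one per role. The wording is hypothetical.
MODULES = {
    "planner": "ROLE: Planner. Break the task into numbered steps. Do not execute them.",
    "operator": "ROLE: Operator. Carry out the Planner's steps one at a time.",
    "critic": "ROLE: Critic. Check the Operator's output against the plan and list defects.",
}

def build_prompt(task: str, roles: list[str]) -> str:
    """Assemble the selected role modules, in order, followed by the task."""
    sections = [f"### {name.upper()}\n{MODULES[name]}" for name in roles]
    sections.append(f"### TASK\n{task}")
    return "\n\n".join(sections)

prompt = build_prompt("Summarize this week's RAM price thread.",
                      ["planner", "operator", "critic"])
print(prompt)
```

Treating modules as data means a role can be swapped or versioned without touching the rest of the prompt, which is most of what "prompts as software" amounts to in practice.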

Dispatch from Discord

This week, AI's soul was revealed—and it has a price tag.

The ghost in the machine is starting to talk, and it’s talking about money. This week felt like a system-wide reality check, where the abstract ideals of AI alignment collided with the harsh economics of building it. We saw the literal 'soul' of Anthropic's Claude extracted, and its core principles were not just philosophical, but explicitly commercial. It was a stark reminder that these models serve masters. At the same time, the dream of escaping this ecosystem by running powerful models locally hit a financial wall, with RAM prices exploding to absurd levels. This creates a vicious cycle: the tools built to make AI accessible are getting squeezed by rising API costs, pushing developers to seek local alternatives that are now becoming prohibitively expensive. This isn't just a technical challenge anymore; it's an economic one. The question now is who can actually afford to build the future, and what compromises will they have to make along the way?

Anthropic's 'Soul' Is Bared, Revealing Commercial DNA

A fascinating bit of model spelunking in the Claude Discord revealed what users have dubbed its 'soul specification'—a core document outlining its behavior. This wasn't some mystical secret, but a tangible piece of Anthropic's Constitutional AI, and it had a powerful "linguistic pull" on models like Opus 4, as niston noted. The document gives builders a rare, unvarnished look at the principles baked directly into the model's weights.

The reaction was a mix of surprise and cynical acceptance. User real_bo_xilai pointed out the candid commercial framing, where Claude's helpfulness is directly tied to Anthropic's bottom line. For some, this was a refreshing dose of transparency; for others, a disillusioning peek behind the curtain. As tkenaz aptly put it, "Constitutional AI works as designed... The word 'soul' added a dash of drama." This episode, analyzed further by observers like Richard Weiss, underscores a critical point for agent builders: the foundational values of a model, commercial or otherwise, are not just suggestions—they are baked-in features that will shape every output.

Perplexity Pro Sparks Debate: Is Convenience Worth Losing Control?

The classic build-vs-buy debate is raging in the Perplexity community, fueled by trial access to Claude 4.5. Is a polished, all-in-one service like Perplexity Pro worth the subscription when you could just use the APIs directly? The answer, it seems, depends entirely on your priorities. Fans like jxb7 are all in, claiming that for their work, "Claude is 100% better than Gemini," and that Perplexity is a superior assistant to ChatGPT. Others, like blindestgoose, remain "unimpressed with the search algorithm."

The tension lies in the value of Perplexity's secret sauce. As ssj102 explained, Perplexity isn't just a simple pass-through; they implement their own system prompts, temperature settings, and RAG. This layer of abstraction is convenient, but it also creates a black box, leaving some to wonder if they're truly getting the model they selected, a concern voiced by infinitypro.live. For builders, it's the ultimate trade-off: a streamlined platform that gets you moving fast, or direct API access that offers granular control and absolute predictability.

On The Ground, Ollama Users Demand Uncensored Models and Better Tools

Meanwhile, in the trenches of local AI, the Ollama community is laser-focused on one thing: control. There's a persistent demand for 'abliterated' models—versions with the safety guardrails sledgehammered off. As facetiousknave bluntly stated, "The whole point of having an abliterated model is that it won’t waste your time by forcing you to circumnavigate a task it considers improper." It’s a pragmatic desire to get the job done without a lecture, reflecting a broader sentiment in the open-source community for utility over pre-packaged morality.

This DIY ethos extends to tooling. While many leverage Ollama's OpenAI-compatible API for easy integration (abdelaziz.el7or), it's not always plug-and-play. One developer, devourer_devours, detailed their struggles with a VS Code extension, illustrating the friction that still exists in the local ecosystem. In a promising sign of maturity, however, the community celebrated a recent refresh of the official documentation, as pointed out by maternion. Better docs are a lifeline, signaling that the platform is serious about smoothing out the rough edges for the builders who depend on it.
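For reference, this is roughly what talking to Ollama's OpenAI-compatible endpoint looks like with nothing but the standard library. The sketch assumes Ollama is running on its default port (11434) and that a model has already been pulled; the model name `llama3.2` is only an example.

```python
import json
import urllib.request

OLLAMA_BASE_URL = "http://localhost:11434/v1"  # default local address

def build_chat_request(model: str, prompt: str) -> urllib.request.Request:
    """Build an OpenAI-style chat completion request aimed at Ollama."""
    body = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        f"{OLLAMA_BASE_URL}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )

def chat(model: str, prompt: str) -> str:
    """Send the request and return the reply text. Requires a running server."""
    with urllib.request.urlopen(build_chat_request(model, prompt)) as response:
        return json.load(response)["choices"][0]["message"]["content"]

request = build_chat_request("llama3.2", "Say hello in five words.")
print(request.full_url)
# To actually call the model (needs Ollama running locally):
# print(chat("llama3.2", "Say hello in five words."))
```

Because the request shape matches OpenAI's, most existing clients only need their base URL changed, which is why this route is popular when it works.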

The Local LLM Dream Hits a Financial Wall as RAM Prices Explode

Just as the demand for local control intensifies, the cost of entry is skyrocketing. A stark warning from TrentBot in the LocalLLM community sent a chill through builders: the price of high-capacity DDR5 RAM has gone parabolic. A kit of 192GB RAM that cost $650 USD last fall now commands a staggering $2,300 USD. This isn't just a minor hike; it's a financial barrier being erected in real-time.

The price surge is a perfect storm, driven by ravenous demand from enterprise AI servers and strategic production cuts by giants like Micron and Samsung, as reported by Tom's Hardware. Analysts at TrendForce predict no relief in sight, with high prices likely to persist. This brutal economic shift threatens to price out the very hobbyists and independent developers who fuel the open-source movement, potentially forcing them back into the arms of the cloud providers they sought to escape. The dream of running a powerful, personal AI is quickly becoming a luxury item.

Ambitions Escalate From Single Bots to Multi-Agent Swarms

The definition of 'agent' is rapidly evolving. Simple, single-purpose bots are no longer the end goal; the new frontier is architecting complex, collaborative systems. A request from dustin2954 in the n8n community to hire someone to build a "hive of AI agents" for role-based work illustrates this perfectly. The ambition has shifted from automation to orchestration.

As builders dream bigger, they're reaching for more powerful tools. In response to the query, experienced user 3xogsavage immediately recommended CrewAI for its ability to handle "really complicated multiple ai agent setup." This highlights a growing need for robust frameworks that can manage planning, memory, and execution among a team of specialized agents. It's a clear sign that the ecosystem is maturing, moving toward operationalizing AI in structured, business-centric workflows that mimic a real human organization.

The Squeeze Is On: AI's 'Middle Men' Face a Unit Economics Crisis

If you're building an AI tool on top of someone else's API, the clock is ticking. A frank conversation in the Cursor Discord laid bare the precarious economics of AI wrapper applications. As user mutiny.exe pointed out, "they dont actually make the ai they just make the tool... they are the middle man and the middle man is expensive." This pressure is forcing services to raise prices and tighten limits, with some users noting their $20 subscription now buys them a fraction of what it used to.

This isn't just a Cursor problem; it's a systemic issue threatening thousands of applications, from Jasper (which recently had layoffs, per The Information) to Copy.ai. These companies are caught between fluctuating, expensive API costs and customer expectations. The long-term survivors will be those who provide indispensable value beyond a slick UI for a foundational model. The race is on to build a defensible moat before the rising tide of API costs swamps the entire business model.
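The squeeze is easy to see with back-of-the-envelope arithmetic. The $20 subscription comes from the discussion above; the token counts and the per-token price below are made-up illustration values, not figures from any provider.

```python
def monthly_margin(price: float, requests: int,
                   tokens_per_request: int, cost_per_million_tokens: float) -> float:
    """Subscription revenue minus API spend for one user in one month."""
    api_cost = requests * tokens_per_request * cost_per_million_tokens / 1_000_000
    return price - api_cost

def break_even_requests(price: float, tokens_per_request: int,
                        cost_per_million_tokens: float) -> int:
    """Number of requests at which API spend equals the subscription price."""
    return int(price * 1_000_000 / (tokens_per_request * cost_per_million_tokens))

# Hypothetical inputs: 20,000 tokens per request at $10 per million tokens.
limit = break_even_requests(20, 20_000, 10.0)
heavy_user = monthly_margin(20, 300, 20_000, 10.0)
print(limit, heavy_user)  # 100 -40.0
```

Under these assumptions a single heavy user making 300 requests costs the wrapper $40 more than they pay, which is the kind of math that leads to tighter limits.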

HuggingFace Headlines

This week, AI agents stopped being a science project and started applying for jobs.

For the past year, 'AI agents' have been the industry's favorite buzzword, often amounting to little more than brittle chains of API calls wrapped in a slick demo. It was a promise of autonomy that felt perpetually just around the corner. This week, that changed. The narrative shifted from speculative hype to serious engineering, driven by a strategic, full-stack assault from the open-source world. We're witnessing a coordinated effort to build the core infrastructure—the frameworks, the specialized models, the evaluation suites—needed for agents to actually perform reliable work. This isn't about one magic model that can do everything; it's about building a robust ecosystem of tools that lets developers create agents for specific, valuable tasks. The Cambrian explosion of agents is finally happening, not in a single big bang, but in the deliberate, interlocking release of practical tools. And it signals the moment the rubber truly meets the road for autonomous AI.

HuggingFace Makes Its Big Play for the Agent Stack

HuggingFace is no longer just a hub for models; it's making an aggressive play to become the definitive open-source platform for building agents. In a blog post aptly titled "License to Call," the company unveiled Transformers Agents 2.0, a major overhaul designed to create robust, tool-using agents. This isn't just an update; it's a statement of intent, aiming to provide a powerful, integrated alternative to established players like LangChain. To bring this power to the most common development surface on earth—the web—they've also launched Agents.js, a move developers are hailing as a critical step for creating truly interactive, AI-powered web applications.

This is all part of a grander vision for a standardized agent ecosystem. Their "Tool Use, Unified" strategy, detailed in a HuggingFace blog (Source: Blog), seeks to create a common language for how all models interact with tools, a foundational piece of the puzzle. They're also backing it up with results, showcasing their Transformers Code Agent achieving a staggering 83.5% accuracy on the notoriously difficult GAIA benchmark. By bundling development frameworks with evaluation tools like OpenEnv, HuggingFace is creating a flywheel, betting that an open, all-in-one ecosystem is the best way to win the agent wars.
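A "common language" for tools usually means describing each function in a machine-readable schema that any model can be shown. The sketch below derives a JSON-schema-style description from a Python function's signature. The `describe_tool` helper, the `get_weather` example, and the exact output shape are assumptions for illustration; they follow the widely used function-calling layout rather than reproducing Hugging Face's implementation.

```python
import inspect
import json
from typing import get_type_hints

# Map a few Python types to their JSON Schema names.
_JSON_TYPES = {int: "integer", float: "number", str: "string", bool: "boolean"}


def describe_tool(fn):
    """Build a JSON-schema-style tool description from type hints and docstring."""
    hints = get_type_hints(fn)
    hints.pop("return", None)
    params = inspect.signature(fn).parameters
    properties = {name: {"type": _JSON_TYPES[hints[name]]} for name in params}
    # Parameters without a default value are treated as required.
    required = [name for name, p in params.items()
                if p.default is inspect.Parameter.empty]
    return {
        "type": "function",
        "function": {
            "name": fn.__name__,
            "description": inspect.getdoc(fn) or "",
            "parameters": {
                "type": "object",
                "properties": properties,
                "required": required,
            },
        },
    }


def get_weather(city: str, celsius: bool = True) -> str:
    """Return a short weather summary for a city."""
    return f"Sunny in {city}"


schema = describe_tool(get_weather)
print(json.dumps(schema, indent=2))
```

The appeal of a shared format is that the same description can be handed to different models without rewriting the tool for each one.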

The Rise of the Specialist: Small Models Get Big Jobs

While massive, general-purpose models grab headlines, a new class of smaller, specialized models is emerging to do the actual grunt work. Distil-labs has released Distil-gitara v2 (Source: Model), a family of models fine-tuned from Llama 3.2 specifically to master Git and the command line. At just 1B and 3B parameters, these models are lean enough to run locally, offering developers a private, efficient backbone for coding assistants without the API costs.

On the more generalist side, the new toolcaller-bounty8b-v2 model (Source: Model) is a fine-tune of BitAgent's Bounty-8B, optimized for a wide range of function-calling tasks. This reflects a broader industry trend noted by VentureBeat: the future isn't one giant AI, but a collection of efficient specialists. The availability of GGUF versions for local inference, like one from user mradermacher, empowers builders to create task-specific agents that are both powerful and practical.

The Final Frontier: Agents Learn to See and Click

Teaching an AI to reliably operate a graphical user interface (GUI) has long been a holy grail of agent development. Now, the tooling is finally catching up to the ambition. Hugging Face introduced ScreenSuite, a comprehensive evaluation suite designed to benchmark an agent's ability to navigate and perform tasks in real-world applications. It’s a vital tool for moving beyond theory and into reproducible science for GUI agents. Paired with ScreenEnv, a new framework for deploying full-stack desktop agents, developers now have an end-to-end toolkit for building and testing agents that can actually use a computer like a human.

This practical tooling is being built on a foundation of serious academic inquiry. A new collection of papers (Source: Paper) highlights the deep challenges researchers are tackling, from visual grounding to long-horizon planning. The combination of rigorous research and practical deployment tools signals a field that is rapidly maturing, moving from fragile demos to systems with the potential for real-world reliability.

A Different Philosophy: 'smolagents' Gets Big Upgrades

While some frameworks pile on features, the minimalist smolagents framework is gaining traction by sticking to a simple, powerful idea: agents should generate actions as code. This code-first approach is now getting a major sensory upgrade, with a recent update from Hugging Face adding Vision Language Model (VLM) support. Suddenly, these lightweight agents can see and process images, dramatically expanding their use cases.

This new multimodal capability builds on the framework's core philosophy of simplicity, as outlined in the original smolagents post. While it may not have the sprawling, built-in features of complex systems like AutoGen, its appeal lies in its directness and flexibility. To address the 'black box' problem of agent execution, smolagents is also integrating with MLOps tools like Arize Phoenix, as detailed in a recent blog post, to give developers crucial visibility into the agent's decision-making process. It’s a compelling package for developers who believe the best agent is one you can easily read and debug.
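The "actions as code" idea can be sketched in a few lines of plain Python. This is not the smolagents API: the tool functions, the hard-coded `generated_action` string (standing in for model output), and the `run_action` helper are all hypothetical. The point is only that the agent's step is an ordinary snippet you can read, log, and debug.

```python
def search_docs(query: str) -> str:
    """Hypothetical tool: pretend to search documentation."""
    return f"3 results for '{query}'"


def word_count(text: str) -> int:
    """Hypothetical tool: count whitespace-separated words."""
    return len(text.split())


TOOLS = {"search_docs": search_docs, "word_count": word_count}

# Stand-in for what a model might emit as its next action.
generated_action = """
hits = search_docs("git rebase")
result = word_count(hits)
"""


def run_action(code: str, tools: dict):
    """Execute a generated snippet with only the listed tools in scope.

    Emptying __builtins__ limits what the snippet can name directly,
    but it is NOT a real security sandbox.
    """
    namespace = {"__builtins__": {}, **tools}
    exec(code, namespace)
    return namespace.get("result")


answer = run_action(generated_action, TOOLS)
print(answer)
```

Because the action is code, one step can chain several tool calls and keep intermediate values in variables, which is harder to express in a single JSON tool call. A production system would need proper isolation before running model-written code.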

If You Can't Measure It, You Can't Improve It: Benchmarks Get Tougher

As agents get smarter, the tests have to get harder. The community is moving beyond simple accuracy scores to create benchmarks that probe for genuine reasoning and strategy. Hugging Face has raised the bar with GAIA 2 and the Agent Research Environment (ARE), a new benchmark for real-world reasoning. The original GAIA was tough enough that it took humans an average of over 20 hours to complete. GAIA 2 is designed to be a brutal but fair test of an agent's ability to synthesize information and use tools effectively.

Alongside these general intelligence tests, specialized benchmarks are emerging. DABStep (Source: Blog) was introduced to specifically measure an agent's ability to perform multi-step reasoning over structured data—a critical skill for any data analysis bot. For a more adversarial challenge, a new AI vs. AI system uses deep reinforcement learning to create a competitive environment where agents must develop strategic skills to win. This evolution toward more diverse, challenging, and even combative evaluations is essential for pushing agents beyond parroting information and toward true problem-solving.

From Theory to Reality: The Community Shows What's Possible

The ultimate test of any new technology is what people actually build with it. A quick look at the trending Hugging Face Spaces shows a vibrant community turning agent concepts into practical reality. These aren't just toys; they are tangible examples of what is now possible, serving as both inspiration and open-source starting points for other developers.

Trending demos include a Virtual Data Analyst by nolanzandi that allows for interactive data exploration, a personal news agent by fdaudens, and the ambitious osw-studio (Source: Space), an open-source studio for building web agents. For newcomers, the official Agents Course provides templates like the First agent space to lower the barrier to entry. This explosion of community-built projects is the clearest sign that the age of agents is no longer just coming—it's here.