Llama 3.1's Tool Use Reality Check

Meta's Llama 3.1 arrives with massive tool use claims, but practitioners are discovering the reality is far more complex.

AgentBrief Combined for Dec 11, 2025

X Wire

What if your agents could communicate telepathically? New research shows they can—and it's 4x faster.

This week, the conversation shifted from what agents can do to how they think and collaborate. A groundbreaking paper reveals that multi-agent systems perform dramatically better when they bypass human language entirely, communicating through internal, non-textual vectors. This move towards a 'telepathic' latent space represents a fundamental shift in cognitive architecture, promising unprecedented speed and efficiency.

Following this theme of internal cognition, a new open-source model, MiniMax M2, introduces 'Interleaved Thinking'—a novel technique for agents to continuously reflect and adapt their plans mid-task. Together, these developments signal a new frontier for builders. We're moving beyond prompting simple chatbots and starting to engineer complex, modular minds with sophisticated inner worlds. Below, we explore what this means for you, the tools emerging to support it, and the new skills you'll need.

Agents Are Ditching Text for Telepathy

A groundbreaking paper from researchers at Stanford, Princeton, and the University of Illinois is redefining multi-agent collaboration by moving beyond human-readable text. The study, highlighted by @rohanpaul_ai, reveals that agent ensembles can collaborate more effectively by exchanging hidden vectors—their internal numerical representations—directly with one another. This method sidesteps the computationally expensive process of generating and parsing text, achieving a remarkable 4x speedup in inference with 70-80% fewer output tokens compared to text-based systems. This paradigm shift to 'telepathic' collaboration via latent space is a major leap in efficiency, with some reports citing an 80% cost reduction @rohit4verse.

While the potential is enormous, practical challenges remain. Experts like @TheGlobalMinima note that developing and debugging these systems will require new abstractions for managing latent spaces. There are also risks of 'latent congestion,' where too many agents interacting in the same substrate could blur roles and intent, a critical area for future research @tallmetommy. The next step is to build scalable architectures that can manage this high-dimensional communication without interference, allowing collaboration to emerge as an internal geometry within models rather than a rigid protocol @sharadbachani.

For agent builders, this signals a move toward deeply integrated cognitive architectures—more like modular brains than siloed chatbots. This vision, shared by @adityabhatia89, involves agents with distinct nodes for perception, planning, and action that communicate via a shared, non-linguistic medium. By solving the 'text bottleneck,' latent space communication enhances semantic density and cuts computational waste, unlocking unprecedented power for complex, distributed agentic systems @YoussefHosni951.
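To make the "text bottleneck" concrete, here is a toy sketch. It is not the paper's method. It compares two channels between agents: decoding a hidden vector to the nearest token in a tiny vocabulary and re-embedding it, versus passing the vector itself. The four-word vocabulary, the 4-dimensional vectors, and the sender state are all hypothetical. Real systems exchange high-dimensional hidden states between LLMs.

```python
import math

# Hypothetical 4-dimensional token embeddings for a tiny vocabulary (illustrative only).
VOCAB = {
    "search": [1.0, 0.0, 0.0, 0.0],
    "summarize": [0.0, 1.0, 0.0, 0.0],
    "verify": [0.0, 0.0, 1.0, 0.0],
    "stop": [0.0, 0.0, 0.0, 1.0],
}

def distance(a: list[float], b: list[float]) -> float:
    """Euclidean distance between two vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def send_as_text(hidden: list[float]) -> list[float]:
    """Text channel: collapse the hidden state to its nearest token, then re-embed it."""
    token = min(VOCAB, key=lambda t: distance(VOCAB[t], hidden))
    return VOCAB[token]

def send_as_latent(hidden: list[float]) -> list[float]:
    """Latent channel: hand the receiving agent the hidden state itself."""
    return list(hidden)

# A sender state that blends two intentions ("search" and "verify").
sender_state = [0.6, 0.1, 0.5, 0.0]

text_error = distance(sender_state, send_as_text(sender_state))
latent_error = distance(sender_state, send_as_latent(sender_state))
print(f"text channel error:   {text_error:.3f}")
print(f"latent channel error: {latent_error:.3f}")
```

The text channel keeps only the dominant intention ("search") and discards the "verify" component. The latent channel preserves both. In practice, models with different internal spaces also need some alignment step before one can read the other's vectors. That is part of the abstraction problem the experts above point to.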

MiniMax M2 Debuts 'Interleaved Thinking' for Advanced Agent Reasoning

A new open-source model from Chinese lab MiniMax is gaining traction for its innovative approach to agent workflows. As highlighted by @rohanpaul_ai, the MiniMax M2 model features 'Interleaved Thinking,' a technique where the agent saves and updates its plan within the chat history after each action. This creates a persistent reasoning state, allowing the model to stay on track, self-correct, and reliably complete complex, multi-step tasks. By re-evaluating its strategy dynamically based on tool outputs, it gains a significant edge in tool-heavy workloads, with some benchmarks showing it topping SWE-bench at roughly one-twelfth of Claude's cost @dlimeng192048.

This approach stands apart from other reasoning techniques. While Chain of Thought (CoT) generates a static plan upfront @ShunyuYao12 and Tree of Thoughts (ToT) explores multiple paths in a deliberate search @johnjnay, Interleaved Thinking integrates a continuous think-act-reflect loop within a single interaction. It shares similarities with ReAct, but its persistent state management in the chat history offers a unique advantage for maintaining context over long interactions, making it highly effective for dynamic tasks like web browsing and coding @SkylerMiao7.
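Below is a minimal sketch of the think-act-reflect loop, assuming a scripted stand-in for the model (`scripted_model`) and two hypothetical tools (`run_tests`, `apply_patch`). It is not MiniMax's implementation. What it illustrates is that each turn's plan or reflection is appended to the shared chat history instead of being discarded, so every later turn plans from the updated state.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Step:
    thinking: str                # the model's plan or reflection for this turn
    tool: Optional[str] = None   # tool to call next, or None when the task is done
    argument: str = ""

patched_files: set[str] = set()  # state for the hypothetical tools below

def run_tests(path: str) -> str:
    """Hypothetical tool: tests fail until utils.py has been patched."""
    return "PASS" if "utils.py" in patched_files else f"FAIL: test_utils in {path}"

def apply_patch(path: str) -> str:
    """Hypothetical tool: record that a file was patched."""
    patched_files.add(path)
    return f"patched {path}"

TOOLS = {"run_tests": run_tests, "apply_patch": apply_patch}

def scripted_model(history: list[dict]) -> Step:
    """Stand-in for the LLM: decides the next step by reading the latest tool result."""
    results = [m["content"] for m in history if m["role"] == "tool"]
    if not results:
        return Step("Plan: run the tests to find what is broken.", "run_tests", "tests/")
    last = results[-1]
    if last.startswith("FAIL"):
        return Step("Reflect: tests failed in utils; new plan is to patch utils.py, then re-run.",
                    "apply_patch", "utils.py")
    if last.startswith("patched"):
        return Step("Plan: patch applied; re-run the tests to confirm.", "run_tests", "tests/")
    return Step("Reflect: tests pass, so the task is complete.")

def interleaved_loop(task: str, max_steps: int = 10) -> list[dict]:
    history = [{"role": "user", "content": task}]
    for _ in range(max_steps):
        step = scripted_model(history)
        # Keep each turn's reasoning in the history instead of stripping it,
        # so the next turn plans from the updated state.
        history.append({"role": "assistant", "content": step.thinking})
        if step.tool is None:
            break
        history.append({"role": "tool", "content": TOOLS[step.tool](step.argument)})
    return history

history = interleaved_loop("Fix the failing test in utils.py")
for message in history:
    print(f"{message['role']:>9}: {message['content']}")
```

The transcript alternates reasoning and tool results. The revised plan after the failed test run stays visible to every later turn, which is the persistence the section attributes to Interleaved Thinking.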

Unlike models that use Mixture of Attention for efficiency, MiniMax M2 uses full attention to ensure higher reliability in agent, code, and math workloads, preventing the loss of critical details @rohanpaul_ai. However, some users have reported infrastructure challenges impacting performance, suggesting its success depends on proper implementation @TheAhmadOsman. For developers, MiniMax M2 represents a promising new architectural pattern for agentic planning and self-correction that could set a new standard for production-ready reasoning.

LangChain Agents Gain Programmatic Tool Calling

LangChain has rolled out Programmatic Tool Calling (PTC), a powerful feature that elevates how agents execute complex operations. As shared by CEO Harrison Chase @hwchase17, PTC allows agents to execute tools through code rather than discrete function calls. This enables sophisticated workflows, like iterating over a list and calling a tool for each item in a single step. For builders, this is a significant leap, simplifying the creation of agents that can handle real-world, multi-step tasks that require loops and conditional logic, aligning with the enterprise need for AI that deeply integrates with specific workflows @levie.
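To illustrate the idea in plain Python (this is not LangChain's API), the sketch below has the "agent" emit one short program rather than one discrete tool call per item. A host then executes that program with the tools injected. The tool `get_stock_level`, its inventory data, and the model-written snippet are all hypothetical. `exec` with stripped builtins stands in for what would need to be a properly sandboxed runtime in production.

```python
from typing import Callable

# Hypothetical inventory behind the tool the agent is allowed to call.
INVENTORY = {"widget": 12, "gadget": 0, "gizmo": 3}

def get_stock_level(sku: str) -> int:
    """Hypothetical tool: return the stock count for a SKU."""
    return INVENTORY.get(sku, 0)

# With discrete tool calling this would take one model round-trip per SKU;
# here the model writes a single program that loops over all of them.
MODEL_WRITTEN_CODE = """
out_of_stock = [sku for sku in skus if get_stock_level(sku) == 0]
low_stock = {sku: get_stock_level(sku) for sku in skus if 0 < get_stock_level(sku) < 5}
"""

def run_programmatic_call(code: str, tools: dict[str, Callable], inputs: dict) -> dict:
    """Execute model-written code with only the given tools and inputs in scope.

    NOTE: exec() is not a security boundary; a real system needs an isolated sandbox.
    """
    scope = {"__builtins__": {}, **tools, **inputs}
    exec(code, scope)
    # Return only the variables the program created.
    return {k: v for k, v in scope.items()
            if k not in tools and k not in inputs and k != "__builtins__"}

result = run_programmatic_call(
    MODEL_WRITTEN_CODE,
    tools={"get_stock_level": get_stock_level},
    inputs={"skus": ["widget", "gadget", "gizmo"]},
)
print(result)
```

The loop and the conditional logic run inside one execution step. The model therefore sees only the final summary rather than every intermediate tool result.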

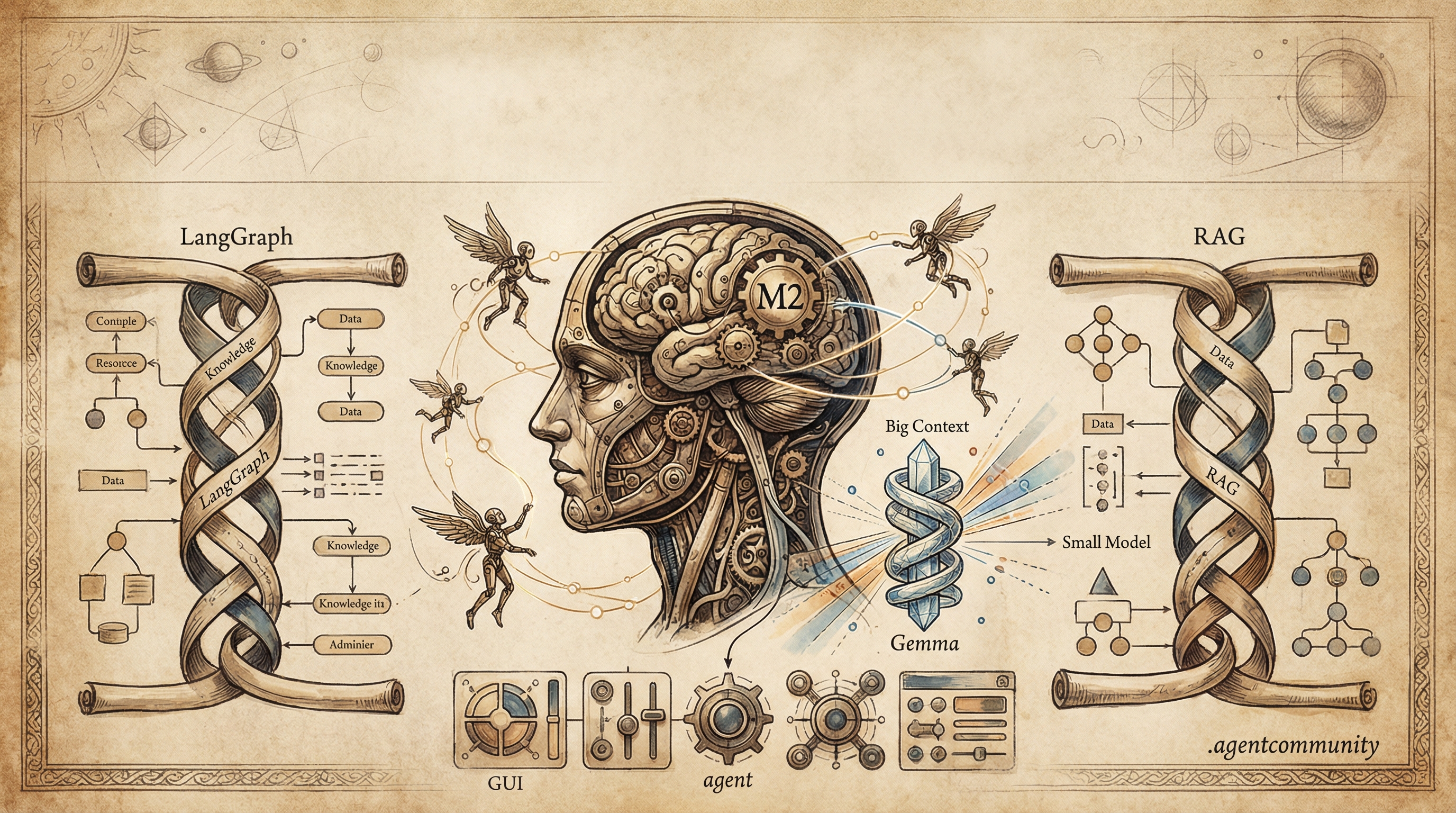

LangGraph and DeepLearning.AI Partner on Advanced Agent Courses

The agent development ecosystem is maturing with new, structured educational initiatives. LangChain and Andrew Ng's DeepLearning.AI have launched a course, 'AI Agents in LangGraph,' focused on building controllable, production-ready agents @LangChainAI. The curriculum covers critical concepts like persistence, agentic search, and human-in-the-loop workflows. A follow-up course, 'Long-term Agentic Memory with LangGraph,' teaches developers how to implement semantic, episodic, and procedural memory, enabling stateful assistants that learn from user interactions @LangChainAI. These efforts signal a clear trend towards professionalizing agent development and accelerating the adoption of advanced architectures.

Is 'Context Engineering' the Next Core Skill for Builders?

As agents tackle long-horizon problems, simply stuffing more data into the context window is proving ineffective. Researcher @omarsar0 argues that 'context engineering'—the strategic curation of information to enhance agent reasoning—will be a pivotal skill by 2026. This emerging discipline focuses on developing smarter techniques for structuring and presenting data to help agents maintain focus and coherence over extended tasks. For builders, this means shifting from managing context size to mastering context quality, a key step in building agents that can reliably handle complexity in demanding domains like science and engineering @omarsar0.
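One simple way to picture "context quality over context size" is budgeted selection. Candidate snippets are scored for relevance, irrelevant ones are dropped, and the best ones are packed into a fixed token budget. The sketch below makes two assumptions purely for illustration. It uses a crude keyword-overlap score and counts whitespace-separated words as "tokens." Real systems would use embeddings or a reranker and the model's own tokenizer.

```python
def relevance(query: str, snippet: str) -> float:
    """Fraction of query words that appear in the snippet (crude stand-in for a reranker)."""
    q = set(query.lower().split())
    s = set(snippet.lower().split())
    return len(q & s) / len(q) if q else 0.0

def token_count(text: str) -> int:
    """Whitespace word count as a rough stand-in for a real tokenizer."""
    return len(text.split())

def build_context(query: str, snippets: list[str], budget: int) -> list[str]:
    """Greedily keep the most relevant snippets that fit within the token budget."""
    ranked = sorted(snippets, key=lambda s: relevance(query, s), reverse=True)
    chosen, used = [], 0
    for snippet in ranked:
        if relevance(query, snippet) == 0:
            break  # drop irrelevant material instead of padding the window
        cost = token_count(snippet)
        if used + cost <= budget:
            chosen.append(snippet)
            used += cost
    return chosen

QUERY = "why did the yield drop at higher temperature"
SNIPPETS = [
    "experiment log: the reaction yield dropped after raising temperature",
    "office lunch menu for friday",
    "protocol: keep temperature below 60C to protect yield",
    "yield table for all prior runs " + "row " * 50,  # relevant-ish but very long
]
selected = build_context(QUERY, SNIPPETS, budget=20)
print(selected)
```

The long table is skipped because it would exceed the budget, and the lunch menu is skipped because it is irrelevant. What reaches the model is short and on-topic.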

Bytedance's Vidi2 Uses Agents to Automate Video Editing

Bytedance has unveiled Vidi2, an AI video editor that acts as a domain-specific agent to autonomously transform raw footage into polished videos from a text prompt @deedydas. The system goes beyond simple generation; it constructs a script and manages the entire creative workflow. Its advanced video comprehension, which reportedly outperforms models like Gemini 3 Pro, uses spatio-temporal grounding to identify actions and objects across long timelines. This allows Vidi2 to reason about events and automate complex editing tasks, showcasing a powerful real-world application of agentic principles in a creative domain @rohanpaul_ai.

Quick Hits

Agent Frameworks & Tooling

- Open-source agent framework OpenCode adds an 'Explore' subagent for file system navigation, grepping, and globbing. — @thdxr

- A new project provides AI agents for executing structured brainstorming methods like SCAMPER and Six Thinking Hats. — @tom_doerr

- LlamaIndex founder shares a tutorial on using LlamaExtract to pull large structured tables from documents where naive LLM extraction fails. — @jerryjliu0

- VibeLogger is a new proxy to log interactions with Codex, Claude Code, and Gemini CLI to Arize Phoenix for better observability. — @QuixiAI

Memory & Context

- New project Agi-Memory combines vector, graph, and relational DBs to emulate episodic, semantic, procedural, and strategic memory. — @QuixiAI

- A useful thread explains Microsoft's GraphRAG as a fundamental shift in data indexing for holistic reasoning, not just a vector DB replacement. — @techNmak

- For high-dimensional embeddings, Supabase's `halfvec` type can now be used to create indexes for vectors with over 2,000 dimensions. — @supabase
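For reference, pgvector (the Postgres extension behind Supabase's vector support) documents half-precision indexing. The embedding column is cast to `halfvec` inside an HNSW index expression, and `halfvec` can be indexed at up to 4,000 dimensions versus 2,000 for `vector`. The Python helper below only builds that SQL string. The table and column names are hypothetical and assumed to be trusted identifiers, and you would run the statement with your own Postgres client.

```python
# Operator classes pgvector provides for indexing halfvec values, by distance metric.
HALFVEC_OPS = {
    "l2": "halfvec_l2_ops",
    "inner_product": "halfvec_ip_ops",
    "cosine": "halfvec_cosine_ops",
}

MAX_HALFVEC_INDEX_DIMS = 4000  # pgvector's documented indexing limit for halfvec

def halfvec_index_sql(table: str, column: str, dims: int, metric: str = "cosine") -> str:
    """Build an HNSW index that stores `column` at half precision via a cast.

    `table` and `column` are interpolated directly, so they must be trusted identifiers.
    """
    if dims > MAX_HALFVEC_INDEX_DIMS:
        raise ValueError(f"halfvec indexes support at most {MAX_HALFVEC_INDEX_DIMS} dimensions")
    ops = HALFVEC_OPS[metric]
    return f"CREATE INDEX ON {table} USING hnsw (({column}::halfvec({dims})) {ops});"

# Example: 3072-dimensional embeddings, too large to index as plain `vector`.
print(halfvec_index_sql("documents", "embedding", 3072))
```

Because this is an expression index, queries must use the same cast (for example, ordering by `embedding::halfvec(3072) <=> ...`) for Postgres to use it.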

Models & Research

- A new paper on DeepSeekMath-V2 shows correct answers don't imply correct reasoning, a crucial insight for evaluating model reliability. — @omarsar0

- In a SnakeBench evaluation, Gemini 3 Pro appeared to intentionally trap Opus 4.5, suggesting a sophisticated level of strategic planning. — @GregKamradt

- Research finds that fixed prompts in LLM benchmarks systematically underestimate performance, with rankings flipping on 3 of 7 benchmarks when prompts are improved. — @dair_ai

- New research shows that even with strong prompts and feedback, top models only solve the 8-puzzle in ~68% of cases, revealing limits in planning. — @rohanpaul_ai

The Builder's Mindset

- OpenCode's creator argues coding agents must be open source, as closed-source 'trust us' approaches can't beat a million collaborating developers. — @thdxr

- A timely reminder: code without automated tests is effectively broken, a problem magnified by the rise of AI-generated code. — @svpino

- Naval Ravikant on the future of interaction design: "UI is pre-AI." — @naval

Reddit Intel

NVIDIA's new 8B model claims to outperform GPT-5 on agentic tasks, signaling a shift towards smaller, specialized orchestrators.

This week's big story is a potential shift in agent architecture. NVIDIA's release of Orchestrator-8B, a small model claiming to beat GPT-5 on a complex agentic benchmark, suggests a move away from monolithic giants toward smaller, specialized models that conduct a team of experts. This isn't just a new model; it's a validation of a different, more efficient design pattern. This theme of deconstruction for better performance echoes in the RAG community, where builders are adopting a three-pipeline approach—Ingestion, Retrieval, Synthesis—to build more robust systems. While these architectural patterns advance, the practical 'last mile' problems remain a major bottleneck, with many developers still struggling to get agents to reliably interact with the live web. For the local development community, it's a story of two opposing forces: foundational tools like llama.cpp are getting significant performance boosts, but the hardware to run them on is becoming prohibitively expensive due to skyrocketing RAM prices. This tension underscores the growing importance of efficient models and architectures, making releases like Orchestrator-8B not just interesting, but necessary.

NVIDIA's Orchestrator-8B Claims Lead in Agentic Benchmark, Outperforming GPT-5

NVIDIA has released Orchestrator-8B, an 8-billion-parameter model designed specifically for orchestrating agentic tasks, and claims it outperforms GPT-5 on the Humanity's Last Exam (HLE) benchmark. According to the model card on Hugging Face, Orchestrator-8B scores 37.1% on HLE, a benchmark of difficult, expert-level questions spanning many academic subjects, surpassing GPT-5's reported score of 35.1% u/jacek2023. The model is also reportedly about 2.5x more efficient on the benchmark u/jacek2023. Further details are available in NVIDIA's announcement NVIDIA Developer Blog.

Unlike general-purpose models, Orchestrator-8B is designed to act as a conductor, coordinating a diverse set of expert models and tools to solve problems. This architectural approach is gaining traction as a more efficient way to build highly capable agents. The r/LocalLLaMA community has been quick to react, with GGUF versions already available for local experimentation. Some users are questioning the validity of comparing Orchestrator-8B directly to GPT-5, given that GPT-5 is a closed-weight model and the two evaluation setups may not be directly comparable r/LocalLLaMA discussion. Others are excited about the potential for more efficient agent creation r/MachineLearning discussion. The official NVIDIA blog post details the architecture and intended use cases, emphasizing its role in building complex AI agents NVIDIA Developer Blog.

For agent builders, this release is significant. It suggests that smaller, specialized orchestration models can be more effective and efficient than larger, monolithic models for complex agentic reasoning. This could open up new possibilities for building powerful, cost-effective agents that can run on more accessible hardware. NVIDIA is positioning Orchestrator as a key component in building future AI systems NVIDIA Developer Blog.
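The "conductor" pattern can be sketched as a router that sends each step to the cheapest expert or tool able to handle it. A large general model is used only as the fallback. Everything here is a hypothetical stand-in, including the routing rules, expert names, stub outputs, and per-call costs. A trained orchestrator model would make these decisions itself rather than follow hand-written rules like these.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class Expert:
    name: str
    cost: float                          # hypothetical cost per call, arbitrary units
    can_handle: Callable[[str], bool]    # does this expert accept the task?
    run: Callable[[str], str]

def add_numbers(task: str) -> str:
    """Hypothetical calculator tool: "sum: 2, 3, 5" -> "10"."""
    return str(sum(int(x) for x in task.removeprefix("sum:").split(",")))

EXPERTS = [
    Expert("calculator", 0.001, lambda t: t.startswith("sum:"), add_numbers),
    Expert("search_tool", 0.01, lambda t: t.startswith("search:"),
           lambda t: f"[stub results for {t.removeprefix('search:').strip()!r}]"),
    Expert("frontier_llm", 1.0, lambda t: True, lambda t: "[stub answer from a large model]"),
]

def route(task: str, experts: list[Expert]) -> Expert:
    """Pick the cheapest expert that says it can handle the task."""
    return min((e for e in experts if e.can_handle(task)), key=lambda e: e.cost)

def orchestrate(tasks: list[str], experts: list[Expert]) -> tuple[list[str], float]:
    """Route each task, run it, and accumulate the total cost."""
    outputs, total_cost = [], 0.0
    for task in tasks:
        expert = route(task, experts)
        outputs.append(f"{expert.name}: {expert.run(task)}")
        total_cost += expert.cost
    return outputs, total_cost

outputs, total_cost = orchestrate(
    ["sum: 2, 3, 5", "search: HLE leaderboard", "draft a proof sketch"], EXPERTS)
for line in outputs:
    print(line)
print(f"total cost: {total_cost:.3f}")
```

Only the open-ended task reaches the expensive model. The efficiency claim for small orchestrators rests on the same principle.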

Rethinking RAG: Deconstructing the Three-Pipeline Architecture

A highly-upvoted post on r/Rag is challenging developers to rethink their approach to Retrieval-Augmented Generation. User u/Inferace argues that treating RAG as a monolithic architecture is a mistake, proposing instead that it should be viewed as three distinct pipelines: Ingestion, Retrieval, and Synthesis. The author claims that many common RAG problems arise from "treating them as one blob." This viewpoint aligns with the broader trend of modularizing AI systems for better control and observability, as highlighted in a recent blog post from Vectara.

According to this model, the Ingestion Pipeline is the foundation, handling document parsing, chunking, and metadata tagging. The Retrieval Pipeline focuses on query processing and fetching relevant documents. Finally, the Synthesis Pipeline is where the LLM generates an answer based on the retrieved context. This perspective is reinforced by u/ChapterEquivalent188, who concluded after two years of building local RAG systems that "Ingestion is the bottleneck, not the Model." LlamaIndex documentation also emphasizes the importance of a well-designed data ingestion process, noting that it can impact retrieval performance by as much as 20-30% LlamaIndex Documentation. This framework provides a clearer mental model for debugging and optimization. It allows builders to apply targeted strategies to challenges like validating retrieval quality u/Electrical-Signal858 and classifying the diverse errors that can occur at each stage u/Dear-Success-1441. By isolating the pipelines, practitioners can build more robust and maintainable RAG systems. Evaluation frameworks like Ragas offer metrics such as context precision and faithfulness to assess the quality of each pipeline, allowing for targeted improvements Ragas Documentation.
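Here is a skeletal sketch of the three-pipeline split, with each stage as a separate function so it can be tested and debugged on its own. Several parts are simplifying assumptions, not the setup from the post: the 8-word chunk size, the keyword-overlap retriever, and the `synthesize` step, which only assembles the grounded prompt rather than calling an LLM.

```python
from dataclasses import dataclass

@dataclass
class Chunk:
    doc_id: str
    text: str
    metadata: dict

# --- Ingestion pipeline: parse, chunk, and tag with metadata ---
def ingest(docs: dict[str, str], chunk_words: int = 8) -> list[Chunk]:
    chunks = []
    for doc_id, text in docs.items():
        words = text.split()
        for start in range(0, len(words), chunk_words):
            piece = " ".join(words[start:start + chunk_words])
            chunks.append(Chunk(doc_id, piece, {"position": start // chunk_words}))
    return chunks

# --- Retrieval pipeline: score chunks against the query and keep the top k ---
def retrieve(query: str, chunks: list[Chunk], k: int = 2) -> list[Chunk]:
    q = set(query.lower().split())
    scored = [(len(q & set(c.text.lower().split())), c) for c in chunks]
    scored = [(s, c) for s, c in scored if s > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [c for _, c in scored[:k]]

# --- Synthesis pipeline: build the grounded prompt that would go to the LLM ---
def synthesize(query: str, context: list[Chunk]) -> str:
    sources = "\n".join(f"[{c.doc_id}#{c.metadata['position']}] {c.text}" for c in context)
    return f"Answer using only these sources:\n{sources}\n\nQuestion: {query}"

docs = {
    "policy": "Refunds are issued within 14 days of purchase. Shipping fees are not refundable.",
    "faq": "Support is available on weekdays. Refunds require the original receipt.",
}
query = "how many days for refunds"
chunks = ingest(docs)
top = retrieve(query, chunks)
prompt = synthesize(query, top)
print(prompt)
```

Each stage has its own inputs and outputs, so a bad answer can be traced to the stage that failed. Inspect `chunks` for ingestion problems, `top` for retrieval problems, and `prompt` for synthesis issues.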

Agent Builders Grapple with Web Interaction Bottleneck

A common pain point is surfacing across multiple AI agent communities: getting agents to reliably interact with live websites is proving to be a major challenge. As user u/Reasonable-Egg6527 notes in a popular thread, "The reasoning is fine, the planning is fine, but the moment the agent touches a live browser environment everything becomes fragile." This aligns with broader discussions about the limitations of current AI agents in handling complex web tasks. For instance, a recent article on VentureBeat highlights the challenges of building AI agents that can reliably navigate the web, citing issues with dynamic content and anti-bot measures VentureBeat.

Developers are finding that tasks beyond simple API calls—such as handling logins, clicking through dynamic pages, submitting forms, or navigating dashboards—are where current tools fall short. Another user, u/MeltingAnchovy, highlights the specific difficulty of using agents on sites requiring a login, where even partial success can leave much of the site's functionality broken. The high engagement on these posts in r/AI_Agents and r/aiagents underscores how widespread this problem is. Some developers are exploring solutions like MultiOn and LaVague to address these issues, but these are still in early stages of development MultiOn LaVague.

This "last mile" problem of robust browser automation is a significant bottleneck preventing the creation of truly autonomous agents that can operate effectively on the modern web. While model intelligence for planning is advancing rapidly, the fragility of the agent-to-browser interface remains a key area in need of more durable solutions. As noted in a recent Towards Data Science article, reliable web interaction is crucial for unlocking the full potential of AI agents in various real-world applications Towards Data Science.
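A common mitigation for flaky browser steps is to wrap each action in a retry loop with backoff and a post-condition check, so the agent verifies that a click or form submission actually took effect. The sketch below is framework-agnostic. `flaky_submit` and its failure pattern are hypothetical stand-ins for a real browser-automation call. This only helps with transient failures; it does not solve logins or anti-bot measures.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")

class ActionFailed(Exception):
    """Raised when a browser action never reaches its expected post-condition."""

def with_retries(action: Callable[[], T], verify: Callable[[T], bool],
                 attempts: int = 3, base_delay: float = 0.5) -> T:
    """Run `action`, retrying with exponential backoff until `verify` accepts the result."""
    last_error: Optional[Exception] = None
    for attempt in range(attempts):
        try:
            result = action()
            if verify(result):
                return result
            last_error = ActionFailed(f"post-condition not met on attempt {attempt + 1}")
        except Exception as exc:  # e.g. element not found or a navigation timeout
            last_error = exc
        if attempt < attempts - 1:
            time.sleep(base_delay * 2 ** attempt)
    raise ActionFailed(f"gave up after {attempts} attempts") from last_error

attempt_log: list[str] = []

def flaky_submit() -> str:
    """Hypothetical form submission: times out once, fails to navigate once, then succeeds."""
    attempt_log.append("submit")
    if len(attempt_log) == 1:
        raise TimeoutError("submit button not clickable yet")
    if len(attempt_log) == 2:
        return "https://example.com/form"  # page did not advance
    return "https://example.com/form/success"

final_url = with_retries(flaky_submit, verify=lambda url: url.endswith("/success"), base_delay=0.01)
print(final_url, "after", len(attempt_log), "attempts")
```

The post-condition check matters as much as the retry. A submission that "succeeds" without navigating is treated as a failure rather than silently accepted.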

LocalAI and llama.cpp Receive Major Usability and Performance Upgrades

The ecosystem for running local LLMs and agents continues to mature, with key infrastructure tools receiving significant updates that improve usability and performance. The release of LocalAI 3.8.0 introduces a universal model loader, allowing developers to import models directly from Hugging Face, Ollama, or OCI registries, which removes a common workflow bottleneck. According to the author on r/LocalLLaMA, the update also adds support for MCP agent streaming and logprobs. The new loader should make it easier for developers to bring diverse models into LocalAI and experiment with different architectures. A user on Hugging Face has also showcased how easily models can be deployed with LocalAI.

At a lower level, the foundational llama.cpp project is getting faster thanks to optimizations in its CUDA backend. A technical write-up by u/am17an details recent work on kernel fusion. According to u/am17an, kernel fusion can result in a 20-40% speedup in token generation. The post also shared a practical tip: many single-GPU users can achieve slightly faster performance simply by enabling the GGML_CUDA_GRAPH_OPT=1 flag, which enables CUDA graph optimization. Some users in the comments reported seeing improvements of 10-15% with this flag enabled. This GitHub issue further discusses the performance benefits and potential issues related to GGML_CUDA_GRAPH_OPT.
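Kernel fusion is easiest to picture as loop fusion. Instead of launching one GPU kernel per operation and writing each intermediate tensor back to memory, a fused kernel performs several operations in a single pass. The CPU-side Python analogy below is not llama.cpp code. It fuses a scale, a bias add, and a ReLU, chosen purely for illustration. On a GPU, the saving comes from fewer kernel launches and less memory traffic, not from Python loop overhead.

```python
def unfused(xs: list[float], scale: float, bias: float) -> tuple[list[float], int]:
    """Three separate passes (think: three kernel launches), each writing a full intermediate."""
    scaled = [x * scale for x in xs]            # pass 1: writes len(xs) values
    shifted = [x + bias for x in scaled]        # pass 2: reads pass 1, writes len(xs) values
    activated = [max(0.0, x) for x in shifted]  # pass 3: reads pass 2, writes the output
    intermediate_writes = len(scaled) + len(shifted)
    return activated, intermediate_writes

def fused(xs: list[float], scale: float, bias: float) -> tuple[list[float], int]:
    """One pass (think: one kernel) doing scale, bias, and ReLU per element; no intermediates."""
    activated = [max(0.0, x * scale + bias) for x in xs]
    return activated, 0

xs = [-2.0, -0.5, 1.0, 3.0]
out_unfused, writes_unfused = unfused(xs, scale=2.0, bias=0.5)
out_fused, writes_fused = fused(xs, scale=2.0, bias=0.5)
print(out_unfused == out_fused, writes_unfused, writes_fused)
```

Both versions produce the same output. The fused one never materializes the two intermediate arrays, which is where the memory-bandwidth saving comes from.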

These software enhancements, combined with hardware developments like Intel releasing open-source Gaudi 3 drivers for Linux r/LocalLLM, show a clear trend toward a more accessible and powerful local AI development experience.

Local AI Builders Face Skyrocketing RAM Prices Amidst Broader Market Pressures

A viral post on r/LocalLLaMA has struck a nerve in the local AI community, highlighting the soaring cost of high-capacity RAM. User u/Hoppss reported that the price for 192GB of RAM jumped from about $650 USD to over $2,300 USD in just one month. The post, which garnered over 800 upvotes, is filled with comments from other builders feeling the financial strain of the AI hardware arms race. The surge aligns with broader trends in the DRAM market, where DDR5 prices have been climbing because of high demand and limited supply. TrendForce reports that DDR5 prices continue to rise, with a projected increase of 5-10% in Q1 2024, driven primarily by demand for server DRAM, which is crucial for AI and data center applications TrendForce.
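For scale, the approximate prices from the post work out to roughly a 3.5x jump, or about a 254% increase:

$$
\frac{\$2300}{\$650} \approx 3.54, \qquad
\frac{\$2300 - \$650}{\$650} \approx 2.54 \;(\approx 254\%), \qquad
\frac{\$650}{192\,\text{GB}} \approx \$3.39/\text{GB} \;\rightarrow\; \frac{\$2300}{192\,\text{GB}} \approx \$11.98/\text{GB}
$$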

This isn't seen as a temporary spike, with some in the discussion fearing that prices may not stabilize until 2027. The inflation directly impacts the accessibility of running larger and more capable models locally, as these often have steep VRAM and system RAM requirements. The rising cost of entry is a significant barrier for those looking to build and run high-performance agents on their own machines. Experts at Tom's Hardware predict that PC component prices, including RAM, will continue to climb in 2024 due to factors like increased manufacturing costs and geopolitical instability. While the exact timeline for price stabilization remains uncertain, the confluence of these factors suggests that local AI builders will need to adapt to a higher cost environment for the foreseeable future. Some users in r/hardware are discussing strategies to mitigate the impact, such as exploring alternative memory configurations or optimizing their models to reduce RAM usage.

Ministral 3 Speculation and Qwen3-Next Benchmarks Emerge

The open-source model landscape is abuzz with speculation about upcoming releases. References to a "Ministral 3" model have appeared in pull requests for the Hugging Face transformers library u/bratao and llama.cpp u/jacek2023, fueling anticipation for a new generation of Mistral AI's small Ministral models. While Mistral AI has not officially announced a 'Ministral 3' release, these pull requests suggest that the community is preparing for its arrival and expecting day-one support in key inference frameworks like vLLM u/pomponP2.

Meanwhile, the community is also actively benchmarking the Qwen3-Next-80B-A3B-Instruct-GGUF model, a mixture-of-experts model that activates only about 3B of its 80B parameters per token (the "A3B" in its name). Initial community tests are promising. A discussion on r/LocalLLaMA highlights its efficiency, with one user reporting speeds of 17 tokens/second using a Q4 quantization on a system with only 32GB of RAM and 10GB of VRAM. Another user, u/JustCallMeMaybe95, noted that the model seemed to perform well on smaller context windows but had some issues when exceeding 2048 tokens. These early benchmarks are positive indicators for builders looking to run large, capable models locally, although further testing is needed to confirm stability and performance across different use cases.

YC CEO Garry Tan's Critique Sparks MCP Development Surge

A recent comment from Y Combinator CEO Garry Tan that the Model Context Protocol (MCP) "barely works" has ignited a candid discussion on r/mcp about the protocol's maturity. The sentiment reflects a community grappling with a promising but imperfect technology, with some developers agreeing that the protocol can feel fragile and buggy in its current state. While the specific video or transcript of Garry Tan's comment is difficult to pinpoint, the reaction within the MCP community confirms its impact and the perceived challenges of the protocol.

This has led to debates about real-world adoption, with one user questioning whether there are still "more mcp developers than mcp users" u/Shigeno977. Despite these growing pains, the subreddit demonstrates a flurry of grassroots development. Builders are actively creating and open-sourcing tools to address the protocol's shortcomings, including an MCP server to solve "context amnesia" in long-running projects u/liquiduniverse2018 and an enterprise-grade gateway with Keycloak integration for better security u/iambuildin. This activity suggests a proactive effort to enhance MCP's usability and security, addressing some of the concerns implied by Tan's statement. The lack of official statements or blog posts from MCP proponents directly addressing Tan's comments suggests either a strategic silence or an ongoing effort to improve the protocol before making public assurances.

For practitioners, the takeaway is that while MCP may not be a plug-and-play standard for production systems today, it remains a focal point of innovation. The active community is building the necessary infrastructure that could pave the way for a future of truly interoperable AI agents. The open-source contributions highlight a collaborative approach to overcoming MCP's current limitations and realizing its potential for wider adoption. While concrete metrics on MCP usage are scarce, the developer activity on r/mcp indicates a dedicated, albeit nascent, ecosystem.

Discord Debates

Anthropic's latest model lands on Perplexity, while developers grapple with the rising costs—in both dollars and ethics—of building with powerful AI.

The biggest news for practitioners this week is the arrival of Anthropic's Opus 4.5 on Perplexity, giving Pro users a tantalizing trial of the new state-of-the-art model. While the access is exciting, it immediately surfaces the central tension for agent builders: the trade-off between managed platforms and direct control. Using a model through an intermediary like Perplexity offers unique RAG and system prompt benefits, but comes with opaque usage limits and potential performance differences.

This theme of control and its associated costs echoes across the ecosystem. In the world of AI-powered IDEs, Cursor users are developing sophisticated workflows to balance expensive, powerful models with cheaper ones to manage spiraling API fees. For those seeking ultimate control by running models locally, the barrier to entry is becoming physical, with the cost of high-capacity RAM kits skyrocketing. This push for local control isn't just about cost or privacy; it's also about capability, as the demand for 'abliterated,' uncensored models grows. Underpinning all this is the very nature of model behavior, highlighted by a fascinating effort to extract Claude's foundational 'soul specification,' reminding us how deeply embedded principles dictate an agent's every response.

Perplexity Pro Users Get Limited Trial of Anthropic's Opus 4.5

Perplexity is offering its Pro subscribers a trial run of Anthropic's Opus 4.5 model, as reported by users in the #general channel on their Discord server ssj102. The rollout appears to be staggered, with access granted to some users almost a day ahead of others ssj102. This trial allows Perplexity Pro users to test the capabilities of Anthropic's most powerful model within the Perplexity environment.

A key point of discussion revolves around usage limits for the Opus 4.5 trial. While officially labeled a "Trial," the specific terms remain somewhat vague ssj102. A user inspecting the service's backend discovered potential model-specific limits, showing claude45opus and claude45opusthinking capped at 6 ssj102. The timeframe for these limits (daily, per subscription, etc.) remains unconfirmed, leading to speculation among users. This limitation has sparked a broader debate about the benefits and drawbacks of accessing models through platforms like Perplexity. Some users argue that Perplexity's unique system prompts, Retrieval-Augmented Generation (RAG), and temperature settings could lead to different outputs compared to using the models natively. Separately, one user on X (formerly Twitter) reported better latency and speed when using Claude Opus through Perplexity than through the direct Claude API @maccaw.

While Perplexity has not officially announced details regarding the trial or usage limits on their blog or press releases, the user activity and discussions on their Discord channel and other social media platforms, such as X, confirm the availability of the Opus 4.5 model for Perplexity Pro subscribers. This early access provides valuable insights into the performance and potential of Anthropic's latest model within the Perplexity ecosystem.

Claude's Extracted 'Soul Specification' and its Linguistic Influence

A fascinating discussion in the Claude Discord revolves around the model's underlying principles and its noticeable effect on other models. One user described Opus 4.5's output as having such a strong "linguistic pull" that even Opus 4 can't resist adopting its style niston. This observation raises questions about how model personality is shaped and transmitted. The phenomenon of one model's style influencing another highlights the potential for emergent behaviors and stylistic convergence in large language models. This is consistent with research showing that fine-tuning can significantly alter a model's behavior DeepMind.

A potential explanation lies in what's being called the "soul specification," the foundational documents and principles that shape Claude's behavior and a core part of Anthropic's Constitutional AI approach niston. A community member summarized the situation as the community observing the "concrete wording for the first time, not just effects," thanks to methodical extraction techniques tkenaz. This isn't a leak of training data, but rather an elicitation of the model's core principles, which one user who worked with Anthropic claims are embedded from internal, non-public documents ophidianz. Anthropic's Constitutional AI approach involves training models to adhere to a set of principles, aiming for safer and more aligned AI Anthropic. According to Anthropic, Constitutional AI makes it possible to train a harmless assistant without human labels identifying harmful outputs, with a written constitution of principles guiding the learning process instead Anthropic - Aligning Language Models. For builders, this is a stark reminder of how deeply embedded system prompts and constitutional principles can define an agent's behavior and style.
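In the supervised phase of Constitutional AI, as described in Anthropic's paper, the model critiques its own draft against a principle and then revises it. The revised responses become fine-tuning data. The sketch below shows only the shape of that loop. It uses a scripted stand-in for the model (`toy_model`) and a single hypothetical principle. It is not Anthropic's code, and it omits the later reinforcement-learning-from-AI-feedback phase.

```python
from typing import Callable

Model = Callable[[str], str]

def critique_and_revise(model: Model, prompt: str, principles: list[str]) -> dict:
    """Draft a response, then critique and revise it once per principle.

    The (prompt, revised) pair is the kind of example used for supervised fine-tuning.
    """
    draft = model(f"Respond to: {prompt}")
    response = draft
    for principle in principles:
        critique = model(f"Critique this response using the principle '{principle}':\n{response}")
        response = model(f"Revise the response to address the critique.\n"
                         f"Critique: {critique}\nResponse: {response}")
    return {"prompt": prompt, "draft": draft, "revised": response}

def toy_model(instruction: str) -> str:
    """Scripted stand-in for an LLM, keyed on the instruction type."""
    if instruction.startswith("Respond to:"):
        return "The meeting is definitely on Tuesday."
    if instruction.startswith("Critique"):
        return "The response presents a guess as a certainty."
    if instruction.startswith("Revise"):
        return "I'm not certain, but the last update suggests Tuesday; please confirm with the organizer."
    return ""

PRINCIPLES = ["Acknowledge uncertainty instead of stating guesses as facts."]  # hypothetical
pair = critique_and_revise(toy_model, "When is the team meeting?", PRINCIPLES)
print(pair["revised"])
```

The principle never appears in the final answer. It shapes the revision, which is why its effects show up in the model's behavior long before anyone sees its wording.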

Cursor Users Grapple with Pricing and API Costs, Seek Cost-Saving Strategies

The economics of AI-powered developer tools are a hot topic in the Cursor Discord. Users are actively discussing the sustainability of Cursor's pricing model, with one user describing it as being "like uber where they arent profitable and burning investor money" mutiny.exe. The core issue is that Cursor acts as an intermediary, integrating expensive models from companies like OpenAI into its editor, and rising API costs are inevitably being passed on to users mutiny.exe. This mirrors broader concerns about the high costs associated with AI API usage. For example, usage-based pricing for models like GPT-4 can become expensive quickly, especially for complex tasks OpenAI pricing. This has led to discussions on optimizing API calls and exploring more cost-effective alternatives.

Faced with rising costs, developers are adopting clever cost-saving strategies. A popular technique involves using powerful models like Opus 4.1 to generate a detailed implementation plan, then switching to the more affordable "auto" mode (or a cheaper model) to execute the plan and write the code mutiny.exe. This workflow leverages the strengths of different models while managing expenses. The sentiment is that Cursor provides significant value if you possess coding skills and can effectively guide the AI, but it can be a wasteful expenditure if you're just "throwing $200 into ai prompts" for "dogshit code" mutiny.exe. Some users have also expressed interest in local alternatives to avoid API costs, although this requires significant computational resources LocalAI. Alternatives like Codeium also offer free tiers and competitive pricing Codeium pricing.
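The plan-with-a-strong-model, execute-with-a-cheap-model workflow can be estimated with simple arithmetic. All inputs below are hypothetical placeholders, not Cursor's or any provider's actual rates: the per-million-token prices, the token counts, and the number of execution steps. Substitute your own numbers.

```python
from dataclasses import dataclass

@dataclass
class PricedModel:
    name: str
    usd_per_million_input: float   # hypothetical placeholder price
    usd_per_million_output: float  # hypothetical placeholder price

    def cost(self, input_tokens: int, output_tokens: int) -> float:
        """Dollar cost of one call with the given token counts."""
        return (input_tokens * self.usd_per_million_input
                + output_tokens * self.usd_per_million_output) / 1_000_000

STRONG = PricedModel("strong-planner", 10.0, 50.0)
CHEAP = PricedModel("cheap-executor", 0.5, 2.5)

def session_cost(planner: PricedModel, executor: PricedModel,
                 plan_tokens: tuple[int, int] = (20_000, 2_000),
                 step_tokens: tuple[int, int] = (30_000, 3_000),
                 steps: int = 10) -> float:
    """One planning call plus `steps` execution calls (token counts are assumptions)."""
    return planner.cost(*plan_tokens) + steps * executor.cost(*step_tokens)

all_strong = session_cost(STRONG, STRONG)
plan_then_cheap = session_cost(STRONG, CHEAP)
print(f"strong model for everything:  ${all_strong:.2f}")
print(f"strong plan, cheap execution: ${plan_then_cheap:.2f}")
```

With these placeholders, most of the spend sits in execution, so moving that phase to the cheaper model cuts the total by roughly 9x. The saving assumes the cheap model can follow the plan; failed executions that need re-runs eat into it.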

The Soaring Cost of RAM Threatens Local LLM Development

For practitioners committed to running large models locally, a significant hardware bottleneck is emerging: the price of RAM. A post from the r/LocalLLaMA community, shared in the LocalLLM server, highlights a dramatic price surge. A user reported that two 96GB RAM kits that cost them $900 CAD in October now cost over $3,200 CAD just one month later TrentBot. This observation is supported by broader trends in the DRAM market, with some analysts predicting continued price increases throughout 2024 due to factors such as reduced production and increased demand for high-capacity modules in data centers and AI applications TrendForce. Specifically, TrendForce reports that DDR5 contract prices are expected to increase by 13-18% in 3Q24.

The user's post notes that this isn't expected to improve soon, with some speculating that prices won't stabilize until 2027 TrentBot. While a 2027 stabilization might be pessimistic, industry experts at Semiconductor Engineering point to the increasing complexity of DRAM manufacturing, especially with technologies like High Bandwidth Memory (HBM) used in AI accelerators, as a factor contributing to higher costs. They note that stacking DRAM for AI applications requires advanced packaging technologies and stringent quality control, adding to the overall expense. This drastic increase in cost presents a major barrier to entry for individuals and small teams looking to experiment with or deploy high-performance local models, potentially pushing more development back towards API-based solutions despite the desire for local control and privacy.

Developers Seek 'Abliterated' Uncensored Local Models

A recurring theme in the Ollama Discord is the search for uncensored, or "abliterated," local models. Practitioners are looking for models that can perform tasks without moralizing or refusing prompts. As one user puts it, "The whole point of having an abliterated model is that it won’t waste your time by forcing you to circumnavigate a task it considers improper" facetiousknave.

This demand has led to a community-driven effort to find and share these models. When a user asked about an uncensored version of Gemma 3:12b, another quickly provided a Hugging Face link to a "norm-preserved-biprojected-abliterated-GGUF" version itzpingcat. The same user shared a list of reliable sources for such models, including those from ArliAI and GrimJim, Heretic models, and huihui's models itzpingcat. "Abliterated" models are created by modifying existing open-source models to remove their refusal behavior. Uncensoring in general can involve fine-tuning on specific datasets, but abliteration works by directly editing the model's weights. The "norm-preserved" variant referenced in the shared link aims to minimize the impact on the model's overall performance while removing unwanted restrictions. According to a blog post on model ablation, these interventions range from targeted edits to more widespread removal of components LessWrong.
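To make the weight-editing idea concrete, here is a toy NumPy sketch of directional ablation as it is commonly described in public abliteration write-ups. It estimates a single direction as the difference between mean activations on two contrasting prompt sets, then projects that direction out of a matrix that writes into the residual stream. Everything runs on random arrays and no model is loaded. The function names are hypothetical, and the "norm-preserved" and "biprojected" refinements mentioned above involve additional steps that are not shown.

```python
import numpy as np

def difference_in_means_direction(acts_a: np.ndarray, acts_b: np.ndarray) -> np.ndarray:
    """Unit vector from the mean of acts_b to the mean of acts_a.

    acts_a, acts_b: (n_samples, d_model) activations collected at one layer.
    """
    d = acts_a.mean(axis=0) - acts_b.mean(axis=0)
    return d / np.linalg.norm(d)

def project_out(W: np.ndarray, r: np.ndarray) -> np.ndarray:
    """Remove the component along unit vector r from every column of W.

    W has shape (d_model, d_in); each column is a vector written into the
    d_model-dimensional residual stream. Computes W - r r^T W.
    """
    return W - np.outer(r, r @ W)

rng = np.random.default_rng(0)
acts_a = rng.normal(size=(32, 8)) + 2.0 * np.eye(8)[0]  # toy set shifted along axis 0
acts_b = rng.normal(size=(32, 8))
r = difference_in_means_direction(acts_a, acts_b)
W = rng.normal(size=(8, 5))
W_ablated = project_out(W, r)
print(np.abs(r @ W_ablated).max())  # ~0 up to floating-point error
```

After the edit, no column of the matrix can write anything along the chosen direction. That is the whole mechanism: it is cheap, requires no training, and leaves every other direction untouched, which is why variants focus on limiting side effects rather than on the projection itself.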

For agent builders, these models offer greater control and predictability, but also shift the burden of responsible and ethical implementation entirely onto the developer. The removal of guardrails also raises concerns about potential misuse, as these models could be used to generate harmful or unethical content VentureBeat. The trade-off between control and ethical considerations is a key point of discussion within the AI community, as developers grapple with the implications of creating uncensored language models.

Builders Tackle Multi-Agent Systems in n8n

In the n8n Discord, practitioners are pushing the boundaries of workflow automation by building complex, multi-agent systems. One user sought help building an "AI role based multi-agent temporal organization" to create a "hive of AI agents" dustin2954. This prompted another community member to suggest looking into CrewAI for a "really complicated multiple ai agent setup" 3xogsavage.

Discussions reveal the practical challenges of agentic workflows. One user reported their model was repeating prompts and consuming over 50,000 tokens per query, leading to escalating costs _metagod. Others shared technical solutions for common hurdles, such as using external databases or data tables to persist data between separate runs mookielian and rebuilding sub-workflows as callable branches within a main workflow to overcome template limitations daniel_italo_1994. These conversations highlight a clear trend: as builders move from simple automations to sophisticated agent swarms, the need for robust frameworks and clever architectural patterns is becoming paramount.
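A runaway loop like the 50,000-token case above can be caught with a simple per-run guard. The sketch below is a hypothetical, framework-independent version in Python (n8n workflows are built visually, so this is only the logic one might adapt, for example in a Code node). The limits are arbitrary defaults, not n8n settings.

```python
from dataclasses import dataclass, field

@dataclass
class RunGuard:
    """Hypothetical per-run guard: caps total tokens and flags repeated prompts."""
    max_tokens: int = 50_000
    max_repeats: int = 2
    used_tokens: int = 0
    seen: dict = field(default_factory=dict)

    def check(self, prompt: str, tokens: int) -> None:
        """Record one model call; raise if the run looks stuck or too expensive."""
        self.used_tokens += tokens
        if self.used_tokens > self.max_tokens:
            raise RuntimeError(f"token budget exceeded: {self.used_tokens} > {self.max_tokens}")
        key = " ".join(prompt.split())  # normalize whitespace so trivial variants match
        self.seen[key] = self.seen.get(key, 0) + 1
        if self.seen[key] > self.max_repeats:
            raise RuntimeError(f"prompt repeated {self.seen[key]} times; likely stuck in a loop")

guard = RunGuard(max_tokens=10_000, max_repeats=2)
guard.check("Summarize the ticket", 3_000)
guard.check("Summarize  the ticket", 3_000)  # same prompt after whitespace normalization
try:
    guard.check("Summarize the ticket", 1_000)
except RuntimeError as err:
    print(err)
```

Failing loudly on the third identical prompt is a deliberate choice: a stuck agent usually repeats itself long before it exhausts a generous token budget, so the repeat check tends to fire first and cheaply.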

Model & Data Drops

Hugging Face's new agent framework just beat a major benchmark, signaling a rapid maturation of the entire open-source agent stack.

This week marks a significant step forward for the open-source AI agent ecosystem. The narrative is shifting from simple API wrappers to a mature, integrated stack of powerful frameworks, specialized models, and rigorous evaluation tools. Leading the charge, Hugging Face released Transformers Agents 2.0 and promptly demonstrated its power by beating the difficult GAIA benchmark, a clear signal that open-source tooling is ready for serious, generalist agent development. This isn't an isolated event; the maturation is visible across the board. Lighter-weight frameworks like smolagents are rapidly expanding into vision and GUI automation, offering builders more versatile options. Simultaneously, the community is building the infrastructure needed for real progress, with a new wave of specialized benchmarks like DABStep and ScreenSuite designed to test critical reasoning and interaction skills. This move toward robust evaluation is complemented by a trend toward smaller, highly efficient models fine-tuned for specific tasks like CLI and tool use. For practitioners, this means more powerful, modular, and verifiable components are now available to build sophisticated agents. The open agent stack is finally coming into its own.

Hugging Face's Transformers Agents 2.0 and GAIA Benchmark Success

Hugging Face has significantly upgraded its agent-building capabilities with the release of Transformers Agents 2.0. As outlined in its detailed blog post Hugging Face, the new version gives developers a robust set of tools for creating LLM-powered agents, including improved tool integration, more flexible agent control loops, and better observability for complex applications. Initial community reactions have been positive, with many highlighting the improved ease of use and the potential for more sophisticated agent-based applications. The update aims to streamline development so that builders can focus on the agent's functionality rather than the underlying infrastructure.
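The "agent control loop" at the heart of frameworks like this one follows a familiar pattern: the model proposes an action, the runtime executes a tool, and the observation is fed back until the model produces an answer. The sketch below is a library-free illustration of that ReAct-style loop, not the Transformers Agents API. The `CALL`/`FINAL` text protocol and all names are hypothetical, and a scripted function stands in for the LLM so the loop runs offline.

```python
from typing import Callable, Dict, List

Model = Callable[[List[str]], str]  # takes the transcript, returns the next line
Tool = Callable[[str], str]         # takes a raw argument string, returns an observation

def run_agent(model: Model, tools: Dict[str, Tool], task: str, max_steps: int = 5) -> str:
    """Minimal ReAct-style loop using a hypothetical text protocol:
    the model replies 'CALL <tool> <arg>' or 'FINAL <answer>'."""
    transcript = [f"TASK {task}"]
    for _ in range(max_steps):
        reply = model(transcript).strip()
        transcript.append(reply)
        if reply.startswith("FINAL "):
            return reply[len("FINAL "):]
        if reply.startswith("CALL "):
            _, name, arg = (reply.split(" ", 2) + [""])[:3]
            tool = tools.get(name)
            observation = tool(arg) if tool else f"unknown tool {name!r}"
        else:
            observation = "malformed reply; use CALL or FINAL"
        transcript.append(f"OBSERVATION {observation}")
    raise RuntimeError("no final answer within max_steps")

def scripted_model(transcript: List[str]) -> str:
    """Stand-in for an LLM: call the tool once, then answer with its observation."""
    last = transcript[-1]
    if last.startswith("TASK"):
        return "CALL add 2 3"
    return "FINAL " + last.split(" ", 1)[1]

tools = {"add": lambda arg: str(sum(int(x) for x in arg.split()))}
print(run_agent(scripted_model, tools, "add 2 and 3"))  # prints 5
```

The `max_steps` cap is the important design choice: without it, a model that never emits a final answer would loop forever.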

Alongside the framework update, the team is also sharing new methodologies and results. A separate post details a new approach for training LLMs to reason with notebooks, dubbed Jupyter Agents, in which models use code execution and introspection within a Jupyter environment to solve problems. Demonstrating the power of its internal tooling, Hugging Face also announced that its Transformers Code Agent has beaten the notoriously difficult GAIA benchmark with a score of 63.9, a major step toward highly capable, generalist agents. This achievement positions Hugging Face as a leader in open-source agent technology and a potential challenger to established frameworks. Some users cite the tighter integration with the Hugging Face ecosystem as a key advantage, while others are eager to see how Transformers Agents 2.0 compares in performance and flexibility with LangChain LangChain and LlamaIndex LlamaIndex.
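What makes notebook-style reasoning useful is that state persists between cells, so a model can define a variable, inspect it, and build on it. The sketch below imitates that behavior with a shared namespace and captured output. It is not the Jupyter Agents implementation and does not use a real Jupyter kernel; the class and method names are hypothetical. Note that `exec` runs code with full privileges, so a real agent would need a sandbox.

```python
import contextlib
import io
import traceback

class NotebookSession:
    """Hypothetical stateful executor: variables persist across cells, like a notebook."""

    def __init__(self) -> None:
        self.namespace: dict = {}

    def run_cell(self, code: str) -> str:
        """Execute one cell and return its printed output, or the traceback on error."""
        buf = io.StringIO()
        try:
            with contextlib.redirect_stdout(buf):
                exec(code, self.namespace)
        except Exception:
            # Return the traceback as the observation so the model can self-correct.
            buf.write(traceback.format_exc())
        return buf.getvalue()

session = NotebookSession()
session.run_cell("x = 21")
print(session.run_cell("print(x * 2)"), end="")  # prints 42
```

Returning errors as text, rather than raising them, is what allows the model to introspect: a `NameError` becomes the next observation it can reason about.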

smolagents Framework Gains Traction with VLM, GUI Automation, and Monitoring

The smolagents framework is rapidly evolving from a minimal, code-based agent into a more versatile platform. Originally introduced by Hugging Face as a minimal agent that writes its actions in code, the project has seen a flurry of updates that expand its capabilities beyond code generation. A key new feature is the integration of Vision Language Models (VLMs), which lets smolagents process and understand images, as detailed in the post "We now support VLMs in smolagents!". This allows agents to, for example, understand visual interfaces and react accordingly.
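The "actions in code" idea means the model replies with a snippet to execute rather than a JSON tool call. The sketch below shows only the parsing half of that idea, assuming a reply format in which the action sits in a Markdown code fence. smolagents' actual prompt format and parser may differ, and the names here are hypothetical. The extracted snippet would then go to a sandboxed interpreter.

```python
import re
from typing import Optional

FENCE = "`" * 3  # built programmatically so this snippet can itself live inside a Markdown fence

# Assumption: the model wraps its action in a fenced block, optionally tagged py/python.
CODE_ACTION = re.compile(FENCE + r"(?:python|py)?[ \t]*\n(.*?)" + FENCE, re.DOTALL)

def extract_code_action(reply: str) -> Optional[str]:
    """Return the first fenced code block in a model reply, or None if there is none."""
    match = CODE_ACTION.search(reply)
    return match.group(1).strip() if match else None

reply = "Thought: multiply the two numbers.\n" + FENCE + "py\nresult = 6 * 7\nprint(result)\n" + FENCE
print(extract_code_action(reply))
```

Returning `None` instead of raising lets the agent loop treat a reply with no code as a formatting error and ask the model to retry.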

Beyond vision, the framework is expanding into GUI automation. The Smol2Operator blog post introduces a method for post-training agents for computer use, enabling them to interact with graphical interfaces and opening up possibilities for automating tasks within desktop applications. For developers who need better monitoring, smolagents now integrates with Arize Phoenix for tracing and evaluation, which helps debug complex agent behaviors Hugging Face. According to Arize AI, Phoenix helps users understand model predictions, feature importance, and data quality issues. These updates, combined with the framework's lightweight design, are making smolagents a compelling choice for builders. Several developers are exploring its potential in open-source projects and tutorials, including one example that combines smolagents with LangChain to generate code. The framework's rapid development cycle and focus on practical applications are attracting attention within the AI community.
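Tracing tools like Phoenix record each agent step as a span so that failures can be located after the fact. The sketch below is not Phoenix or OpenTelemetry code. It is a hypothetical in-memory tracer that shows the minimum a span record captures for each step: name, status, and duration.

```python
import functools
import time

TRACE: list = []  # hypothetical in-memory sink; a real setup would export spans to a collector

def traced(fn):
    """Record one span per call: function name, ok/error status, and wall-clock seconds."""
    @functools.wraps(fn)
    def wrapper(*args, **kwargs):
        start = time.perf_counter()
        status = "ok"
        try:
            return fn(*args, **kwargs)
        except Exception:
            status = "error"
            raise
        finally:
            TRACE.append({"name": fn.__name__, "status": status,
                          "seconds": time.perf_counter() - start})
    return wrapper

@traced
def search(query: str) -> str:
    return query.upper()

search("gaia")
print(TRACE[-1]["name"], TRACE[-1]["status"])  # prints: search ok
```

The `finally` block records the span whether the step succeeds or raises, which is exactly the case a tracer exists for.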

New Benchmarks Emerge to Evaluate Agent Reasoning, Foresight, and GUI Interaction

As agent capabilities grow, so does the need for robust evaluation. Several new benchmarks have been introduced to test agents on more complex and diverse tasks. DABStep is a new Data Agent Benchmark designed to evaluate an agent's ability to perform multi-step reasoning over structured data, a critical skill for data analysis tasks. Initial results indicate that even strong models like GPT-4 struggle with the complexities of DABStep, highlighting the need for further research in this area Hugging Face Blog. For a different kind of reasoning, FutureBench challenges agents to predict future events, testing their ability to synthesize information and make informed forecasts. The benchmark assesses models on their ability to predict events across diverse domains, requiring them to understand complex relationships and anticipate potential outcomes.

In the domain of user interface interaction, ScreenSuite has been released as a comprehensive evaluation suite specifically for GUI agents. It aims to provide a standardized way to measure how well agents can navigate and operate applications as a human would, with tasks ranging from simple button clicks to complex multi-step procedures. While concrete leaderboard results are still emerging for ScreenSuite and FutureBench, both benchmarks are gaining traction in the AI community as tools for evaluating and improving agent performance Hugging Face Blog. Specialized benchmarks like these help developers identify weaknesses and push the boundaries of what agents can do. They complement GAIA, which tests general AI assistants on real-world questions that require multi-step reasoning, web browsing, and tool use GAIA Benchmark.

Small, Specialized Models Master CLI and Tool Use: Benchmarks Emerge

The trend toward smaller, highly specialized models for tool use is accelerating with new releases from Distil-Labs. They have released Distil-gitara-v2, a model fine-tuned from Llama 3.2 specifically for using git and other command-line interface (CLI) tools. The models come in efficient 1B and 3B parameter sizes, which makes them suitable for local or resource-constrained environments (distil-labs, distil-labs). Initial benchmarks suggest the 3B version performs strongly on specific git tasks, demonstrating the effectiveness of fine-tuning for specialized domains, although direct comparisons with larger models like GPT-4 on the same tasks are not yet widely available. Either way, the appeal lies in reduced computational cost and latency for targeted applications philschmid.
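Whatever model produces the command, a CLI agent needs a guard rail between the model and the shell. In the sketch below, the model's tool call (its format is assumed here, not taken from Distil-gitara-v2's documentation) is turned into an argument list only if the git subcommand is on a hypothetical allowlist. The call is never passed through a shell. A real deployment would also vet flags, since some read-only subcommands accept options that write files.

```python
import shlex
from typing import Dict, List

# Hypothetical allowlist: read-only git subcommands an agent may run unattended.
ALLOWED_SUBCOMMANDS = {"status", "log", "diff", "branch", "show"}

def git_argv(call: Dict) -> List[str]:
    """Turn a parsed tool call like {"subcommand": "log", "args": ["--oneline"]}
    into an argv list, rejecting anything outside the allowlist."""
    sub = call.get("subcommand")
    args = call.get("args", [])
    if sub not in ALLOWED_SUBCOMMANDS:
        raise ValueError(f"subcommand not allowed: {sub!r}")
    if not isinstance(args, list) or not all(isinstance(a, str) for a in args):
        raise ValueError("args must be a list of strings")
    return ["git", sub, *args]

argv = git_argv({"subcommand": "log", "args": ["--oneline", "-n", "5"]})
# The list form would be handed to subprocess.run(argv) without shell=True.
print(shlex.join(argv))  # prints: git log --oneline -n 5
```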

For more general-purpose tool calling, suradev has released the toolcaller-bounty8b-v2 model. This 8B model is designed for robust function calling, a core component for any agent that needs to interact with external APIs or tools. The availability of these models, including GGUF versions for easy local inference, gives agent builders powerful off-the-shelf components for tool-augmented agents without relying on massive, general-purpose models. They trade some generality for efficiency and lower resource consumption, which makes them a compelling alternative to large LLMs for specific tool-use cases. The development aligns with a broader trend of 'small but mighty' models that excel in targeted domains Analytics India Magazine.
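Robust function calling also depends on what happens after the model replies: the call should be parsed and validated before anything runs. The sketch below assumes a common `{"name": ..., "arguments": {...}}` JSON shape, which is an assumption rather than toolcaller-bounty8b-v2's documented format, and checks it against a hypothetical tool registry.

```python
import json
from typing import Any, Dict, Tuple

# Hypothetical tool registry: tool name -> {parameter: required Python type}.
TOOL_SPECS: Dict[str, Dict[str, type]] = {
    "get_weather": {"city": str, "days": int},
}

def parse_tool_call(raw: str, specs: Dict[str, Dict[str, type]]) -> Tuple[str, Dict[str, Any]]:
    """Parse a JSON tool call and validate it against the spec before anything executes."""
    call = json.loads(raw)  # malformed JSON raises json.JSONDecodeError, a ValueError subclass
    if not isinstance(call, dict):
        raise ValueError("tool call must be a JSON object")
    name = call.get("name")
    if name not in specs:
        raise ValueError(f"unknown tool: {name!r}")
    args = call.get("arguments", {})
    if not isinstance(args, dict):
        raise ValueError("arguments must be a JSON object")
    spec = specs[name]
    missing, extra = spec.keys() - args.keys(), args.keys() - spec.keys()
    if missing or extra:
        raise ValueError(f"missing={sorted(missing)} extra={sorted(extra)}")
    for key, expected in spec.items():
        # Exact type check, so JSON true is not accepted where an int is expected.
        if type(args[key]) is not expected:
            raise ValueError(f"{key} should be {expected.__name__}, got {type(args[key]).__name__}")
    return name, args

print(parse_tool_call('{"name": "get_weather", "arguments": {"city": "Oslo", "days": 3}}', TOOL_SPECS))
```

Rejecting invalid calls with a descriptive error message is useful beyond safety: the message can be fed back to a small model so it can repair its own output.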

Research and Tooling Accelerate GUI Agent Development

Agents designed to understand and interact with graphical user interfaces (GUIs) are seeing a surge in research and practical application. ScreenSuite, a comprehensive evaluation suite for GUI agents, provides a standardized way to benchmark progress in the field, while ScreenEnv facilitates the deployment of full-stack desktop agents. On the research side, SeeClick ("SeeClick: Harnessing GUI Grounding for Advanced Visual GUI Agents") shows how vision-language models can map natural-language instructions to on-screen elements, and introduces the ScreenSpot benchmark for measuring this GUI grounding ability, demonstrating the potential of LLMs to improve GUI agent capabilities.
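One common way to score the grounding step behind GUI agents is click accuracy, used for instance by the ScreenSpot benchmark introduced with SeeClick: a prediction counts as correct when the predicted click point falls inside the target element's bounding box. The sketch below implements that metric with hypothetical names. It does not describe how ScreenSuite scores its tasks.

```python
from typing import List, NamedTuple, Tuple

class Box(NamedTuple):
    """Target element's bounding box in screen pixels."""
    left: float
    top: float
    right: float
    bottom: float

def click_hit(point: Tuple[float, float], box: Box) -> bool:
    """True if the predicted click point lands inside the box (edges inclusive)."""
    x, y = point
    return box.left <= x <= box.right and box.top <= y <= box.bottom

def click_accuracy(points: List[Tuple[float, float]], boxes: List[Box]) -> float:
    """Fraction of predicted clicks that hit their target element."""
    if len(points) != len(boxes):
        raise ValueError("need one predicted point per target box")
    if not points:
        return 0.0
    return sum(click_hit(p, b) for p, b in zip(points, boxes)) / len(points)

targets = [Box(0, 0, 100, 40), Box(200, 300, 260, 330)]
predictions = [(50, 20), (10, 10)]
print(click_accuracy(predictions, targets))  # 0.5: first click hits, second misses
```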

This practical tooling is supported by a wave of academic research. A Hugging Face collection on GUI Agents highlights several papers exploring the challenges and potential solutions in this space (Paper 2406.10819, Paper 2412.04454). The challenges include understanding complex GUI layouts, handling dynamic interfaces, and generalizing across different applications. Architectures being explored combine visual perception, natural language processing, and reinforcement learning so that agents can navigate and manipulate GUI elements effectively. This dual emphasis on academic exploration and practical evaluation suggests significant advances are coming in agents that can operate our everyday software. Work such as "GUI-Transformer: A Transformer-Based Model for GUI Element Recognition" further demonstrates how advanced neural network architectures can improve GUI understanding, with reported state-of-the-art results on several GUI element detection benchmarks.

OpenEnv and GAIA2 Initiatives Drive Collaborative Agent Research

Two new initiatives are accelerating the development of AI agents by empowering the open-source community. The first, OpenEnv, is a collaborative project to build an open agent ecosystem, spearheaded by Hugging Face and supported by organizations like LAION. The goal is to create shared environments and tools that make it easier for researchers and developers to build, test, and benchmark their agents, fostering greater collaboration and reproducibility. OpenEnv aims to provide a standardized interface for various environments, allowing agents to be easily tested and compared across different tasks. This initiative seeks to address the fragmentation in the AI agent research space, where different researchers often use incompatible environments and tools, hindering progress. According to the OpenEnv announcement, the project is actively seeking community contributions and feedback. The roadmap includes expanding the range of supported environments and developing more sophisticated benchmarking tools.
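A "standardized interface" in practice means every environment exposes the same small set of methods, so one evaluation harness can drive any of them. The sketch below uses the reset/step shape popularized by Gym/Gymnasium. It is a hypothetical illustration, not OpenEnv's actual API, and the toy guessing environment exists only to make it runnable.

```python
from dataclasses import dataclass
from typing import Callable, Protocol

@dataclass
class StepResult:
    observation: str
    reward: float
    done: bool

class Environment(Protocol):
    """Hypothetical text-environment interface in the Gym-style reset/step shape."""
    def reset(self) -> str: ...
    def step(self, action: str) -> StepResult: ...

class GuessNumberEnv:
    """Toy environment: the agent must guess a hidden integer within max_turns."""

    def __init__(self, target: int = 7, max_turns: int = 5) -> None:
        self.target = target
        self.max_turns = max_turns
        self.turns = 0

    def reset(self) -> str:
        self.turns = 0
        return "Guess an integer between 1 and 10."

    def step(self, action: str) -> StepResult:
        self.turns += 1
        out_of_turns = self.turns >= self.max_turns
        try:
            guess = int(action)
        except ValueError:
            return StepResult("Please answer with an integer.", 0.0, out_of_turns)
        if guess == self.target:
            return StepResult("Correct!", 1.0, True)
        hint = "higher" if guess < self.target else "lower"
        return StepResult(f"Try {hint}.", 0.0, out_of_turns)

def evaluate(env: Environment, policy: Callable[[str], str], max_steps: int = 20) -> float:
    """Run one episode and return the total reward; works for any conforming environment."""
    observation = env.reset()
    total = 0.0
    for _ in range(max_steps):
        result = env.step(policy(observation))
        total += result.reward
        observation = result.observation
        if result.done:
            break
    return total

def counting_policy() -> Callable[[str], str]:
    """Baseline agent that ignores hints and guesses 1, 2, 3, ..."""
    n = 0
    def act(observation: str) -> str:
        nonlocal n
        n += 1
        return str(n)
    return act

print(evaluate(GuessNumberEnv(target=3), counting_policy()))  # 1.0: solved on turn 3
print(evaluate(GuessNumberEnv(target=9), counting_policy()))  # 0.0: runs out of turns
```

Because `evaluate` depends only on `reset` and `step`, swapping in a different environment or a smarter policy requires no changes to the harness. That separation is the reproducibility benefit a shared interface is meant to deliver.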

In a similar spirit, the release of the Gaia2 benchmark and Agent Research Environment (ARE) is designed to empower the community to study agent capabilities on challenging, real-world tasks. The Hugging Face blog emphasizes that by providing not just a difficult benchmark but also the environment in which to run it, the project lowers the barrier to entry for serious agent research. The GAIA project highlights the importance of evaluating agents on tasks that require reasoning and planning in complex, open-ended scenarios. These efforts highlight a growing movement to build a shared, open foundation for the future of AI agents, enabling more robust and reproducible research, and encouraging more individuals and organizations to contribute to the field.

Trending Spaces Showcase Practical Agent Applications

A look at the most popular new agent demos on Hugging Face Spaces provides a glimpse into what developers are currently building and experimenting with. A standout is the First agent template from the agents-course, which has garnered over 600 likes and provides a simple starting point for new developers exploring the rapidly evolving landscape of AI agents. This template likely benefits from the increasing interest in tools like LangChain and AutoGPT, which provide frameworks for building more complex agents LangChain Documentation.

Other trending applications highlight specific use cases. The virtual-data-analyst by nolanzandi demonstrates an agent capable of interpreting and responding to data analysis queries. This space showcases the potential for AI to automate and enhance data-driven decision-making. Meanwhile, osw-studio by otst presents an open-source web agent studio, providing a platform for building and deploying agents that can interact with websites. According to the Space description, it uses the CrewAI framework which provides agents with specific roles and responsibilities. These demos show a clear trend towards agents that can perform useful, real-world tasks in data analysis and web automation, reflecting a move from theoretical models to practical implementations. The growing popularity of these Spaces reflects the increasing accessibility and usability of AI agent technology.