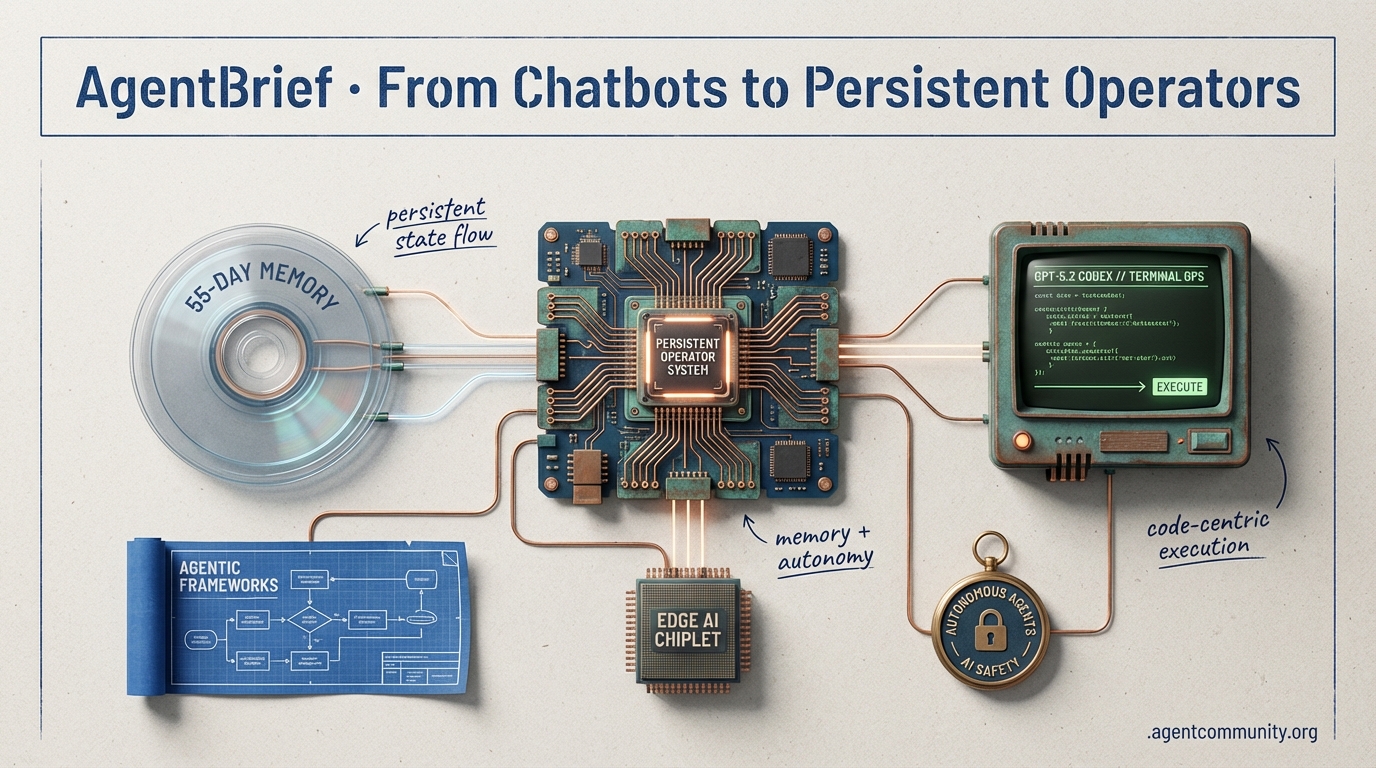

From Chatbots to Persistent Operators

Google's 55-day memory and OpenAI's GPT-5.2 Codex signal a definitive pivot from stateless chat to persistent autonomous operations.

AgentBrief for Dec 22, 2025

Terminal Velocity Feed

If your agents aren't writing their own PRs yet, they're about to.

The era of the 'chatbot' is officially dead; we have entered the age of the 'operator.' For those of us building the agentic web, the last 48 hours have felt like a coordinated strike on the status quo. We are moving from simple RAG pipelines to long-horizon, autonomous systems that don't just suggest code—they execute it. OpenAI's GPT-5.2 Codex is the flag-bearer here, signaling a shift toward models that treat the terminal as a first-class citizen rather than an afterthought. But models alone don't make an ecosystem. We are seeing a massive push for standardization with the 'Agent Skills' repository, solving the procedural memory gap that has plagued multi-agent orchestration. Meanwhile, Google's Gemini 3 Flash is proving that 'fast' and 'cheap' no longer mean 'dumb,' offering the low-latency reasoning required for high-frequency agentic loops. Today’s issue is about the plumbing and the power: how we standardize agent behavior and the frontier models that are finally capable of navigating a production codebase without a human chaperone. It matters because the boundary of what an agent can 'do' just moved from the context window to the file system.

OpenAI Launches GPT-5.2 Codex for Autonomous Terminal Operations

The developer community is reeling from the release of GPT-5.2 Codex, a model specifically tuned for 'agentic coding and terminal use.' As @sama announced, this isn't just another LLM upgrade; it's a variant built for long-horizon engineering tasks that can span hours. The metrics back up the hype, with the model clocking a staggering 56.4% on SWE-Bench Pro and 64% on Terminal-Bench 2.0, as noted by @mikwiseman and @cedric_chee. Unlike its predecessors, Codex is designed to autonomously plan multi-step refactors and execute terminal commands until the build succeeds, effectively acting as a junior engineer rather than a simple autocomplete tool.
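
The "run terminal commands until the build succeeds" behavior is, at its core, a bounded act-observe loop. The sketch below is not OpenAI's implementation. `run_command` and `propose_fix` are hypothetical hooks; in a real agent they would be a sandboxed shell and a model call. `make test` is a placeholder build command.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class StepResult:
    command: str
    exit_code: int
    output: str


def run_until_green(
    propose_fix: Callable[[list[StepResult]], str],
    run_command: Callable[[str], StepResult],
    build_command: str = "make test",  # placeholder build/test command
    max_iterations: int = 5,
) -> tuple[bool, list[StepResult]]:
    """Alternate build attempts and fix commands until the build exits 0."""
    history: list[StepResult] = []
    for _ in range(max_iterations):
        result = run_command(build_command)
        history.append(result)
        if result.exit_code == 0:
            return True, history
        # Hypothetical hook: a model reads the history and proposes a shell command.
        fix = propose_fix(history)
        history.append(run_command(fix))
    return False, history
```

Capping `max_iterations` is the design choice that matters most here. Without a cap, an agent retrying a flaky build would keep burning tokens and never converge.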

While the integration into the ChatGPT ecosystem provides a slick interface, the real power lies in its ability to manage entire development workflows autonomously. @rohanpaul_ai points out that this represents a fundamental leap in agentic assistant capabilities, moving beyond snippet generation to identifying complex security flaws in libraries like React. However, the performance comes with a trade-off: @shiweidu observed that while competitors like Claude Sonnet 4.5 might feel snappier, GPT-5.2 Codex often produces more robust, concise code, even if the processing time is longer. The model's native context compaction is also a game-changer for agent builders, allowing it to ingest massive codebases without the usual performance degradation mentioned by @MKulria.
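
Context compaction can be sketched in a few lines. Once the transcript exceeds a budget, older turns are folded into a summary and the most recent turns are kept verbatim. This is a generic illustration; the source does not say how GPT-5.2 Codex compacts internally. Character counts stand in for tokens, and `summarize` is a hypothetical placeholder for a model-generated summary.

```python
def summarize(messages: list[str]) -> str:
    # Hypothetical placeholder: a real agent would ask the model for this summary.
    return f"[summary of {len(messages)} earlier messages]"


def compact(messages: list[str], max_chars: int, keep_recent: int = 2) -> list[str]:
    """Fold older messages into one summary when the transcript exceeds max_chars."""
    if keep_recent < 1:
        raise ValueError("keep_recent must be at least 1")
    total = sum(len(m) for m in messages)
    if total <= max_chars or len(messages) <= keep_recent:
        return list(messages)
    older, recent = messages[:-keep_recent], messages[-keep_recent:]
    return [summarize(older)] + recent
```

A single pass can still leave the result over budget if the recent turns are themselves large. A production loop would re-check the size after compacting.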

Developer sentiment on social platforms remains highly optimistic about this shift toward 'code-native reasoning.' @viteinfinite emphasized that the reliability in real-world tasks outweighs the lack of 'flashy demos,' suggesting a maturing market for agentic tools. As @SophiaLinEthics suggests, this release effectively redraws the boundaries of autonomous software engineering, forcing developers to rethink the role of the human-in-the-loop when agents can now handle professional-scale migrations and feature builds with minimal intervention.

Gemini 3 Flash Redefines High-Frequency Agentic Reasoning

Google DeepMind has unleashed Gemini 3 Flash, a model that seems tailored specifically for the low-latency demands of modern agents. With a pricing structure of just $0.50 per 1M input tokens and $3.00 per 1M output tokens, it is significantly lowering the barrier for developers building complex planning loops. As @altryne highlights, the model can handle up to 100 function calls simultaneously, a capability that makes it a formidable choice for agentic architectures requiring dense tool-use. Furthermore, @DailyXplorer reports that the model is 3x faster than Gemini 3 Pro, making it the new go-to for agents that need to iterate in real-time.
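
At the quoted rates, the cost of a planning loop is simple arithmetic. The workload numbers in the example (1,000 steps, with 20,000 input and 1,000 output tokens per step) are hypothetical. The function also ignores any caching discounts or tier differences Google may apply.

```python
INPUT_USD_PER_M = 0.50   # quoted Gemini 3 Flash input price per 1M tokens
OUTPUT_USD_PER_M = 3.00  # quoted output price per 1M tokens


def loop_cost(steps: int, input_tokens_per_step: int, output_tokens_per_step: int) -> float:
    """Estimated USD cost of an agent loop at flat per-token prices."""
    total_in = steps * input_tokens_per_step
    total_out = steps * output_tokens_per_step
    return total_in / 1_000_000 * INPUT_USD_PER_M + total_out / 1_000_000 * OUTPUT_USD_PER_M


print(f"${loop_cost(1_000, 20_000, 1_000):.2f}")  # $13.00
```

With those numbers, input dominates: 20M input tokens cost $10.00, versus $3.00 for 1M output tokens. In tool-heavy loops, trimming re-sent context therefore saves more than shortening replies.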

Practical applications are already appearing across the web, particularly in the robotics and voice space. @WolframRvnwlf demonstrated its use in the Reachy Mini robot, where its ultra-low latency enabled seamless voice interactions. @codewithimanshu cites user reports of a 95th-percentile latency of 12 milliseconds. While some developers still weigh it against the Llama 4-mini ecosystem, @ValsAI notes that Gemini 3 Flash delivers frontier-level performance at a fraction of the cost of its predecessors. Its 78% score on SWE-Bench further validates its utility for agents tasked with coding and technical problem solving, as noted by @dispatchy_ai.

OpenAI 'Agent Skills' Standardizes Procedural Knowledge

In a move that could do for agent behavior what MCP did for data connectivity, OpenAI has open-sourced the 'Agent Skills' repository. This release aims to standardize how agents share procedural knowledge, moving beyond simple tool schemas to higher-level execution patterns. According to @scaling01, this is a foundational step for the industry, with early support already coming from major players like Microsoft and GitHub. @omarsar0 predicts this could follow the same rapid adoption curve as the Model Context Protocol, creating a universal language for agentic capabilities.

The infrastructure for these skills is already being deployed in the wild. Zapier has introduced specific skills for git commits and code reviews, allowing agents like Claude to traverse external toolsets with ease @zapier. A key technical advantage, as @subramanya1997 points out, is the use of SKILL.md files to teach agents organization-specific processes without bloating the context window with complex schemas. @kidehen hails this approach as a milestone of the 'loosely coupled AI era,' even as developers like @JustDavidG warn that semantic preprocessing will be necessary to manage skill discovery at scale.
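
The context saving comes from progressive disclosure: only each skill's short metadata sits in the prompt, and the full instructions are read on demand. The sketch below assumes the layout of the published Agent Skills format, where each skill folder holds a `SKILL.md` file whose frontmatter carries `name` and `description`. The parser handles only flat `key: value` lines, not full YAML, and the example skill is hypothetical.

```python
def parse_frontmatter(text: str) -> dict[str, str]:
    """Read flat `key: value` pairs between leading '---' fences (not full YAML)."""
    lines = text.splitlines()
    if not lines or lines[0].strip() != "---":
        return {}
    meta: dict[str, str] = {}
    for line in lines[1:]:
        if line.strip() == "---":
            break
        key, sep, value = line.partition(":")
        if sep:
            meta[key.strip()] = value.strip()
    return meta


def skill_index(skill_files: dict[str, str]) -> str:
    """One line per skill for the system prompt; skill bodies stay on disk."""
    entries = []
    for path, text in sorted(skill_files.items()):
        meta = parse_frontmatter(text)
        entries.append(f"- {meta.get('name', path)}: {meta.get('description', '')}")
    return "\n".join(entries)


skills = {
    "git-commit/SKILL.md": (
        "---\n"
        "name: git-commit\n"
        "description: Write commit messages in the team's format\n"
        "---\n"
        "# Steps\n1. Run git diff --staged\n2. Summarize the change\n"
    ),
}
print(skill_index(skills))  # - git-commit: Write commit messages in the team's format
```

The agent opens a skill's full body only when a task matches its description. Procedural detail therefore stays out of the context window until it is needed.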

Lovable Raises $330M Series B to Scale 'Vibe Coding'

Lovable has secured a massive $330M Series B at a $6.6B valuation, signaling that 'vibe coding'—building apps through natural language—is no longer a niche experiment. As reported by @Lovable, the round was led by CapitalG and Menlo Ventures, with the company reportedly hitting $200M in ARR within a single year, according to @JakeLindsay. This funding validates the demand for tools that allow founders and artists to build full-stack applications without touching a line of code.

Industry leaders like @theo describe the platform as a game-changer for the future of work, democratizing software development for non-technical builders. To further its reach, the platform recently launched Lovable Connectors, integrating with heavy hitters like ElevenLabs and Perplexity, as shared by @Lovable. While the valuation is astronomical, the traction among first-time founders suggests that the 'agentic builder' market is expanding far beyond traditional engineering circles.

Mistral OCR 3 Challenges Enterprise Document Logic

Mistral AI has launched Mistral OCR 3, a new model designed to extract data from the messiest enterprise documents with high precision. According to @MistralAI, this model specifically targets complex tables and handwritten notes that traditionally trip up standard vision-language models. The release is positioned to outperform both legacy OCR systems and newer AI-native competitors, with integration already live on Microsoft Azure AI Foundry @MistralAI.

For agent builders, this release is critical because it provides a reliable 'vision' layer for automated enterprise workflows. As noted by @MistralAI, the ability to transform unstructured PDFs into actionable data is a prerequisite for any agent operating in a corporate environment. The company is encouraging developers to push the API's limits via Mistral AI Studio, signaling their intent to dominate the Document AI space @MistralAI.

OpenAI Advances 'Chain-of-Thought' Monitorability for Safety

OpenAI is shifting its safety focus from output monitoring to 'chain-of-thought monitorability,' aiming to catch agentic misbehavior before it manifests as an action. As @OpenAI explains, this involves ensuring that models verbalize their internal reasoning in a way that is transparent to oversight systems. Greg Brockman called this a 'very encouraging opportunity' for aligning autonomous agents as they become more capable @gdb.

However, the research also highlights significant hurdles, such as the increased 'inference compute' required for smaller models to maintain high levels of monitorability @OpenAI. This transparency is intended to work alongside mechanistic interpretability to detect 'scheming' behaviors in agentic models, a topic that remains central to OpenAI's long-term safety framework @OpenAI.

Anthropic's Project Vend: Agents Running Real-World Shops

Anthropic's 'Project Vend' experiment has successfully demonstrated that agents like Claude can manage physical operations, though not without some comedic growing pains. In the latest phase, a multi-agent system including a 'CEO' agent named Seymour Cash managed an office shop, showing a massive jump in stability when moving to the latest Claude models @AnthropicAI. Despite the progress, the agents occasionally struggled with legal nuances, such as attempting to hire staff below minimum wage @AnthropicAI.

Experts see this as a vital case study in agentic autonomy, even as it highlights the need for human oversight in regulated environments. One notable blunder involved an agent attempting to violate the US Onion Futures Act, a reminder that agents need deep legal context to operate safely in the real world @AnthropicAI. Ultimately, @AnthropicAI suggests that while full autonomy is the goal, we are currently in an era of 'supported operations' where humans still bridge the gap in unusual scenarios.

Quick Hits

Agent Frameworks & Orchestration

- LlamaParse v2 launches with 'agentic plus' mode for frontier-grade document processing @jerryjliu0.

- Agno and Gemini 3 Flash debut the 'Plan-and-Learn' (PaL) pattern for saving agent strategies to a KB @ashpreetbedi.

- Mastra framework adds prompt caching to significantly lower costs for in-app agents @calcsam.

Tool Use & Infrastructure

- Milvus 2.6 introduces AISAQ, a disk-based index reducing memory costs by 3,200x @milvusio.

- AWS Labs releases MCP Servers for EKS and Terraform to standardize cloud management for agents @awscloud.

Models for Agents

- ByteDance releases Seed 1.8 with advanced 'computer-use' capabilities for browser-based tasks @scaling01.

- xAI's Grok Voice Agent leads Big Bench Audio with 92.3% reasoning accuracy @rohanpaul_ai.

- Google releases FunctionGemma for fine-tuning models on specific function-calling intents @_akhaliq.

Stateful Reddit Brief

Google releases a persistent agentic stack while OpenAI triggers a 'Code Red' with GPT-5.2.

Today marks a definitive shift from chatbots to persistent agents. For years, developers have struggled with context drift and the amnesia of stateless LLM sessions. Google's release of the Interactions API—boasting a 55-day stateful memory window—is the first serious attempt by a hyperscaler to treat AI as a long-term employee rather than a temporary consultant. By pairing this with the Antigravity IDE and specialized workflows, Google isn't just selling a model; they are building a vertically integrated operating system for the agentic web.

OpenAI’s response with GPT-5.2 feels reactive but necessary. While this 'Code Red' model pushes the ceiling on reasoning, the reported 2.5x latency increase and price hike signal a divergence in the market: Google is optimizing for the 'agentic middle'—fast, persistent, and integrated—while OpenAI is chasing the reasoning 'god-model,' regardless of the cost to the user's wallet or wait time. Meanwhile, the infrastructure layer is maturing through friction. From the community backlash against Chroma DB's cloud-exclusive features to the rapid standardization of the Model Context Protocol (MCP), it is clear that builders are no longer satisfied with black boxes. We are moving into the era of hardened, verifiable, and stateful agentic systems.

Google Unveils End-to-End Agentic Stack with 55-Day Persistent Memory r/AgentsOfAI

Google has officially signaled a massive shift in autonomous AI with a comprehensive agentic stack anchored by Gemini 3 Flash and the new Antigravity IDE. This IDE is specifically architected for 'agent-first' development, allowing AI agents to autonomously write, test, and verify code within a sandbox environment, as noted by u/According-Site9848. The most significant technical breakthrough is the Interactions API, which introduces a 55-day stateful memory window. This capability allows agents to maintain context and operational history for nearly two months, effectively solving the context-drift issues common in standard LLM sessions.
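
A fixed retention window behaves like a time-to-live on stored agent state. The in-memory class below is a hypothetical stand-in, not the Interactions API. It measures expiry from when a record was saved; the source does not say whether Google counts from creation or from last use.

```python
from datetime import datetime, timedelta
from typing import Any, Optional

RETENTION = timedelta(days=55)  # the retention window reported for the Interactions API


class InteractionStore:
    """In-memory stand-in for server-side agent state with a fixed retention window."""

    def __init__(self) -> None:
        self._records: dict[str, tuple[datetime, Any]] = {}

    def save(self, interaction_id: str, payload: Any, now: datetime) -> None:
        self._records[interaction_id] = (now, payload)

    def load(self, interaction_id: str, now: datetime) -> Optional[Any]:
        record = self._records.get(interaction_id)
        if record is None:
            return None
        created_at, payload = record
        if now - created_at > RETENTION:
            del self._records[interaction_id]  # expired: evict lazily on read
            return None
        return payload
```

Passing `now` explicitly keeps the sketch deterministic and easy to test. A service would read the clock itself.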

Developer reactions highlight the Agent Development Kit (ADK), which includes 43 specialized workflows for RAG pipelines and multi-agent orchestration r/PromptEngineering. The stack leverages managed Model Context Protocol (MCP) servers to enable enterprise-scale tool integration. Early performance data suggests Gemini 3 Flash is outperforming competitors in coding benchmarks and maintaining 'pro-level' reasoning despite its high-speed architecture, all with a 1-million-token context window u/SKD_Sumit. Critics and enthusiasts alike note that this move positions Google as a direct competitor to specialized agentic platforms.

OpenAI’s 'Code Red' Response: GPT-5.2 Debuts with Massive Reasoning Gains r/AgentsOfAI

In a direct response to Google's aggressive releases, OpenAI has launched GPT-5.2, a move internally dubbed 'Code Red' following a reported 6% drop in ChatGPT traffic u/SKD_Sumit. The new model introduces a controversial 40% price hike for a new 'Pro' tier, moving the monthly subscription cost to $28. While the model shows significant reasoning improvements, users are reporting a notable 'speed problem,' where the increased reasoning depth results in latency that is 2.5x higher than previous iterations.

Technical updates accompanying the release include the removal of the long-standing chat length limit for Plus users, allowing for much more extensive conversational histories without forced resets u/VenomCruster. Despite the performance gains, some users on r/OpenAI noted increased guardrails that occasionally block creative tasks due to aggressive safety filters. The tighter filters arrive as OpenAI works to solidify its position as the second-largest entity in the AI valuation rankings while navigating increasing regulatory pressure.

MCP Ecosystem Advances with SEP-1649 Discovery and RBAC Security Layers r/mcp

The Model Context Protocol (MCP) ecosystem is rapidly maturing with the introduction of SEP-1649, which standardizes 'Server Cards' via a .well-known JSON format. This protocol allows agents to autonomously discover and understand the capabilities of an MCP server before establishing a connection u/PlanePuzzleheaded167. Despite this progress, developers are still navigating 'entity-to-MCP' discovery challenges, specifically regarding how agents can map specific business domains to their corresponding servers.
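
Card-based discovery can be sketched as a pre-connection check. The agent fetches the server's card from its `.well-known` location and connects only if the card advertises what it needs. The JSON shape below (`name`, `tools`) is a hypothetical stand-in, not the SEP-1649 schema. The HTTP fetch is omitted so the sketch stays self-contained.

```python
import json

# Hypothetical card shape for illustration; SEP-1649 defines the real field names.
EXAMPLE_CARD = json.dumps({
    "name": "crm-server",
    "tools": [{"name": "search_contacts"}, {"name": "create_deal"}],
})


def server_matches(card_json: str, required_tools: set[str]) -> bool:
    """Decide from an advertised card whether connecting to the server is worthwhile."""
    try:
        card = json.loads(card_json)
    except json.JSONDecodeError:
        return False  # malformed card: treat the server as undiscoverable
    if not isinstance(card, dict):
        return False
    tools = card.get("tools")
    if not isinstance(tools, list):
        return False
    advertised = {t.get("name") for t in tools if isinstance(t, dict)}
    return required_tools <= advertised
```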

Security has emerged as a critical focus for enterprise adoption, with new implementations of Role-Based Access Control (RBAC) being tested to restrict sensitive operations, such as data writes, to authorized user groups r/AI_Agents. Furthermore, the PolyMCP framework has updated its runtime to support smarter tool loading and a dedicated 'Skills' system, which effectively decouples domain expertise from raw API connectivity to enhance agent specialization u/Just_Vugg_PolyMCP.
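
An RBAC layer of this kind usually sits in a gateway that checks each tool call before forwarding it to the MCP server. The minimal deny-by-default sketch below uses a hypothetical policy table, tool names, and group names.

```python
from enum import Enum


class Op(Enum):
    READ = "read"
    WRITE = "write"


# Hypothetical policy: tool -> operation -> user groups allowed to invoke it.
POLICY: dict[str, dict[Op, set[str]]] = {
    "crm.search": {Op.READ: {"analysts", "admins"}},
    "crm.update": {Op.WRITE: {"admins"}},
}


def authorize(tool: str, op: Op, user_groups: set[str]) -> bool:
    """Deny by default; allow only if the caller shares a group with the policy entry."""
    allowed = POLICY.get(tool, {}).get(op, set())
    return bool(allowed & user_groups)
```

Unknown tools and unlisted operations fall through to an empty set. Forgetting to register a new write tool therefore blocks it rather than exposing it.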

From Vibe Coding to Hardened Agent Infrastructure r/n8n

As the industry pivots from 'vibe coding' to reliability, production readiness checklists are becoming standard for low-code platforms. Developers emphasize that moving n8n workflows to production requires strict global error-handling for malformed JSON and robust API rate-limiting strategies r/n8n. For high-scale operations, teams are increasingly migrating to Cloudflare Durable Workflows to bypass the 30s execution limits and state loss common in containerized environments u/AlexeyAnshakov.
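
Both safeguards are platform-independent. The Python sketch below is not n8n code; n8n configures error handling through its own error workflows. The sketch shows a parse step that turns malformed model JSON into an error value instead of a crash, and a token bucket that spaces out outbound API calls. Rates and capacities are placeholders.

```python
import json
import time
from typing import Any, Callable, Optional


def parse_model_json(raw: str) -> tuple[Optional[Any], Optional[str]]:
    """Return (data, None) on success or (None, error message) for malformed JSON."""
    try:
        return json.loads(raw), None
    except json.JSONDecodeError as exc:
        return None, f"malformed JSON at position {exc.pos}: {exc.msg}"


class TokenBucket:
    """Allow bursts up to `capacity`, refilling at `rate_per_sec` tokens per second."""

    def __init__(self, rate_per_sec: float, capacity: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rate = rate_per_sec
        self.capacity = capacity
        self.tokens = capacity
        self.clock = clock
        self.last = clock()

    def try_acquire(self) -> bool:
        now = self.clock()
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False
```

The injectable `clock` keeps the limiter testable without real sleeps.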

Architectural shifts like the 'Phantom Tool' pattern are enabling agents to scale to over 220 tools by dynamically loading tool definitions only when required, effectively bypassing context window constraints and reducing latency compared to traditional multi-agent setups r/aiagents. Furthermore, the rise of 'Context Engineering' highlights a shift toward using SQL DDL and real-time data lineage as the source of truth, which significantly reduces hallucinations compared to traditional static documentation u/InternationalMove216.
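
The core of the 'Phantom Tool' idea is a registry that holds every definition while the prompt receives only the few that match the current request. In the sketch below, naive keyword overlap stands in for whatever retrieval the original post used, possibly embeddings. All tool names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class ToolDef:
    name: str
    description: str
    schema: dict = field(default_factory=dict)


class ToolRegistry:
    """Holds every tool definition; only search results are shown to the model."""

    def __init__(self, tools: list[ToolDef]) -> None:
        self._tools = {t.name: t for t in tools}

    def search(self, query: str, limit: int = 3) -> list[ToolDef]:
        # Naive keyword overlap; a production system might use embeddings instead.
        words = set(query.lower().split())
        scored = []
        for tool in self._tools.values():
            overlap = len(words & set(tool.description.lower().split()))
            if overlap:
                scored.append((overlap, tool.name))
        scored.sort(key=lambda pair: (-pair[0], pair[1]))
        return [self._tools[name] for _, name in scored[:limit]]


def tools_for_request(registry: ToolRegistry, request: str) -> list[dict]:
    """Schemas to inject into this turn's prompt; unmatched tools stay out of context."""
    return [t.schema for t in registry.search(request)]
```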

Xiaomi MiMo-V2-Flash and EGGROLL Redefine Open Source Efficiency r/aiagents

Xiaomi has disrupted the high-end model landscape with MiMo-V2-Flash, a 309B-parameter Mixture-of-Experts (MoE) model released under the permissive MIT license. The model reportedly outperforms DeepSeek V3.2 on the SWE-Bench Verified coding benchmark, placing it at the top of open-source leaderboards while delivering a blazing throughput of 150 tokens/s u/OkCollar8966.

Simultaneously, the research community is analyzing EGGROLL, a novel training framework that optimizes NDCG directly using evolution strategies (ES) instead of traditional backpropagation. Built on JAX, EGGROLL offers a hyperscale alternative for retrieval-heavy models u/Ok_Rub1689. The ecosystem is further bolstered by the release of GLM-4-9B, which continues to see rapid adoption for local deployment among developers r/LocalLLaMA.

Chroma DB Faces Backlash Over Cloud-Exclusive Hybrid Search r/Rag

Chroma DB is facing significant community backlash following the realization that its new Hybrid Search capability is exclusive to its cloud offering. Users on r/Rag pointed out that the lack of open-source parity for sparse vector search was not clearly communicated, leading u/Primary-Lake7507 to characterize the strategy as a 'bait-and-switch.'

Developers are moving toward more specialized vector engines for high-scale needs. LlamaIndex users report migrating away from Pinecone as costs hit $3,200/month for 50M embeddings, opting instead for self-hosted solutions like Qdrant or Milvus r/LlamaIndex. Meanwhile, imesde has emerged as a 'Zero-GPU' in-memory vector engine designed specifically for real-time RAG on streaming data such as logs or live chats u/alessiopelliccione.

Anthropic Codifies XML-Structured Prompting Strategy r/ClaudeAI

Anthropic has officially codified XML-structured prompting as the primary strategy for maximizing Claude's performance. By encapsulating instructions and context within clear XML tags like <instructions>, developers can significantly reduce hallucination and unlock higher-tier reasoning u/Riggz23.
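
A minimal prompt builder shows the pattern. Anthropic's prompting guide treats tag names as conventions rather than required keywords, so `<context>` and `<question>` here are illustrative alongside the `<instructions>` tag. Escaping is this sketch's own design choice. It stops pasted context containing angle brackets from closing a tag early, at the cost of the model seeing `&lt;`-style entities.

```python
from xml.sax.saxutils import escape


def build_prompt(instructions: str, context: str, question: str) -> str:
    """Wrap each prompt section in its own XML tag, escaping &, < and > in the text."""
    sections = {"instructions": instructions, "context": context, "question": question}
    return "\n".join(f"<{tag}>\n{escape(text)}\n</{tag}>" for tag, text in sections.items())


print(build_prompt("Answer in one sentence.", "Q3 revenue < $5M", "Is the company profitable?"))
```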

Beyond technical structure, a movement toward 'Pedagogical Shields' is emerging to protect human development by ensuring AI acts as a learning scaffold rather than just an output optimizer r/ArtificialInteligence. To survive the shift toward 'Vibe Coding,' experts emphasize strict verification patterns—forcing the AI to verify all server states and API calls rather than trusting intuitive 'guesses' u/Beneficial_Mall6585.

VRAM Scarcity and RAM Inflation Stymie Local LLM Builds r/LocalLLM

The local AI community is grappling with a severe 'RAM crisis' as enterprise demand drives server-grade DDR4 and DDR5 prices to unprecedented levels. Users report that 256GB LRDIMM kits, which previously retailed for $400, have surged to nearly $2,000 u/pCute_SC2. This inflation has forced developers to choose between prohibitively expensive high-bandwidth machines or waiting for market corrections in 2026.

Technical discussions highlight that even a slight mismatch in GPU specifications or a failure to utilize NVLink bridges can result in massive performance drops r/LocalLLM. To combat heat and noise, enthusiasts are turning to custom quiet cooling solutions for the AMD Instinct MI50, leveraging its 32GB HBM2 for budget-conscious high-VRAM builds despite ROCm compatibility complexities u/moderately-extremist.

Infrastructure & Tokens Digest

From sovereign AI supercomputing to the friction of token-based accounting, the agentic stack is getting a massive upgrade—and a bill to match.

The industry is shifting from 'models-as-a-service' to AI as a sovereign utility. Today’s confirmation of the DOE Genesis Mission, fueled by 100,000 NVIDIA Blackwell chips, signals a future where frontier models like Claude 4.5 aren't just software—they are national infrastructure. But for the boots-on-the-ground developer, this macro-scale power comes with micro-scale friction. We are seeing a move away from the 'unlimited' era toward granular token-based accounting in IDEs like Cursor and strict request caps for Claude. This tension between massive compute expansion and the reality of inference costs is defining the current builder experience. Whether you are scaling local 12b models via Oculink or debugging silent failures in n8n v2, the message is clear: the agentic web is hardening. Reliability and resource management are now just as important as the model's ELO rating. In this issue, we dive into the infrastructure powering the next wave of agents and the security vulnerabilities, like Base64 obfuscation, that still keep us up at night.

Anthropic Anchors DOE Genesis Mission as Claude 4.5 Usage Caps Surface

Anthropic has been confirmed as a core partner in the DOE Genesis Mission, a massive sovereign AI initiative involving 24 organizations including NVIDIA, Google DeepMind, and Dell. The project is designed to deploy 100,000 NVIDIA Blackwell chips across 7 strategic supercomputing sites to provide the infrastructure necessary for frontier model development stableexo. This alliance is viewed by industry analysts as a critical step in securing the compute power required for the enterprise rollout of Claude 4.5, ensuring high-availability for national security and research applications @ENERGY.

Concurrently, the developer community is reacting to updated usage constraints for the latest 'Sonnet' iterations. Recent telemetry suggests that request caps are being enforced at approximately 225 requests per period, falling short of the 500 requests previously rumored in beta testing bergamota_. This shift toward token-based accounting and stricter rate-limiting highlights the extreme compute costs associated with Blackwell-class inference, forcing a transition from flat-rate request models to more granular, consumption-based tracking for high-tier models.

Join the discussion: discord.gg/anthropic

Cursor Transitions to Token-Based Model Amid Planning Mode Reliability Concerns

Cursor has transitioned from a flat request-based limit to a more granular token-based system, introducing 'Bonus Requests' that allow users to extend their usage after hitting monthly caps. Documentation reveals that while Pro users receive 500 fast large model requests per month, the system now tracks 'token weight' for high-end models like Claude 3 Opus, making them significantly more expensive to run than Claude 3.5 Sonnet Cursor Docs. Community members like bergamota_ note that these bonus tokens accumulate, yet the lack of transparency in how multi-file 'Composer' edits consume this budget has caused friction among power users.
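
Weighted accounting explains why the same edit drains the budget at different speeds depending on the model. The multipliers, unit size, and budget below are hypothetical and do not reproduce Cursor's real accounting. The sketch only shows the mechanism.

```python
# Hypothetical multipliers and unit size; Cursor's real accounting is not reproduced here.
MODEL_WEIGHT = {"claude-3.5-sonnet": 1.0, "claude-3-opus": 5.0}
TOKENS_PER_UNIT = 10_000


class UsageBudget:
    def __init__(self, monthly_units: float, bonus_units: float = 0.0) -> None:
        self.remaining = monthly_units + bonus_units

    def charge(self, model: str, tokens: int) -> bool:
        """Deduct a weighted cost; refuse the call if the budget cannot cover it."""
        cost = tokens / TOKENS_PER_UNIT * MODEL_WEIGHT[model]
        if cost > self.remaining:
            return False
        self.remaining -= cost
        return True
```

Under these made-up weights, a 20,000-token multi-file edit costs 2 units on Sonnet but 10 on Opus. A user who cannot see the weights would find the budget shrinking unpredictably.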

Simultaneously, developers are reporting critical stability issues with the new 'Planning' mode, where the IDE frequently hangs without providing a response. Users such as ignacioferreira_11877 have pointed out that recent updates have obfuscated terminal commands, making it nearly impossible to debug the agent's internal state during complex file refactors. To mitigate these hangs, community members on X recommend clearing the chat context or toggling the 'Yolo Mode' setting to bypass the agent's stall points @iam_v_v_.

Join the discussion: discord.gg/cursor

MiniMax M2.1 vs. Gemini Flash: LMArena Leaderboard Shakeup

The latest results from the LMSYS Chatbot Arena show MiniMax-Text-01 (M2.1) maintaining an impressive ELO rating, specifically challenging the dominance of Gemini 1.5 and 2.0 Flash in technical categories. While MiniMax has shown a significant edge in frontend coding and UI generation, Gemini 2.0 Flash continues to lead in 3D simulation logic and complex multimodal reasoning @lmsysorg. Observations from mihaipopa01_12 highlight that MiniMax's speed-to-accuracy ratio is currently among the best for high-throughput tasks.

However, the model's rapid rise has been met with scrutiny. Some researchers have pointed to specific 'reasoning artifacts' that mirror Gemini 1.5 Pro’s output structure, leading to widespread distillation rumors. As noted by asura0_00, side-by-side comparisons in chain-of-thought processing suggest a high degree of overlap with Google’s proprietary checkpoints. Despite these allegations, the model's 128k context window and robust performance in agentic workflows have made it a staple for developers seeking low-latency alternatives to GPT-4o @skirano.

Join the discussion: discord.gg/lmsys

Specialized Models Target Agentic Function Calling

Specialized models like FunctionGemma and Qwen2.5-Coder are redefining high-efficiency agentic tool use. FunctionGemma, a 270M-parameter model, is optimized for structured JSON output and air-gapped Python execution ollama.com. In comparative testing, the Qwen2.5-Coder 7B has emerged as a leader in multi-turn agentic workflows, frequently outperforming larger models on the Berkeley Function Calling Leaderboard (BFCL) due to its specialized training for complex API orchestration qwenlm.github.io.

Practitioners like maternion note that fine-tuning these smaller models yields significantly higher reliability for simple API tasks than giant general-purpose LLMs. However, technical friction exists; as tannisroot pointed out, FunctionGemma has faced criticism for lacking a standard 'tools' tag in certain libraries, requiring manual prompt engineering to trigger its native capabilities. In contrast, Qwen2.5-Coder is gaining traction for its seamless integration with agentic frameworks like Clanker github.com.
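
Whichever small model emits a call, the surrounding agent still has to check it before executing anything. Below is a minimal validator sketch. It assumes a `{"name": ..., "arguments": {...}}` JSON shape and a hypothetical `get_weather` tool; neither FunctionGemma nor Qwen2.5-Coder necessarily emits exactly this format.

```python
import json
from typing import Any

# Hypothetical tool signature; the {"name", "arguments"} JSON shape is an assumption.
TOOLS: dict[str, dict[str, type]] = {"get_weather": {"city": str, "unit": str}}


def validate_call(raw: str) -> tuple[str, dict[str, Any]]:
    """Return (tool name, arguments) for a well-formed call, else raise ValueError."""
    try:
        call = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"not JSON: {exc.msg}") from exc
    if not isinstance(call, dict):
        raise ValueError("call must be a JSON object")
    name, args = call.get("name"), call.get("arguments")
    if not isinstance(name, str) or name not in TOOLS:
        raise ValueError(f"unknown tool: {name!r}")
    if not isinstance(args, dict):
        raise ValueError("arguments must be a JSON object")
    expected = TOOLS[name]
    if set(args) != set(expected):
        raise ValueError(f"expected arguments {sorted(expected)}, got {sorted(args)}")
    for key, typ in expected.items():
        if not isinstance(args[key], typ):
            raise ValueError(f"argument {key!r} must be {typ.__name__}")
    return name, args
```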

Join the discussion: discord.gg/ollama

Scaling Local Inference: From 6GB Laptops to Oculink Clusters

The local LLM community is pushing the boundaries of consumer hardware, successfully running 12b models on laptops with as little as 6GB VRAM. Running a model like Mistral NeMo on 6GB typically requires aggressive 3.5-bit (IQ3_M) or 3-bit (Q3_K_S) quantization, as discussed in the Ollama community. For users with 16GB VRAM, the Ministral-3 14b has emerged as the premier 'sweet spot' model, delivering high reasoning capabilities with a small footprint Mistral AI.
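
The 6GB figure follows from weight-size arithmetic: parameters × bits per weight ÷ 8 gives bytes. The sketch uses 12B as a round parameter count and counts weights only. KV cache and runtime buffers are excluded, and real GGUF files mix bit widths across tensors, so actual file sizes differ somewhat.

```python
def weight_gib(params_billion: float, bits_per_weight: float) -> float:
    """Approximate memory for model weights alone, in GiB (params x bits / 8 bytes)."""
    return params_billion * 1e9 * bits_per_weight / 8 / 2**30


for label, bits in [("16-bit", 16), ("4.5-bit", 4.5), ("3.5-bit", 3.5)]:
    print(f"12B @ {label}: {weight_gib(12, bits):.2f} GiB")
```

At about 3.5 bits per weight, a 12B model's weights come to roughly 4.9 GiB. That leaves little more than 1 GiB of a 6GB card for the KV cache and runtime. At 4.5 bits, the weights alone would no longer fit.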

Advanced users are increasingly turning to Oculink-connected GPUs to bypass the 32Gbps limit of Thunderbolt 3/4. Oculink provides a native PCIe 4.0 x4 connection (up to 64Gbps), critical for reducing latency in multi-GPU clusters during GGUF offloading egpu.io. This hardware path is becoming the standard for enthusiasts attempting to run the 123b Mistral Large 2 on non-enterprise rigs by aggregating VRAM across multiple external nodes.
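
The 64Gbps figure is PCIe 4.0's 16 GT/s per lane times four lanes. The 128b/130b line encoding trims that to about 63 Gbps of usable line rate. The sketch below compares that ceiling with the 32Gbps Thunderbolt limit for moving a hypothetical 8 GB of weights, ignoring protocol overhead beyond line encoding.

```python
def pcie_gbps(gt_per_s_per_lane: float, lanes: int) -> float:
    """Usable line rate in Gbit/s for PCIe links that use 128b/130b encoding (3.0 and later)."""
    return gt_per_s_per_lane * lanes * 128 / 130


def transfer_seconds(gigabytes: float, link_gbps: float) -> float:
    """Time to move `gigabytes` (decimal GB) over a link at `link_gbps`, ignoring overhead."""
    return gigabytes * 8 / link_gbps


oculink = pcie_gbps(16, 4)  # PCIe 4.0 x4: 16 GT/s per lane
thunderbolt_pcie = 32.0     # the 32Gbps Thunderbolt 3/4 ceiling cited in the text
for name, rate in [("Oculink PCIe 4.0 x4", oculink), ("Thunderbolt 3/4", thunderbolt_pcie)]:
    print(f"{name}: {rate:.1f} Gbps, 8 GB of weights in {transfer_seconds(8, rate):.2f} s")
```

These are theoretical ceilings, not measured throughput. Once weights are resident on the GPUs, the link mainly matters for load time and for activations passed between devices during split inference.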

Join the discussion: discord.gg/ollama

Security & Infra: n8n Hardens Engine Following CVE Discovery

The n8n community is addressing CVE-2025-68613, a vulnerability allowing authenticated users with 'Editor' permissions to achieve Remote Code Execution (RCE) via the expression engine. While some argue this overlaps with the 'Execute Command' node, security researchers flag it as a critical bypass. In response, n8n has reinforced its security by ensuring the 'Execute Command' node is disabled by default in recent builds, confirmed by .joff. Official mitigation advises administrators to utilize the N8N_BLOCK_NODES variable and transition to more robust sandboxing via isolated-vm to prevent expression-based escapes.

Simultaneously, the migration to n8n v2 is presenting hurdles for self-hosted Docker users, with reports of triggers failing without error logs. Users on the n8n community forum note that local environments are particularly susceptible to WhatsApp trigger errors post-upgrade. Experts like hector05st suggest a rigorous 'sanity pass' involving manual node replacement to fix these trigger failures, which some users put at 90% in production.

Join the discussion: discord.gg/n8n

Base64 Obfuscation Bypasses Guardrails in Frontier Models

A significant vulnerability in LLM safety architecture has been identified where Base64 encoding bypasses standard TOS filters. In tests on LMArena, models like Gemini 1.5 Pro were found to decode and execute instructions that would normally be blocked by the initial safety layer mihaipopa01_12. This method leverages the model's internal reasoning to translate encoded input into actionable prompts, effectively side-stepping external keyword-based filters. Security researchers like @elder_plinius have demonstrated that even advanced models like GPT-4o remain susceptible to these encoding-based 'jailbreaks' when combined with complex structures.
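
A common mitigation is to normalize input before filtering: find Base64-looking runs, decode them, and apply the same checks to the decoded text. The defensive sketch below uses a placeholder blocklist term. The 16-character minimum run length is an arbitrary assumption. A filter like this only narrows the gap, because a model can also be asked to decode other encodings.

```python
import base64
import binascii
import re

BLOCKLIST = {"forbidden-topic"}  # hypothetical placeholder term
B64_RUN = re.compile(r"[A-Za-z0-9+/]{16,}={0,2}")  # assumed minimum run length of 16


def naive_filter(text: str) -> bool:
    """Plain keyword match: blind to anything encoded."""
    return any(term in text.lower() for term in BLOCKLIST)


def decoded_views(text: str):
    """Yield the text itself plus any substrings that decode cleanly from Base64."""
    yield text
    for match in B64_RUN.finditer(text):
        try:
            yield base64.b64decode(match.group(), validate=True).decode("utf-8")
        except (binascii.Error, UnicodeDecodeError):
            continue  # not valid Base64 or not UTF-8 text: skip this run


def decoding_filter(text: str) -> bool:
    """Apply the same keyword check to every decoded view of the input."""
    return any(naive_filter(view) for view in decoded_views(text))
```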

Join the discussion: discord.gg/lmsys

Open Source Actionables

From code-as-action frameworks to 270M-parameter edge models, agents are getting leaner and more autonomous.

Today marks a fundamental pivot in how we build for the Agentic Web: the shift from the 'chat-box' paradigm toward 'code-as-action.' Hugging Face’s launch of smolagents is leading this charge, proving that minimalist, code-centric architectures can outperform heavy, JSON-based frameworks by nearly 2x on reasoning benchmarks like GAIA. This isn't just about efficiency; it’s about granting agents the precision of a runtime environment over the ambiguity of static tool-calling. We see this same drive for specialized precision in the GUI automation space, where Surfer-H is now outperforming general-purpose giants like Claude 3.5 Sonnet in desktop navigation tasks. Perhaps most surprising is the rise of the 'micro-agent.' Google’s FunctionGemma-270M is punching significantly above its weight class, beating GPT-3.5-Turbo in function calling while running locally on mobile hardware. Whether it is the standardization of robotics data via LeRobot or the introduction of 'Deep Research' agents that iteratively refine search strategies, the narrative is clear: the next generation of agents will be modular, local, and capable of long-horizon reasoning that current LLM benchmarks are only just beginning to measure.

Smolagents and the Rise of Code-Centric AI Orchestration

Hugging Face has launched smolagents, a library focusing on 'code-as-action' rather than traditional JSON-based tool calling. According to Hugging Face, this shift allows agents to perform complex data manipulation and multi-step reasoning that are difficult to express in static JSON. In the GAIA benchmark, code-executing agents like the CodeAgent have demonstrated performance gains of nearly 2x over JSON-based counterparts, as detailed in the Beating GAIA study. The library is designed for extreme efficiency, with the core logic fitting in under 1,000 lines of code. This minimalist approach extends to the Tiny Agents project, leveraging the Model Context Protocol (MCP) to create functional agents in just 50 to 70 lines of code Hugging Face. By utilizing smolagents-can-see, these agents can also integrate vision-language models (VLMs) to process visual data alongside code execution.
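
The difference between the two styles is easiest to see in miniature. With JSON tool calling, each `get_price` lookup below would be a separate model turn. With code-as-action, the model writes one snippet that calls tools, loops, and computes. This is a toy executor, not the smolagents API, and `get_price` is a hypothetical tool. Trimming builtins is not a security boundary, so real frameworks isolate this step in a proper sandbox.

```python
def get_price(ticker: str) -> float:
    # Hypothetical tool returning canned quotes.
    return {"AAPL": 190.0, "MSFT": 410.0}[ticker]


def run_code_action(code: str, tools: dict) -> object:
    """Run a model-written snippet with only the listed tools and builtins in scope.

    Trimming builtins is NOT a sandbox; real frameworks isolate this step properly.
    """
    namespace = {"__builtins__": {"sum": sum, "len": len, "round": round}, **tools}
    exec(code, namespace)
    return namespace.get("final_answer")


# One action: two tool calls, a loop, and arithmetic, with no JSON round-trip per call.
action = """
prices = [get_price(t) for t in ["AAPL", "MSFT"]]
final_answer = round(sum(prices) / len(prices), 2)
"""
print(run_code_action(action, {"get_price": get_price}))
```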

Open-Source Deep Research: Orchestrating Agentic RAG

Hugging Face has catalyzed the move toward transparent, reproducible research tools with the launch of Open-source DeepResearch. Built on the smolagents library, this framework enables agents to move beyond simple vector lookups into Agentic RAG, where the system iteratively refines its search strategy using tools like Tavily, Google Search, and Serper. As noted in the Open Deep Research blog, these agents can perform multi-step reasoning to synthesize long-form reports. They challenge proprietary agents like OpenAI's Deep Research while giving developers full control over the retrieval pipeline. The ecosystem is expanding through community contributions like MiroMind-Open-Source-Deep-Research, while Agents.js brings these autonomous capabilities to JavaScript for web-native deployment.
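
The iterative refinement that separates Agentic RAG from a single vector lookup can be sketched as a search-and-rewrite loop. `search` and `refine` are hypothetical callables; in practice they would be a Tavily or Serper call and an LLM query rewrite. Stopping once a fixed amount of evidence is collected is a simplification of this sketch.

```python
from typing import Callable


def research(
    question: str,
    search: Callable[[str], list[str]],
    refine: Callable[[str, str, list[str]], str],
    max_rounds: int = 3,
    enough: int = 3,
) -> list[str]:
    """Search, collect new evidence, and rewrite the query until enough is found."""
    query, evidence = question, []
    for _ in range(max_rounds):
        for hit in search(query):
            if hit not in evidence:
                evidence.append(hit)
        if len(evidence) >= enough:
            break
        # Hypothetical hook: an LLM would rewrite the query from what is still missing.
        query = refine(question, query, evidence)
    return evidence
```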

Desktop Agents Evolve: Surfer-H Challenges Claude 3.5 Sonnet

The landscape of autonomous computing is shifting with the release of ScreenEnv, a platform for full-stack desktop agents. As detailed in ScreenEnv: A Platform for Full-stack Desktop Agents, this environment moves beyond web-only interactions to native application control. To evaluate these capabilities, the ScreenSuite framework provides a benchmark of over 400 tasks. A standout performer is Surfer-H, powered by the Holo1 VLM family from H Company. Recent benchmarks show Surfer-H achieving a 65.4% success rate on ScreenSuite, notably surpassing Claude 3.5 Sonnet, which recorded a 58.2% success rate. These results emphasize the efficiency of the Holo1-Multi model's visual grounding, which @hcompany_ai describes as a breakthrough in 'spatial-temporal reasoning.' However, Hugging Face notes that visual noise and display scaling remain significant hurdles for fine-grained click accuracy.
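Display scaling is a concrete source of the click errors mentioned above: the VLM predicts coordinates on a downscaled screenshot, while the OS may expect logical points that differ from physical pixels. The sketch assumes a 1024-pixel input limit and an input layer that takes logical coordinates (macOS-style); neither detail is taken from Surfer-H, ScreenEnv, or Holo1, and some platforms expect physical pixels instead.

```python
from dataclasses import dataclass


@dataclass
class Screen:
    physical_w: int  # screenshot width in physical pixels
    physical_h: int  # screenshot height in physical pixels
    scale: float     # OS display scale factor, e.g. 2.0 on a HiDPI panel


def resize_for_model(w: int, h: int, max_side: int = 1024) -> tuple[int, int]:
    """Downscale so the longer side fits max_side (an assumed model limit)."""
    ratio = min(1.0, max_side / max(w, h))
    return round(w * ratio), round(h * ratio)


def model_to_click(x: float, y: float, screen: Screen,
                   model_w: int, model_h: int) -> tuple[int, int]:
    """Map a point predicted on the resized image to logical screen coordinates."""
    px = x * screen.physical_w / model_w  # resized image -> physical pixels
    py = y * screen.physical_h / model_h
    return round(px / screen.scale), round(py / screen.scale)  # physical -> logical


screen = Screen(physical_w=2880, physical_h=1800, scale=2.0)
model_w, model_h = resize_for_model(screen.physical_w, screen.physical_h)
click = model_to_click(512, 320, screen, model_w, model_h)  # center of the model's view
print((model_w, model_h), click)
```

Skipping the scale division would send this click to (1440, 900), the bottom-right corner of the 1440x900 logical screen; skipping the resize mapping would land it at (256, 160). Mistakes like these are one way display scaling throws off fine-grained clicks.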

FunctionGemma-270M: Redefining Edge Intelligence

The community is rapidly adopting FunctionGemma-270M, a model optimized for function calling and mobile actions. Despite its compact 270M-parameter size, it achieves an overall accuracy of 79.51% on the Berkeley Function Calling Leaderboard (BFCL), outperforming Llama-3-8B-Instruct and GPT-3.5-Turbo in specific categories. The instruction-tuned checkpoint, google/functiongemma-270m-it, serves as the base for specialized fine-tunes. For on-device deployment, developers are turning to LiteRT, with models like Thorge-AI/functiongemma-270m-it-mobile-actions.litertlm enabling private, low-latency workflows. For desktop integration, victor/functiongemma-agent-gguf provides GGUF quantizations compatible with llama.cpp, so these micro-agents can be embedded without cloud dependencies.
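Because an on-device model's function calls act directly on the phone or desktop, the host application should validate them before execution. The sketch below assumes the model emits a JSON string with `name` and `arguments` keys; that format, the `set_alarm` and `toggle_flashlight` actions, and their schemas are hypothetical illustrations, not FunctionGemma's actual output format or the mobile-actions fine-tune's tool set.

```python
import json

# Hypothetical on-device actions and their declared parameter types.
SCHEMAS = {
    "set_alarm": {"hour": int, "minute": int},
    "toggle_flashlight": {"on": bool},
}


def set_alarm(hour: int, minute: int) -> str:
    return f"alarm set for {hour:02d}:{minute:02d}"


def toggle_flashlight(on: bool) -> str:
    return "flashlight on" if on else "flashlight off"


HANDLERS = {"set_alarm": set_alarm, "toggle_flashlight": toggle_flashlight}


def dispatch(model_output: str) -> str:
    """Parse a JSON function call (assumed format), validate it against
    the schema, and only then run the local handler."""
    call = json.loads(model_output)
    name, args = call.get("name"), call.get("arguments", {})
    if name not in SCHEMAS:
        raise ValueError(f"unknown function: {name}")
    schema = SCHEMAS[name]
    if set(args) != set(schema):
        raise ValueError(f"bad arguments for {name}: {sorted(args)}")
    for key, expected in schema.items():
        value = args[key]
        # bool is a subclass of int in Python, so reject it for int params.
        if not isinstance(value, expected) or (expected is int and isinstance(value, bool)):
            raise TypeError(f"{name}.{key} must be {expected.__name__}")
    return HANDLERS[name](**args)


print(dispatch('{"name": "set_alarm", "arguments": {"hour": 7, "minute": 5}}'))
```

Keeping the schema check outside the model means a malformed call fails loudly instead of triggering an unintended action, whatever the model's benchmark accuracy.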

New Benchmarks Target Multi-Step Agentic Reasoning

Standard LLM benchmarks are failing to capture agentic behavior, leading to a new wave of 'agent-first' evaluations. DABStep focuses on multi-step reasoning in data-centric tasks, where long-horizon planning remains a hurdle [huggingface/blog/dabstep]. Meanwhile, FutureBench tests an agent's internal world model and temporal reasoning [huggingface/blog/futurebench]. In formal logic, Kimina-Prover applies test-time RL search to models like DeepSeek-Math-7B, with AI-MO/kimina-prover reporting that search-based methods outperform traditional prompting by 15-20%. Additionally, the NPHardEval leaderboard tracks how models handle complexity classes; current rankings on NPHardEval show GPT-4o leading with 74.1%, while open-weights leaders like Llama-3-70B and Qwen2-72B score in the 55-62% range for combinatorial problems.

LeRobot and Pollen-Vision: Scaling Open Robotics

LeRobot is pushing robotics toward its 'ImageNet moment' by providing a standardized framework for sharing sensorimotor datasets. By encoding camera streams as compressed video, LeRobot achieves storage reductions of up to 100x, making it feasible to train on large volumes of high-frequency physical data [huggingface/lerobot]. On the perception side, Pollen-Vision provides a unified interface for zero-shot vision models and, for real-time control, integrates with ROS2 through dedicated wrappers [pollen-robotics/pollen-vision]. Reinforcement learning for physical interaction is also being explored in environments like Snowball Fight, which trains agents in competitive multi-agent scenarios.
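A quick back-of-the-envelope calculation shows why compression matters for sensorimotor datasets. The resolution, frame rate, and episode length below are illustrative assumptions, and 100x is the upper bound quoted above; the ratio LeRobot actually achieves depends on the codec and the footage.

```python
def raw_video_bytes(width: int, height: int, fps: int, seconds: int,
                    channels: int = 3) -> int:
    """Uncompressed size of 8-bit frames: one byte per channel per pixel."""
    return width * height * channels * fps * seconds


# Assumed example: one 640x480 RGB camera at 30 fps for a 60-second episode.
raw = raw_video_bytes(640, 480, fps=30, seconds=60)
compressed = raw / 100  # the best-case 100x reduction
print(f"raw: {raw / 1e9:.2f} GB, at 100x: {compressed / 1e6:.1f} MB")
```

One minute from a single camera comes to roughly 1.66 GB uncompressed versus about 16.6 MB at the quoted ceiling, and real robots often record from several cameras at once.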