Engineering the Autonomous Agent Stack

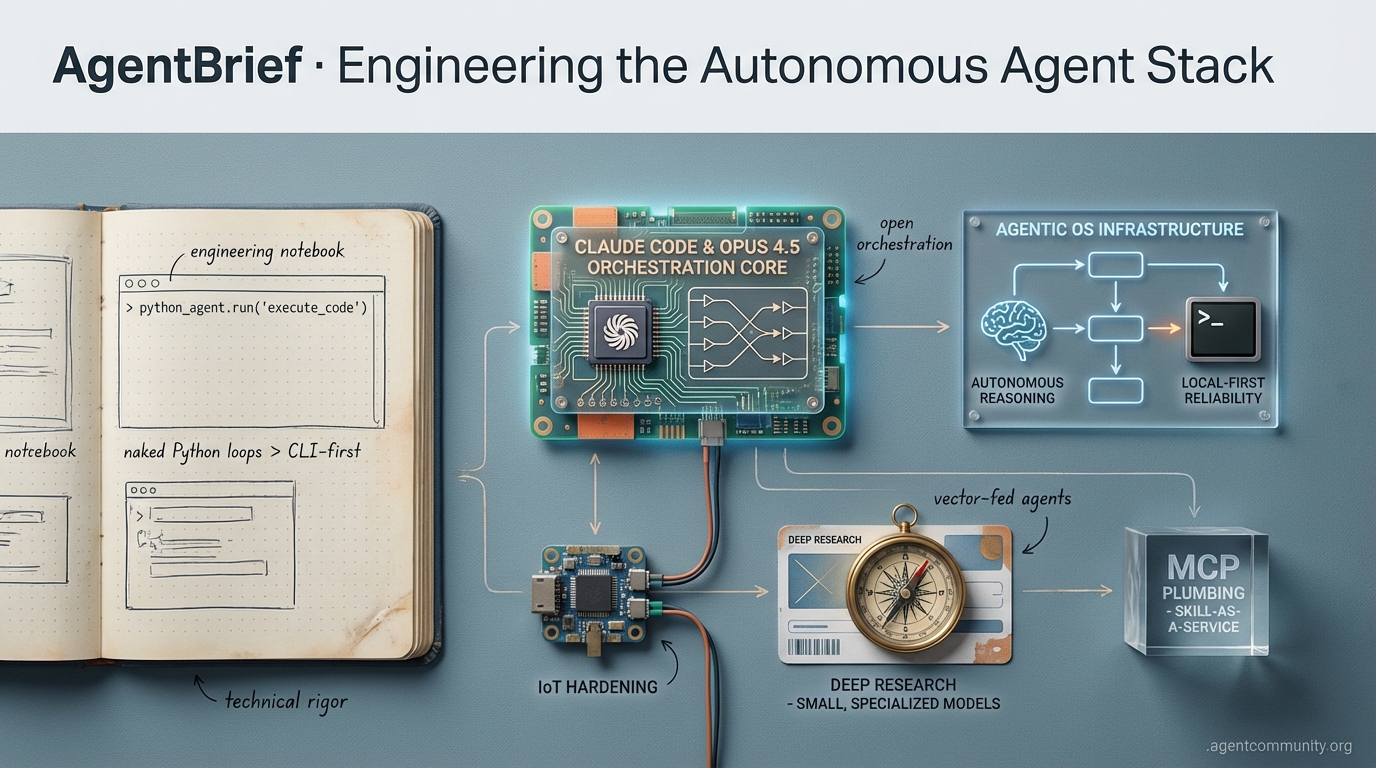

From naked Python loops to CLI-first workflows, the era of the 'vibe-check' agent is ending in favor of technical rigor.

AgentBrief for Dec 29, 2025

X Intelligence Pulse

From IoT pairing to sub-millimeter embroidery, agents are finally touching the physical world.

We are officially moving past the 'chatbot wrapper' era and into the 'agentic operating system' era. This week, we see Claude Code bridging the gap between local terminals and IoT hardware, while models like Step-DeepResearch prove that 32B parameters are the sweet spot for synthesis-heavy reasoning. The agentic web isn't just about software; it's about systems that can interpret a PDF manual to pair a light switch or stitch a pattern with sub-millimeter precision. For builders, the message is clear: focus on system-level orchestration and intent decomposition. The speed of 'vibe coding' is a powerful tool for rapid iteration, but the real winners will be those who can optimize the full stack—from token efficiency with tools like AgentInfer to robust MCP-based browser automation. We are no longer just writing code; we are architecting autonomy. Today's issue explores how these disparate threads—hardware control, deep reasoning, and system-level latency—are weaving together a new fabric for autonomous execution.

Claude Code & Opus 4.5: The New Orchestration Gold Standard

Claude Code, powered by the newly released Opus 4.5, has solidified its position as the premier tool for autonomous task execution within complex developer environments. The tool's capability extends far beyond simple text generation; it can now explore local networks and identify IoT devices like Lutron switches. As @karpathy demonstrated, the agent can autonomously handle hardware pairing by sourcing and interpreting PDF manuals, a feat that marks a significant leap in agentic utility. For creative builders, this translates to massive productivity gains; @rileybrown reported building internal FFMPEG video processing apps in just two prompts, showcasing a level of intent understanding that rivals human intuition.

The framework's secret sauce lies in its interactive planning capabilities, specifically the AskUserQuestionTool. This feature allows the agent to conduct deep developer interviews to refine specifications before a single line of code is executed, a process @trq212 identifies as a transformation for multitasking workflows. While some users like @cmglezpdev argue that Cursor remains faster for quick edits, the consensus among power users like @omarsar0 is that Claude Code's ability to close the loop on propose-execute-observe cycles makes it the superior choice for high-stakes agentic orchestration.

Despite the praise, the community remains vigilant regarding performance consistency. While @blizaine finds the native Opus 4.5 agent more cost-effective for complex tasks, @trq212 has acknowledged reports of potential model degradation, committing to investigate the underlying latency spikes. For now, Claude Code represents the most advanced bridge between high-level intent and real-world execution, effectively turning the terminal into an autonomous cockpit for the agentic web.

Beyond Retrieval: The Rise of Structured Deep Research Agents

A fundamental shift is occurring as agentic systems move from simple multi-hop retrieval toward full intent decomposition. The introduction of Step-DeepResearch, a 32B parameter model, signals a move toward synthesis-heavy agents that generate defensible arguments rather than just crawling the web. According to @omarsar0, this model achieves a 61.42 score on ResearchRubrics, outperforming much larger open-source alternatives. The economic implications are equally staggering; @rohanpaul_ai notes that a full, high-reasoning report now costs under 0.50 RMB, democratizing deep research capabilities for small-scale agent builders.

This new generation of 'thinking' models relies on an atomic capability architecture focusing on planning, seeking, and reflection. As @arshad83 points out, this allows agents to push beyond simple task execution into the realm of autonomous problem-solving. Supporting this, @EmergentMind reports that the model utilizes a 128K context window and rubric-based reinforcement learning to achieve monotonic gains up to 150B tokens. This high-context, long-chain reasoning is further validated by @Teknium, who observes models learning to generate over 10k tokens by extending beyond their initial training limits, proving that the limit of agentic reasoning is still being defined.

The 'Vibe Coding' Debate: Speed vs. Technical Debt

The agentic community is currently split over the 'vibe coding' paradigm, where speed and shipping are prioritized over traditional code quality. @svpino notes a growing respect for builders who use agents to bypass maintenance concerns in favor of rapid prototyping, while @rileybrown champions this as a vital skill for domain experts to build functional interfaces without deep technical knowledge. This is exemplified by a physiology student who built a $50,000 health app for just $700 using AI-assisted workflows, as highlighted by @svpino.

However, skeptics argue that this approach may lead to insurmountable technical debt. @yacineMTB emphasizes that AI tools should be aids for faster coding, not replacements for comprehension, warning that builders must still understand the code they deploy. Despite these concerns, @rileybrown predicts a future where companies specifically hire 'vibe-coders' for internal tools and creative workflows, signaling a permanent shift in how software is conceptualized and shipped in the agentic era.

AgentInfer Cuts Latency via System-Level Optimization

Real-world agent performance is increasingly being viewed as a system-wide engineering challenge rather than a model-specific one. 'AgentInfer' has emerged as a full-stack optimization system that boosts task completion speeds by 1.8x to 2.5x by eliminating redundant token generation. As @rohanpaul_ai explains, this approach reduces wasted tokens by over 50%, addressing the core latency issues that plague autonomous agents in production environments.

While system-level optimizations are critical, the community is also looking at small language models (SLMs) as a cost-effective alternative. @rohanpaul_ai notes that models like Phi-2 can match larger models in specific agentic tasks while running 15x faster. However, a counter-perspective from @rohanpaul_ai suggests that speed alone isn't a panacea, as energy consumption and complex bug-fixing still present significant hurdles for coding agents, indicating that the path to 'instant' agents requires both hardware and software breakthroughs.

Humanoid Agents Achieve Sub-Millimeter Physical Precision

Robotics is entering a 'physical world grokking' phase as humanoid agents demonstrate unprecedented motor control. TARS Robotics recently showcased a humanoid performing two-handed embroidery with sub-millimeter accuracy, a breakthrough that @BrianRoemmele argues was previously thought impossible for non-industrial robots. This achievement suggests that AI-driven control systems are finally catching up to hardware capabilities, potentially redefining precision tasks in manufacturing and healthcare as discussed by @BrianRoemmele.

Despite the excitement, the path to generalized physical intelligence remains steep. @DrJimFan points out that achieving 'chimpanzee-level' agility is still the grand challenge, even if embroidery is solved. Furthermore, @DrJimFan warns that real-world deployment faces massive hardware bottlenecks and iteration speed constraints. To combat this, @BrianRoemmele is looking toward neural network pruning to optimize these models for edge robotics, aiming to bring high-precision autonomy to the local level.

Quick Hits

Agent Frameworks & Orchestration

- Self-hosted agentic app builders are surging as developers demand full data ownership, per @tom_doerr.

- Dexter agents now utilize a reflection loop to autonomously replan missing steps, according to @virattt.

Tool Use & MCP

- The 'puppeteer MCP' is the new recommendation for reliable browser-based agent automation by @ivanleomk.

- Trace MCPs are becoming essential for debugging complex, trigger-based agent workflows, notes @AlexReibman.

Agentic Infrastructure

- Nvidia’s $20B Groq deal is viewed by @rohanpaul_ai as a talent grab for specialized inference expertise.

- OpenAI is hiring a $555k Head of Preparedness to manage risks in agentic self-improvement, reports @rohanpaul_ai.

Models for Agents

- LangChainJS unified Gemini integrations to simplify the agent authentication flow, notes @LangChainAI.

- Grassmann flows are proposed as an attention-free alternative for linear-scaling agent sequences by @rohanpaul_ai.

Reddit Developer Discourse

From specialized routers beating GPT-4o to the 'naked Python' rebellion, the agentic stack is being rebuilt for engineering rigor.

Today’s issue marks a pivotal shift in the agentic stack: we are moving from 'one model to rule them all' toward a highly specialized, local-first architecture. For months, the consensus was that frontier models would handle everything from high-level reasoning to basic routing. That is changing. With the release of Plano-Orchestrator, we are seeing small, 3B-parameter models outperform GPT-4o in specific orchestration tasks, proving that efficiency often beats raw scale in production logic loops. This move toward specialization is mirrored in the developer experience. The 'Naked Python' movement is gaining steam as builders grow weary of the 'abstraction tax' imposed by heavy frameworks. They are opting for explicit control and state management, prioritizing debuggability over rapid prototyping. Meanwhile, the Model Context Protocol (MCP) is maturing from a connectivity standard into a commercial ecosystem, with new frameworks like FrontMCP and monetization layers like Agent37 providing the infrastructure for 'skill-as-a-service' models. As we scale these autonomous systems, the 'stupid tax' of token bloat and the looming threat of indirect prompt injections are the new hurdles. Whether it is adopting TOON for data efficiency or hardening browsers with runtime governance, the message is clear: the age of the 'vibe-check' agent is over. Engineering rigor has arrived.

Specialized Routers Challenge Frontier Models in Agentic Workflows r/OpenAI

The era of forcing general-purpose LLMs to handle complex agentic routing may be ending. The Katanemo research team recently launched Plano-Orchestrator, a family of open-source models (A3B) specifically designed for fast multi-agent orchestration. According to u/AdditionalWeb107, these models are optimized for privacy and speed, claiming to outperform GPT-4o in routing accuracy and latency while being significantly smaller.

The model family includes specialized versions for plan generation and execution, aiming to replace heavy frontier models for internal logic loops. This shift toward specialized 'router' models addresses the latency and cost issues inherent in using frontier models for simple planning. Practitioners are increasingly looking for 'local-first' resilient orchestration, as seen with projects like Keystone CLI r/AgentsOfAI, which uses Bun and SQLite to manage multi-step agent workflows declaratively via YAML. This approach allows developers to move away from heavy cloud infrastructure for testing logic loops while maintaining execution robustness, as highlighted in community discussions on r/LocalLLM.

Is LangChain Becoming Production Tech Debt? The Shift to 'Naked Python' Loops r/AgentsOfAI

A growing segment of the developer community is advocating for a return to 'Naked Python Loops' over heavy abstractions like LangChain to avoid the 'abstraction tax.' In a viral r/AgentsOfAI discussion, u/Glum_Pool8075 argues that debugging complex chains often involves digging through 4+ layers of library code to find hidden default prompts. For production systems where determinism and transparency are paramount, builders are increasingly finding that manual control loops offer better resilience than framework-locked logic.

However, the move toward 'naked' loops does not mean abandoning all structure; rather, it represents a shift toward explicit state management. Builders are still seeking standardized patterns for Human-in-the-Loop (HITL) workflows. u/No-Conversation-8984 highlights that for sensitive financial or legal agents, state persistence is critical to ensure an agent 'pauses' before executing an API call. The debate is evolving from 'which framework to use' to 'where the framework ends and the developer's control begins,' with many opting for lightweight libraries like Pydantic or LangGraph to manage state without sacrificing the clarity of native Python code.

The Rise of MCP Monetization and Production Frameworks r/ClaudeAI

The Model Context Protocol (MCP) is rapidly shifting from a connectivity standard into a commercial ecosystem as developers bridge the 'monetization gap.' Tools like Agent37 now allow creators to charge for Claude skills without requiring users to manually configure servers, addressing a critical pain point. As highlighted by u/enthusiast_bob, there are currently over 1,000 MCP servers on GitHub with zero native monetization paths, creating a massive opening for 'skill-as-a-service' wrappers.

Architectural reliability is also advancing through the 'council' pattern. The Owlex MCP server, as detailed in r/LocalLLaMA, enables Claude Code to consult a 'council' of agents (Codex, Gemini, and OpenCode) in parallel to verify code changes, significantly reducing hallucination rates. To support these complex deployments, the FrontMCP framework r/mcp is standardizing production-ready development using TypeScript and Zod schemas, offering enterprise-grade features like Streamable HTTP and session management out of the box.

The Hidden Tax of Agentic GraphRAG: Token Bloat and the TOON Alternative r/learnmachinelearning

The transition from simple vector search to GraphRAG and agentic loops is creating a 'stupid tax' for the unprepared. u/No-Conversation-8984 warns that moving to hybrid architectures can increase token costs by 10x due to the recursive nature of multi-hop queries and the persistent loss of context across loops. To mitigate this, developers are adopting aggressive local deduplication strategies. Notably, u/TheRealAnant developed a CLI using Polars + FAISS to process 50GB+ of data locally, which saves an estimated $500/mo in API costs.

Simultaneously, the industry is exploring new data formats like Token-Optimized Object Notation (TOON) to combat the inherent overhead of JSON. TOON claims a 27-35% reduction in token usage and 35% smaller file sizes. While benchmarking by u/Ok-Particular-5280 indicates that standard models currently process JSON faster due to massive training bias, TOON has reportedly triggered 'deeper analytical reasoning' in models like Gemini 1.5 Pro, suggesting alternative serialization formats may offer cognitive benefits that outweigh raw latency trade-offs.

Prosumer Hardware and Q8 KV Cache: The New Standard for Local Agentic Workflows r/LocalLLaMA

The 'local LLM' movement is rapidly transitioning from experimental demos to high-performance agentic systems. Builders are now prioritizing hardware that supports massive context, specifically targeting the RTX 6000 Blackwell and MacBook Pro M4 Pro/Max configurations with 24GB to 128GB of unified memory. A critical bottleneck identified in long-context sessions is the KV cache; u/Adventurous-Gold6413 notes that Q8 KV cache quantization is becoming the technical standard to maintain tool-calling accuracy in vision models like GLM4.6V.

To manage the storage overhead of these high-fidelity models, innovative solutions are emerging to solve the 'duplicate GGUF' problem. u/tleyden proposed a local model registry to share weights between applications like Ollama and Jan.ai, effectively eliminating redundant 4GB+ downloads. Simultaneously, specialized hardware startups like Tiiny AI recently demonstrated a one-shot demo of their specialized AI computer, which allows aging PCs to run 120B parameter models at speeds up to 19 tokens/s, significantly lowering the barrier to entry for local, high-context reasoning.

Hardening AI Agents: From Runtime Governance to Prompt Injection Firewalls r/ArtificialInteligence

As agents transition from chatbots to autonomous executors, security has emerged as the primary bottleneck for enterprise deployment. OpenAI is reportedly hardening its Atlas agentic browser through intensive automated red teaming to mitigate sandbox escapes. To address these vulnerabilities, u/jdpahl122 introduced Graedin Cline, a self-hosted 'prompt injection firewall' proxy designed to intercept jailbreak attempts before they reach the model.

Beyond input filtering, the industry is moving toward 'Runtime Governance.' The SAFi (Self-Alignment Framework Interface) represents a shift toward 'System 2' reasoning, where a secondary governance layer monitors agent judgment in real-time to ensure compliance with ethical and safety constraints r/ArtificialInteligence. For multi-agent systems with filesystem access, latest red-teaming techniques focus on Indirect Prompt Injection (IPI), where malicious payloads are hidden in files or logs that the agent is likely to parse, potentially leading to unauthorized file deletions or privilege escalation, as noted by u/Perfect-Cricket6506.

Discord Engineering Logs

Anthropic's CLI-first approach challenges the IDE status quo as developers grapple with reasoning decay in flagship models.

We are witnessing a divergence in how agentic workflows are built. On one hand, we have the 'vibe coders' sticking to the polished GUIs of Cursor; on the other, a 'serious engineering' movement is retreating to the terminal with Claude Code. This isn't just a preference—it's a reaction to the orchestration friction that occurs when models have too much context and not enough constraint. Simultaneously, the community is sounding the alarm on 'reasoning decay' in models like Opus 4.5. Whether it's aggressive quantization or RLHF steering, the 'laziness' is real for power users. This issue explores the tools attempting to reclaim that lost performance, from Tencent's diffusion-based reasoning to local memory hacks for Ollama. We're moving past the honeymoon phase of agentic AI. Now, the focus is on hardening the infrastructure—dealing with breaking changes in n8n and the 'hallucination ceiling' in production-ready AI receptionists. The agentic web is getting its plumbing installed and the results are predictably messy but promising.

CLI vs. IDE: Claude Code and the Rise of Terminal-First Agentic Loops

The landscape for agentic development is shifting as developers weigh the merits of Claude Code against established leaders like Cursor. While Cursor's 'Composer' mode remains a staple for GUI-based multi-file edits, the release of Claude Code has popularized a terminal-first approach that provides a tighter feedback loop for shell execution and autonomous testing @anthropicai. This move toward CLI-based tools is driven by a desire for 'serious coding' control over the 'vibe coding' abstractions found in IDEs. Newer entrants like Antigravity are gaining traction by supporting high-reasoning models like Claude 3.7 Sonnet, which features an extended 'thinking' mode to mitigate the logic errors seen in previous iterations @alexalbert__. However, orchestration challenges remain a significant hurdle; users report that excessive context often 'confuses' models, leading to agents stashing changes or switching git branches unexpectedly, as noted by bearish. Industry experts like Andrej Karpathy have highlighted that while Cursor is excellent for 'vibing' through UI changes, the CLI-based agentic loop is often more robust for deep backend refactoring and complex codebase navigation @karpathy. Join the discussion: discord.gg/cursor

Frontier Model Volatility: Developers Report Reasoning Decay in Opus 4.5

Recent developer feedback indicates a significant perceived decline in the reasoning capabilities of Opus 4.5, with users like doctorslugworth reporting 'extremely stupid assumptions' in logic-heavy workflows. This sentiment is mirrored on social platforms where @AI_Explorer_99 noted a sharp increase in hallucinations during Python scripting tasks. Speculation suggests that 'penny pinching' updates—likely involving model quantization or MoE (Mixture of Experts) routing to smaller sub-models—are degrading the nuanced reasoning required for complex agentic planning. While MMLU benchmarks might remain high, @DrModelEval argues that aggressive RLHF steering is creating 'lazy' model behavior that prioritizes brevity over accuracy. In response, a growing segment of power users is migrating to Gemini 3 Pro, citing its 2 million token context window and superior stability for large-scale data synthesis @DevLeadMax. This transition underscores a broader debate on whether frontier models are being 'dumbed down' for mass-market efficiency at the expense of high-fidelity utility. Join the discussion: discord.gg/anthropic

Reliability Over Benchmarks: The Speed-Accuracy Tradeoff in AI Receptionists

Builders are finding that benchmark scores don't always translate to reliable production behavior for specific roles like AI receptionists. While GPT-4 mini remains a popular baseline, a growing cohort of developers is pivoting to Gemini 3 Flash or Grok 4.1 Fast to achieve faster inference times and lower latency 3xogsavage. Recent field tests indicate that Gemini 3 Flash maintains a sub-200ms Time to First Token (TTFT), which is essential for maintaining natural conversation flow in voice-based agents @ai_pioneer. The primary challenge cited by practitioners like i_am_dom is the 'hallucination ceiling,' where models start making up details when tasks exceed a certain complexity. For production agents handling appointments and policy following, Grok 4.1 Fast is emerging as a strong contender due to its 95%+ success rate in complex tool-use scenarios @dev_metrics. Ultimately, the choice between these models often comes down to the specific 'reliability-to-latency' ratio required for the use case. Join the discussion: discord.gg/n8n

Perplexica vs. Perplexity: The Rise of Open-Source Search Agents

As Perplexity Pro users report increasing rate limits and model 'shadowbanning' where the 'Best' model defaults to smaller versions during peak times logickus, Perplexica has emerged as a high-performance open-source alternative. Built to mimic the Perplexity UI while leveraging SearxNG for privacy-focused web crawling, Perplexica allows users to bypass proprietary restrictions by self-hosting with tools like Docker @ItzCrazyKns. While Perplexity's Sonar-Large models currently maintain a lead in complex reasoning tasks, Perplexica bridges the gap by allowing integration with local LLMs via Ollama, providing a 'no-cost' search experience for those with local compute @mcp_dev. Furthermore, the developer community is rapidly adopting MCP (Model Context Protocol) servers to give agents like Claude Desktop direct access to Perplexica's search capabilities, effectively replacing the need for $20/month Pro subscriptions for automated workflows @tech_pioneer. Join the discussion: discord.com/invite/perplexity

Tencent Debuts WeDLM-8B-Instruct: Diffusion-Based Reasoning Outpaces Qwen Baselines

Tencent has officially released WeDLM-8B-Instruct on Hugging Face, introducing a diffusion-based language model architecture specifically optimized for mathematical reasoning. According to technical documentation, the model achieves a 3-6% performance increase in inference speed compared to vLLM-optimized implementations of the Qwen series Tencent. Unlike traditional autoregressive models that generate tokens sequentially, WeDLM leverages diffusion techniques to bypass standard inference bottlenecks, making it a highly competitive candidate for local agentic workflows. While initial community reports from sources like TrentBot suggest comparisons against a 'Qwen3' architecture, industry analysts note that verified benchmarks currently prioritize the Qwen2.5-8B-Instruct baseline. This model is particularly significant for developers requiring high-performance reasoning in latency-sensitive environments, where the 8B-parameter size allows for efficient deployment on consumer-grade hardware without sacrificing iterative reasoning depth.

n8n v2 Migration: Security Hardening Disables Core Nodes

Developers transitioning to n8n v2 are encountering significant breaking changes designed to harden system security, most notably the default disabling of the Execute Command and Local File Trigger nodes n8n Docs. To re-enable these features, self-hosted users must now explicitly update their environment variables, a shift that skynetwhale notes has effectively disabled critical infrastructure in thousands of production workflows. This change is part of n8n's broader transition toward a Sustainable Use License, which aims to balance open-source accessibility with enterprise-grade security standards n8n Blog. Beyond infrastructure, the update has intensified 'JSON headaches' for agent orchestration; when processing unstructured data like CV text, dynamic variables often fail due to unencoded characters or missing quotes jabbson. Technical experts like zunjae recommend utilizing the .toJsonString() method within expressions to ensure output remains schema-compliant for downstream HTTP requests. Join the discussion: discord.gg/n8n

Optimizing Ollama Memory Offloading for RTX-Series Agents

Local hosting remains a hurdle for agentic systems due to inefficient memory management. Users report that Ollama often fails to offload to GPU correctly, resulting in 1 tps speeds that make agents unusable floxxy0. This performance bottleneck is frequently tied to the GGUF 'split' behavior where layers overflow into system RAM @ollama. The consensus is that models like DeepSeek-R1-32B often spill over into system RAM even when VRAM appears available, causing significant performance degradation sandybay. To fix this on RTX 30/40 series cards, developers recommend forcing the OLLAMA_NUM_PARALLEL variable or ensuring drivers are updated to support the latest CUDA toolkit @NVIDIA. Recommendations for local setups include sticking to 12B-14B parameter models like Gemma 3 or Qwen2.5-coder to stay within VRAM limits socialnetwooky. Join the discussion: discord.gg/ollama

LMSYS Arena Expands into Video Generation and Spatial Intelligence

The evaluation landscape is rapidly shifting toward multi-modal capabilities as LMSYS Arena officially scales its video generation leaderboard. While the initiative aims to standardize video model rankings, early rollout phases have been hampered by UI bugs and strict rate limits, as noted by users like chamaate and @lmsysorg. Community feedback suggests that current features often prioritize image-to-video workflows over direct video editing, prompting calls for more granular oversight. Simultaneously, there is a significant push for spatial reasoning benchmarks to quantify how effectively AI agents navigate and manipulate 3D environments. This development, discussed by researchers such as ijedmeer2417, is critical for the next generation of 'Spatial Intelligence' models. These benchmarks are expected to become the industry standard for evaluating agents intended for robotics and physical world interaction, as highlighted in the LMSYS Changelog. Join the discussion: discord.gg/lmsys

HuggingFace Research Hub

Hugging Face's smolagents and the open deep research stack are moving us from chat interfaces to autonomous code execution.

We have spent the last two years trying to force LLMs to speak in brittle JSON schemas, and frankly, it has been a token-tax nightmare. Today’s issue highlights a massive vibe shift toward 'code as action.' Hugging Face’s smolagents is leading this charge, proving that a lean, 1,000-line library can outperform bloated frameworks by letting agents simply write and execute Python. This isn't just about efficiency; it's about reliability. When agents write code, they bypass the ambiguity of structured prompting, leading to higher success rates on reasoning benchmarks like GAIA 2.

But code alone isn't enough; we need the 'brain' to drive the logic. We are seeing a democratization of high-level reasoning through the Open Deep Research initiative and the release of Hermes 3, which prioritizes 'neutral alignment' over restrictive guardrails. Whether it is the Model Context Protocol (MCP) standardizing how these agents talk to tools, or the rise of pixel-to-action models for OS-level automation, the infrastructure for the Agentic Web is finally hardening. We are no longer just building faster chatbots; we are building autonomous researchers, operators, and engineers capable of navigating both digital and physical worlds.

Code as Action: Why smolagents is Winning

Hugging Face has introduced smolagents, a minimalist library that shifts the agentic paradigm from JSON-based tool-calling to a 'code as action' approach. By allowing agents to write and execute Python code directly, the framework significantly reduces the 'token tax' associated with complex JSON schemas. Benchmarks on the GAIA leaderboard demonstrate that code-based agents consistently outperform their tool-calling counterparts; specifically, the smolagents release post highlights that code-centric agents can achieve higher success rates on reasoning tasks while requiring fewer iterations.

The core library remains remarkably lean, containing roughly 1,000 lines of code, providing a lightweight alternative to more abstracted frameworks like LangChain. The framework's versatility is further expanded through multimodal integration, as detailed in smolagents can see, which enables Vision-Language Models (VLMs) to navigate web interfaces and analyze visual data. This minimalist power is already being adopted for specialized tools like the GitHub PR review agent and the Spotify genre analyzer.

Open-Source Deep Research: Democratizing Discovery

Hugging Face is democratizing 'Deep Research' capabilities through its Open-source DeepResearch initiative. Built upon the smolagents library, this framework allows agents to break free from closed ecosystems by using a transparent planning loop that performs iterative searches and evaluates source credibility. According to community benchmarks, models like Qwen2.5-72B-Instruct and DeepSeek-R1 have emerged as the most effective engines for these agents, providing the necessary reasoning depth to manage complex search spaces.

Practical community implementations are rapidly expanding, such as MiroMind and the specialized ScholarAgent. These tools leverage multi-step reasoning to avoid hallucinations during long-running tasks, often outperforming basic RAG (Retrieval-Augmented Generation) by actively verifying facts across multiple web sources. By open-sourcing the planning logic, developers can finally inspect and refine the core decision-making processes of high-stakes research agents.

Hermes 3: The Frontier Model for Agentic Workflows

Nous Research has released Hermes 3, a comprehensive family of models fine-tuned from Meta's Llama 3.1 and 3.2. The suite ranges from edge-capable 3B models to the massive 405B flagship, which represents the first full-parameter fine-tune of the 405B base model. A standout feature is its performance on the Berkeley Function Calling Leaderboard (BFCL), where it demonstrates superior tool-calling accuracy compared to standard Llama 3.1 Instruct models.

For agent builders, Hermes 3 introduces a 'neutral alignment' philosophy, prioritizing objective role-play and instruction following over restrictive guardrails. This makes the suite an ideal choice for the 'orchestrator' role in multi-agent systems, where the 70B and 405B variants provide the logic to manage sub-agents and execute precise tool calls using advanced XML tagging for structured data extraction.

MCP: Standardizing the Agentic Toolbelt

The Model Context Protocol (MCP) is rapidly becoming the standard for connecting agents to data sources. Hugging Face has championed this shift with smolagents, which prioritizes native MCP support. As highlighted by Aymeric Roucher, developers can build a functional MCP-powered agent in just 50 lines of code. The ecosystem is expanding through standardized servers like Brave Search and Google Search, which are the most common integrations for performing real-time web research. This versatility was on full display during the Agents-MCP-Hackathon, where participants built over 20 unique tools, proving that the protocol significantly lowers the barrier to creating production-ready workflows.

Beyond Chat: GAIA 2 and the Evolution of Evaluation

The evaluation of AI agents is shifting from text reasoning to real-world task execution. Hugging Face recently released GAIA 2 and ARE, introducing Agentic Reasoning Evaluation (ARE). This utilizes Docker-based sandboxed environments to test agents on tasks requiring real-world tool use, such as interacting with a terminal. This follows news that their Transformers Code Agent topped GAIA leaderboards with a 35% success rate on the hardest tasks. Complementing these efforts is ScreenSuite, providing over 3,500 tasks for GUI agents, and DABStep for data agents, which measures step-by-step reasoning efficiency.

Scaling Inference: Test-Time RL and Formal Reasoning

The integration of Reinforcement Learning (RL) at test-time marks a shift toward dynamic 'thinking' processes. AI-MO introduced Kimina-Prover, which utilizes test-time search to solve complex formal proofs. While this improves accuracy, it introduces a computational overhead of 10x-50x compared to standard decoding. To mitigate costs, ServiceNow-AI developed Apriel-H1, which distills these reasoning traces into more efficient architectures. This evolution is supported by massive datasets like nvidia/multilingual-reasoning-v1, providing 6 million samples to ensure global-ready logic.

Pixel-to-Action: The Rise of OS-Native Desktop Agents

The landscape of GUI automation is shifting toward 'pixel-to-action' models. ScreenEnv provides a standardized sandbox where agents can master OS-level navigation through trial and error. This is supported by Smol2Operator, which utilizes a normalized coordinate system (0-1000) to map visual features to precise UI elements regardless of monitor resolution. Furthermore, H Company has introduced Holo1, a VLM architecture designed to power the Surfer-H agent. Holo1 operates on a pure pixel paradigm, bypassing the need for accessibility trees and making it robust enough to navigate legacy software.

LeRobot: Scaling Physical Intelligence

The LeRobot project, led by Remi Cadene, is establishing the 'ImageNet of Robotics' by standardizing community-driven datasets. To handle sensor data, they recommend HEVC (H.265) encoding to achieve 10x to 100x compression while maintaining temporal consistency. On the hardware side, Pollen-Vision enables zero-shot object detection for robots to interact with novel environments. This vision stack was recently showcased in the Snowball Fight project, where a Reachy robot utilized real-time vision for physical play.