Scaling the Agentic Execution Layer

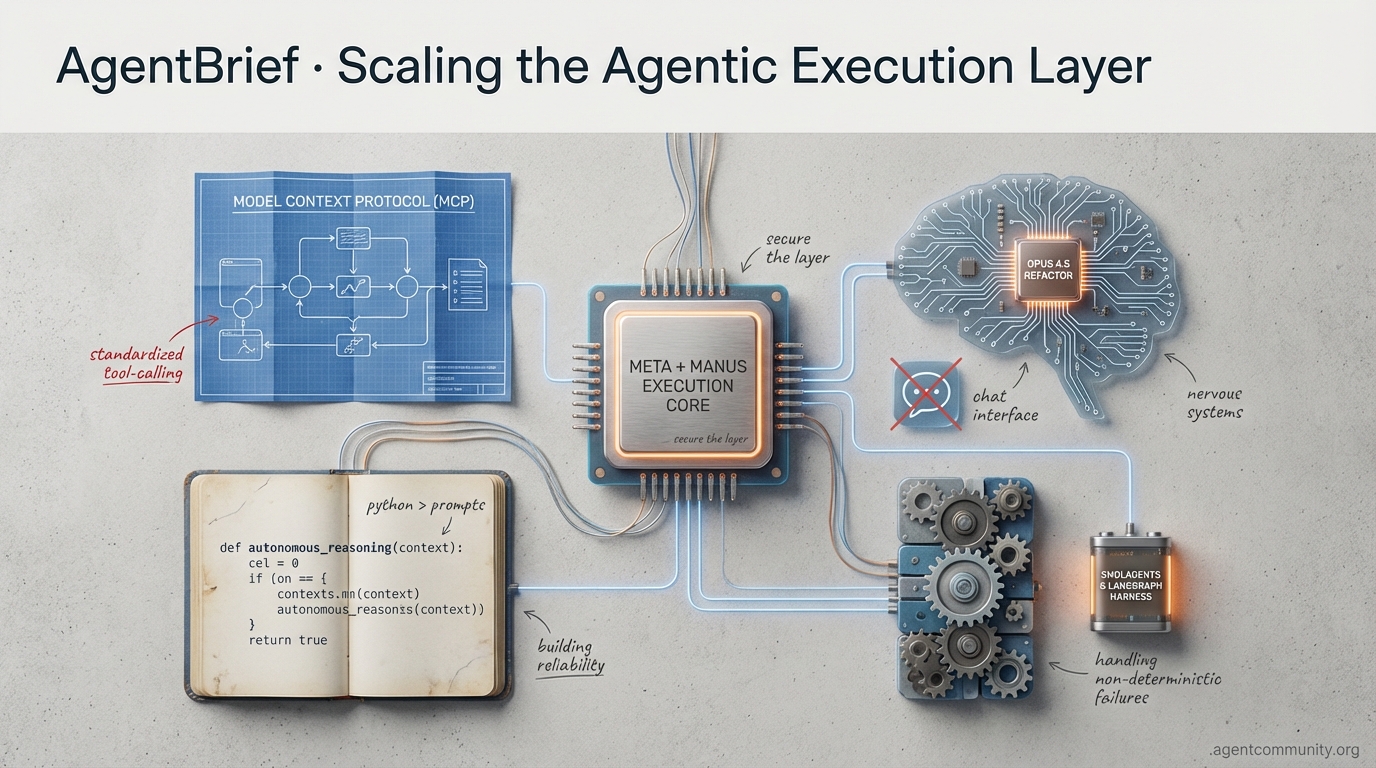

Meta's $2B bet on Manus and the rise of the Model Context Protocol signal a hard pivot from chat interfaces to autonomous execution layers.

AgentBrief for Dec 31, 2025

X Feed Intel

Chat is dead; the execution layer just became the most expensive real estate in tech.

The era of the 'helpful chatbot' is officially over, replaced by a frantic arms race for the execution layer. Meta’s $2B acquisition of Manus isn't just a talent grab; it’s a structural pivot toward 'agent habitats', the sandboxed environments where AI actually does work instead of just talking about it. For those of us building in the agentic web, this validates a core thesis: the value has shifted from the model weights to the orchestration and execution infrastructure. We are seeing this play out simultaneously in the IDE, where Claude Opus 4.5 is turning 'vibe coding' into a high-throughput agentic loop that handles multi-file refactors while we sleep. But as we scale, we're hitting the 'planning wall': the 25-second planning latency that makes real-time agency feel sluggish. Whether it's through plan-caching research or NVIDIA's Blackwell-powered inference moats, the focus for 2026 is clear: speed, autonomy, and the ability to link agents across ecosystems. If you aren't building for a world where agents talk to other agents, you're building for the past.

Meta's $2B Manus Play: Securing the 'Super Agent' Execution Layer

Meta is aggressively pivoting toward autonomous systems with its reported $2B+ acquisition of Singapore-based startup Manus, a move signaled by @ace_leverage and @HackerproofHQ as a 'super agent' play. Unlike previous AI acquisitions focused on core LLM research, the Manus deal is a strategic grab for 'agent habitats'—the specialized infrastructure required for code execution, storage, and computer use. As @amasad observed, this acquisition provides Meta with the advanced execution-layer engineering necessary to move beyond simple chat and into long-horizon, real-world utility. This technology is expected to be deeply integrated into Meta's consumer stack, potentially automating complex data analysis and user interactions across WhatsApp and Instagram @abuchanlife.

Industry analysts see this as a game-changing first-mover advantage that puts immense pressure on competitors. @EMostaque suggests this could force Microsoft into defensive M&A, perhaps targeting independent agents like GenSpark to keep pace. The sheer scale of Manus’s growth—reaching an estimated $100M-$125M ARR in under a year—underscores why Meta is betting on scalable agentic solutions over basic conversational AI @ScoutThisForMe. While Manus will reportedly maintain its independent subscription service for now, the long-term play is clearly about dominating the agentic infrastructure space through the Singapore team's unique expertise in autonomous scaling @ClareAdvisors.

Claude Opus 4.5 and the Rise of the Structured Agentic Loop

The release of Claude Opus 4.5, paired with Claude Code, has fundamentally shifted the developer experience from simple autocomplete to full-scale autonomous feature development. According to @theo, this marks a disruption in how code is built, with agents now capable of handling intricate, multi-file codebase modifications. Builders like @simonw are already using these agentic planning modes to construct low-level logic from scratch, such as Python-based JavaScript interpreters, demonstrating a level of precision that exceeds previous autonomous coding benchmarks @elvis.

What was once dismissed as 'vibe coding' is now maturing into a rigorous paradigm where the 'agentic loop' optimizes code for performance in real-time. Early data cited by @prinz suggests teams using Opus 4.5 are seeing up to 220% mean productivity improvements. However, the community remains cautious; while Opus 4.5 excels in agentic workflows, @mattshumer_ notes that competitors like GPT-5.2 may still hold an edge in specific engineering niches. Furthermore, @YoshikK warns that despite the hype, hallucinations persist, meaning the human role is shifting from 'writer' to 'agent orchestrator' who must still verify outputs effectively @PrajwalTomar_.

CrewAI Unlocks Native Agent-to-Agent (A2A) Orchestration

CrewAI has introduced Agent-to-Agent (A2A) orchestration, a critical milestone for enterprise-scale agentic systems. As explained by @joaomdmoura, this allows agents to coordinate with external systems and other agents not natively built in CrewAI, utilizing the Model Context Protocol (MCP) to maintain structured control. This isn't just theoretical; a Fortune 500 CPG firm is already using this to orchestrate ServiceNow agents, proving the framework's readiness for deterministic, high-stakes environments @joaomdmoura.

This shift toward 'interconnected agency' is viewed as a way to manage the proliferation of corporate AI assistants without losing oversight. While some developers worry about the complexity of these multi-layered interactions, @joaomdmoura emphasizes that features like persistent state flows and multimodal support are essential for future-proofing. By integrating MCP, CrewAI is positioning itself as the connective tissue for the broader agentic web @joaomdmoura.

AgentReuse Tackles the 25-Second Planning Bottleneck

Research into agentic efficiency has identified a massive hurdle: complex task planning can consume over 25 seconds, accounting for nearly 30% of total processing time in large datasets @omarsar0. To solve this, the 'AgentReuse' mechanism proposes caching previous plans and using semantic similarity for retrieval, significantly cutting redundant computation. This strategy aims to bring real-time responsiveness to multi-agent workflows that are currently bogged down by repetitive reasoning cycles @omarsar0.
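The caching idea can be sketched in a few lines. This is a minimal illustration, not the AgentReuse implementation: the `PlanCache` class, the 0.8 threshold, and the toy bag-of-words `embed` function are all assumptions made for the example, and a real system would swap in a proper sentence encoder.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy bag-of-words 'embedding' (assumption: stands in for a real encoder)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


class PlanCache:
    """Hypothetical cache: stores (task embedding, plan) pairs."""

    def __init__(self, threshold=0.8):
        self.threshold = threshold
        self.entries = []

    def lookup(self, task):
        """Return the most similar cached plan, or None below the threshold."""
        query = embed(task)
        best_score, best_plan = 0.0, None
        for vector, plan in self.entries:
            score = cosine(query, vector)
            if score > best_score:
                best_score, best_plan = score, plan
        return best_plan if best_score >= self.threshold else None

    def store(self, task, plan):
        self.entries.append((embed(task), plan))


def plan_with_cache(task, cache, planner):
    """Reuse a cached plan when a similar task was seen; otherwise plan and cache."""
    cached = cache.lookup(task)
    if cached is not None:
        return cached, True
    plan = planner(task)  # the slow call the cache is meant to avoid
    cache.store(task, plan)
    return plan, False
```

With this sketch, "book a flight to Paris" followed by "book a flight to Berlin" hits the cache (four of five tokens match), while an unrelated task falls through to the planner. The threshold is the key tuning knob: too low and agents reuse plans that do not fit, too high and the cache never fires.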

Simultaneously, the 'RepoNavigator' study suggests that simplicity often beats complexity in agent toolsets. By using a single 'jump' tool for symbol navigation, agents outperformed those using multi-tool architectures @omarsar0. This move toward minimalist, execution-aware tools is gaining traction as developers realize that more tools often mean more failure points @omarsar0.
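To make the single-tool idea concrete, here is a rough sketch of what a 'jump' tool could look like for Python sources. It is an assumption-heavy illustration rather than the RepoNavigator tool itself: `build_symbol_index` and `jump` are hypothetical names, and the index is built with the standard-library `ast` module instead of whatever resolver the study uses.

```python
import ast


def build_symbol_index(sources):
    """Map each function/class name to (path, line) of its first definition.

    `sources` is a dict of {file path: source code}; a real tool would read
    files from the repository instead.
    """
    index = {}
    for path, code in sources.items():
        for node in ast.walk(ast.parse(code)):
            if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef, ast.ClassDef)):
                index.setdefault(node.name, (path, node.lineno))
    return index


def jump(symbol, index):
    """The agent's only navigation tool: symbol name in, definition location out."""
    if symbol not in index:
        return f"symbol '{symbol}' not found"
    path, line = index[symbol]
    return f"{path}:{line}"
```

The appeal of this shape is that the agent never has to choose between grep, file listing, and tree walking; every navigation step is the same call with a different symbol name.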

Inference Wars: NVIDIA Blackwell Dominates Agentic Workloads

NVIDIA's Blackwell (GB200) is pulling ahead of AMD’s MI355X in the race for inference efficiency, particularly for interactive agentic tasks. Benchmarks shared by @rohanpaul_ai show the GB200 delivering up to a 24x performance edge per GPU at high interactivity levels. Despite a higher per-GPU cost, the rack-scale integration via NVLink provides superior unit economics for developers running high-throughput reasoning models @rohanpaul_ai.

As the industry pivots from training to inference, memory bandwidth has become the primary choke point for production agents. @rohanpaul_ai notes that transformer sizes are outgrowing accelerator memory, making NVIDIA’s high-bandwidth solutions a critical moat. While AMD remains a potential cost-effective alternative, current data suggests they are lagging significantly in the tokens-per-second metrics required for seamless agentic loops @rohanpaul_ai.
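A back-of-the-envelope bound shows why bandwidth is the choke point. During single-stream decoding, a dense model has to stream every weight from memory for each generated token, so tokens per second cannot exceed memory bandwidth divided by model size in bytes. The 70B parameter count and the 8 TB/s bandwidth below are illustrative assumptions, not figures from the cited benchmarks, and the estimate ignores KV-cache traffic, batching, and sparse (MoE) activation.

```python
def decode_tokens_per_second(params_billion, bytes_per_param, bandwidth_tb_s):
    """Upper bound on batch-1 decode speed for a dense, bandwidth-bound model."""
    weight_bytes = params_billion * 1e9 * bytes_per_param
    bandwidth_bytes_per_s = bandwidth_tb_s * 1e12
    return bandwidth_bytes_per_s / weight_bytes


# Hypothetical accelerator with 8 TB/s of memory bandwidth.
fp16 = decode_tokens_per_second(params_billion=70, bytes_per_param=2, bandwidth_tb_s=8)
fp8 = decode_tokens_per_second(params_billion=70, bytes_per_param=1, bandwidth_tb_s=8)
print(f"FP16 ceiling: {fp16:.1f} tok/s, FP8 ceiling: {fp8:.1f} tok/s")
```

Halving the bytes per parameter doubles the ceiling, which is why quantization and bandwidth tend to matter more than raw FLOPs for interactive agent loops.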

Quick Hits

Agent Frameworks & Orchestration

- Agno (formerly Phidata) launched AgentOS with native tracing and HITL improvements @AgnoAgi.

- Swarms Framework 8.8.0 arrives Jan 1st featuring an enhanced multi-agent marketplace @KyeGomezB.

Tool Use & MCP

- n8n now supports instance-level MCP, allowing agents to trigger internal company workflows @n8n_io.

- A new Playwright MCP server reportedly solves 90% of web navigation issues for agents @AlexReibman.

Agentic Infrastructure

- E2B sandboxes are now providing the primary virtual environment for Manus agent execution @tereza_tizkova.

- Run:ai Model Streamer on GKE enables direct streaming of massive models into GPU memory @GoogleCloudTech.

- Milvus 2.6 introduces tiered storage to slash costs for large-scale agent memory @milvusio.

Models for Agents

- Qwen Code v0.6.0 adds experimental Skills and multi-provider support for Gemini and Anthropic @Alibaba_Qwen.

- Gemini 3 Flash offers configurable reasoning depth for low-latency agentic loops @milvusio.

Subreddit Insights

As Meta snaps up Manus and Anthropic edges out GPT-5.2, the focus is shifting from raw intelligence to system governance.

We are witnessing a fundamental pivot in the agentic stack. For the last year, the industry has been obsessed with the 'brain', the foundational model. But as 2025 closes, the narrative is shifting toward the 'nervous system.' Meta’s $2B acquisition of Manus AI isn’t just a talent grab; it’s an admission that navigating the web requires specialized agentic architecture that general-purpose models still struggle to master. Even the frontier model wars have taken a surprising turn: Anthropic’s Opus 4.5 is winning the hearts of power users with superior reasoning, while OpenAI’s latest iteration faces 'laziness' critiques from the community.

This isn't just about vibes; it’s about reliability. From Microsoft’s new Agent Framework to the rapid maturation of the Model Context Protocol (MCP), builders are creating the 'harnesses' and 'memory layers' needed to stop agents from hallucinating into the void. Whether it's running 355B models on decade-old hardware or implementing CNNs in pure assembly, the message is clear: the Agentic Web is being built from the metal up. Today, we dive into the protocols, frameworks, and power moves defining this transition.

Meta’s $2B Power Play and the Frontier Model Flip r/ArtificialInteligence

The AI industry is undergoing a massive consolidation phase as tech giants secure the 'agentic stack.' Meta has reportedly acquired Singapore-based Manus AI in a deal valued at approximately $2 billion, a move aimed at integrating autonomous agent capabilities directly into its ecosystem. As noted by u/Top-Sock8617, the acquisition specifically targets Manus's proprietary technology for navigating complex web-based tasks, filling a strategic gap left by recent research team departures. This comes as SoftBank has fully funded a $40 billion investment in OpenAI, signaling a 'make-or-break' window for the industry leader.

However, money can't buy immediate performance. The frontier landscape is shifting as practitioners report high satisfaction with Anthropic's Opus 4.5, particularly for complex coding. Users like u/Spirited_Panic_3223 are abandoning ChatGPT in favor of Claude's Max plan, citing superior performance in deep work. In contrast, OpenAI's GPT-5.2 is facing early 'vibe' backlash; u/FloorShowoff notes that 5.2 feels 'lazier' and more shortcut-prone. Industry analysts like @aisafety_expert suggest this may be due to over-alignment or aggressive RLHF constraints intended to reduce compute costs, creating an opening for more reasoning-focused competitors.

Microsoft’s Agent Framework and the Rise of the 'Harness' r/MSSemanticKernel

Microsoft is signaling a major shift in its ecosystem with the release of the Microsoft Agent Framework, moving away from the experimental nature of early AutoGen versions. This new architecture prioritizes state-management and event-driven communication, providing a more robust foundation for enterprise-grade workflows. As highlighted by u/peopleworksservices, the shift addresses critical reliability issues by making agent transitions and decision-making processes explicit and auditable.

This move mirrors a broader industry pivot from models to 'agent harnesses.' u/SalmanRiaz1 argues that current failures are rarely due to a lack of intelligence, but because agents are 'unmanaged.' Expert commentary from u/lexseasson notes that agentic systems often fail due to 'invisible cognitive work'—implicit decisions that aren't captured in the system state. By adopting deterministic control layers like the Contradiction-Free Ontological Lattice (CFOL), developers are ensuring that agent behavior remains logically consistent even when navigating conflicting data inputs.

MCP Hits Critical Mass with Karpathy’s 'Council' r/LocalLLaMA

The Model Context Protocol (MCP) is rapidly maturing into a standard for agentic workflows. u/NeitherRun3631 has open-sourced an MCP server for Andrej Karpathy's 'llm-council' project, bringing multi-LLM deliberation directly into Claude Desktop and VS Code. This implementation facilitates a three-stage 'council' process—individual responses, peer rankings, and final synthesis—all initiated through a single MCP tool call that executes in under 60s. This allows models to outsource complex reasoning to specialized sub-agents rather than attempting to solve every problem within a single context window.
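The three-stage flow is easy to picture in code. The sketch below is not the llm-council or MCP server implementation; `run_council`, the Borda-style scoring, and the choice to have each member rank only its peers are assumptions made to keep the example small. Models are plain callables so it runs without any API keys.

```python
from collections import defaultdict


def run_council(question, members, rank, chairman):
    """Run a three-stage council: answer, peer-rank, synthesize.

    members:  {name: callable(question) -> answer}
    rank:     callable(judge_name, {peer_name: answer}) -> peer names, best first
    chairman: callable(prompt) -> final answer
    """
    # Stage 1: every member answers independently.
    answers = {name: model(question) for name, model in members.items()}

    # Stage 2: each member ranks the other members' answers.
    # Scores are aggregated with a simple Borda count (an assumption).
    scores = defaultdict(int)
    for judge in members:
        peers = {name: text for name, text in answers.items() if name != judge}
        ordering = rank(judge, peers)
        for position, name in enumerate(ordering):
            scores[name] += len(ordering) - position
    ranked = sorted(answers, key=lambda name: scores[name], reverse=True)

    # Stage 3: the chairman sees the question plus answers in ranked order.
    lines = [f"[{i + 1}] {answers[name]}" for i, name in enumerate(ranked)]
    prompt = question + "\n" + "\n".join(lines)
    return chairman(prompt), ranked
```

Exposing this whole function behind one tool call is what keeps the deliberation out of the caller's context window: the calling model sees only the synthesized answer, not the intermediate transcripts.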

Simultaneously, developers are fighting 'tool bloat' with leaner strategies. u/BTForIT successfully optimized the Microsoft Graph MCP by consolidating 37 specialized tools into just 7 high-utility functions, saving 12KB of context window overhead. Beyond data retrieval, the ecosystem is moving toward active system maintenance; u/n8n recently introduced an MCP server that enables LLMs to inspect and debug complex automation workflows in real-time.
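The consolidation pattern generally comes down to replacing many single-purpose tools with one tool that takes an `action` parameter. The sketch below illustrates that trade under stated assumptions: the `mail` tool and its five actions are hypothetical and are not the actual Microsoft Graph MCP tool names, and schema size is measured as serialized JSON length as a rough proxy for context cost.

```python
import json

ACTIONS = ["read", "archive", "delete", "flag", "forward"]


def make_schema(name, description, properties):
    """Build a tool definition in the name/description/inputSchema shape MCP uses."""
    return {
        "name": name,
        "description": description,
        "inputSchema": {"type": "object", "properties": properties},
    }


# Before: one narrowly scoped tool per operation.
separate = [
    make_schema(f"mail_{action}", f"{action.capitalize()} a mail message",
                {"message_id": {"type": "string"}})
    for action in ACTIONS
]

# After: a single tool whose `action` enum selects the operation.
consolidated = [
    make_schema("mail", "Operate on a mail message",
                {"action": {"type": "string", "enum": ACTIONS},
                 "message_id": {"type": "string"}})
]


def schema_bytes(tools):
    """Rough proxy for how much context the tool list consumes."""
    return len(json.dumps(tools))


def mail(action, message_id):
    """Consolidated handler; a real server would call the backing API here."""
    if action not in ACTIONS:
        raise ValueError(f"unknown action: {action}")
    return f"{action}:{message_id}"


print(schema_bytes(separate), "bytes before,", schema_bytes(consolidated), "bytes after")
```

The cost of this design is that validation moves from the schema into the handler, so the enum and clear error messages have to carry more of the load.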

Solving 'Context Amnesia' with Self-Improving Memory r/LangChain

Long-term context loss remains a primary hurdle for autonomous agents, but new 'self-improving' layers are offering a fix. u/Nir777 introduced Mem0, a memory layer that extracts insights and resolves conflicting information across sessions. Unlike static RAG, Mem0 utilizes a dynamic update logic that prioritizes the most recent user interactions, ensuring the agent's internal state remains consistent without manual pruning.
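For intuition, a recency-first update rule can be sketched as follows. This is not Mem0's API or its actual conflict-resolution logic; `RecencyMemory`, the `(subject, attribute)` keying, and the four outcome labels are assumptions chosen to show the basic behavior of letting newer statements win.

```python
from dataclasses import dataclass


@dataclass
class Fact:
    value: str
    timestamp: float


class RecencyMemory:
    """Hypothetical memory layer: one fact per (subject, attribute), newest wins."""

    def __init__(self):
        self.facts = {}

    def update(self, subject, attribute, value, timestamp):
        """Apply one extracted fact and report what happened to it."""
        key = (subject, attribute)
        current = self.facts.get(key)
        if current is None:
            self.facts[key] = Fact(value, timestamp)
            return "added"
        if current.value == value:
            # Same information seen again: just refresh the timestamp.
            current.timestamp = max(current.timestamp, timestamp)
            return "unchanged"
        if timestamp >= current.timestamp:
            # Conflict resolved in favor of the more recent statement.
            self.facts[key] = Fact(value, timestamp)
            return "replaced"
        return "ignored"  # stale information arriving late

    def recall(self, subject, attribute):
        fact = self.facts.get((subject, attribute))
        return fact.value if fact else None
```

The contrast with static RAG is that a conflicting write overwrites the old fact instead of leaving both in the index for the retriever to sort out later.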

Complementing this, u/bantler released 'self-learning-skills,' a repository that acts as a sidecar memory for agents to record 'Aha moments' during task execution. According to discussions in r/PromptEngineering, this shift toward stateful context engineering allows agents to backport their own fixes into their skillsets, with a claimed 100% reduction in repetitive error cycles for recurring tasks.

355B MoEs and Assembly-Level Neural Nets r/LocalLLM

The local LLM community is pushing the boundaries of hardware efficiency. In a stunning display of low-level optimization, u/Forward_Confusion902 implemented a full CNN entirely in x86-64 assembly with no external libraries. On the scaling side, u/at0mi managed to run the massive GLM-4.7 (355B Mixture of Experts) model at 5 tokens/s on a 2015-era CPU-only setup.

These breakthroughs are being bolstered by llama.cpp's new bucket-sort optimization, which speeds up top-k sampling by 2.9x. The implementation specifically addresses the CPU bottleneck where traditional sorting algorithms struggled with large vocabulary sizes, bringing CPU sampling latency closer to dedicated GPU performance. It seems 'scaling laws' are no longer the only path to winning; specialized logic and low-level optimization are proving that agents can thrive on hardware previously thought obsolete.
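The general trick behind bucket-based top-k is to avoid sorting the whole vocabulary: histogram the logits into value ranges, walk down from the highest bucket until at least k candidates are collected, then sort only that small set. The Python below is a sketch of that idea, not the llama.cpp code; `top_k_bucket` and the default of 128 buckets are assumptions for illustration.

```python
def top_k_bucket(logits, k, n_buckets=128):
    """Return indices of the k largest logits, highest first.

    Only the candidates from the top buckets are sorted, not the full list.
    """
    if k <= 0 or not logits:
        return []
    k = min(k, len(logits))
    lo, hi = min(logits), max(logits)
    if lo == hi:
        return list(range(k))  # all values equal: any k indices are valid

    # Map each value to a bucket; larger values land in higher buckets.
    scale = (n_buckets - 1) / (hi - lo)
    buckets = [[] for _ in range(n_buckets)]
    for index, value in enumerate(logits):
        buckets[int((value - lo) * scale)].append(index)

    # Collect whole buckets from the top until we have at least k candidates.
    candidates = []
    for bucket in reversed(buckets):
        candidates.extend(bucket)
        if len(candidates) >= k:
            break

    # Sort only the small candidate set.
    candidates.sort(key=lambda i: logits[i], reverse=True)
    return candidates[:k]
```

Because the bucketing pass is linear and the final sort touches only a handful of entries, the cost is dominated by one scan over the vocabulary rather than a full sort of it.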

Dev Chat Digest

Anthropic’s latest model sets a new gold standard for coding agents while benchmarks expose the hard truth of autonomy.

We are witnessing a fundamental shift in the agentic landscape. For months, the industry wrestled with high latency and fragile loops. This week, the release of Claude 3.5 Sonnet and the optimization of GPT-4o have effectively halved the 'wait time' for autonomous actions, turning what were once sluggish proofs-of-concept into snappy, production-ready tools. While Anthropic has claimed the coding throne with a staggering 37% on SWE-bench, OpenAI is leaning into multimodal efficiency, slashing tool-calling latency to near-human speeds. But speed isn't everything. As the GAIA benchmark reminds us, agents still struggle with the messy reality of the web, failing to break the 40% success threshold where humans thrive. The bottleneck has shifted from 'how fast can the agent think?' to 'how well can the agent reason through non-deterministic failures?' Today, we explore how framework evolutions like LangGraph and CrewAI are building the persistence and hierarchical logic needed to bridge this gap, alongside the rising tide of local agents powered by Llama 3. The era of the 'API wrapper' is over; the era of the autonomous world model has begun.

Claude 3.5 Sonnet Becomes the New Standard for Coding Autonomy

Claude 3.5 Sonnet isn't just an incremental update; it's a declaration of war on developer friction. By hitting a 37% score on the SWE-bench Verified evaluation, it has effectively doubled the performance of GPT-4o in autonomous coding tasks. As @paul-gauthier noted, the model's ability to operate at twice the speed of its predecessor, Opus, has made it the immediate favorite for power users of tools like Aider and OpenHands. The introduction of Artifacts further changes the game by providing a dedicated workspace for agents to iterate on code and UI in real-time. This isn't just about text completion anymore; it's about a closed-loop execution environment where, as @swyx suggests, the 'new gold standard' for agentic software engineering has been set.

GPT-4o Slashes Latency to Power Real-Time Agentic Loops

While Anthropic conquers code, OpenAI is doubling down on raw agentic efficiency. GPT-4o has demonstrated a 2x improvement in processing speed for the function-calling sequences that serve as the nervous system for autonomous agents. By utilizing a unified neural network, OpenAI has managed to bring average audio response latency down to roughly 320 milliseconds. This performance boost, as highlighted by @gregman, allows voice-based agents to manipulate tools in real time without the 'thinking' pauses that break human immersion. On the Berkeley Function Calling Leaderboard, GPT-4o is setting records for time-to-first-token, proving that for many builders, the fastest model is often the most useful one for complex reasoning workflows.

The GAIA Reality Check: Why True Autonomy is Still Hard

Despite the excitement, the GAIA benchmark serves as a necessary reality check for the industry. While humans cruise through these real-world tasks with a 92% success rate, even the most advanced models like Claude 3.5 Sonnet and o1-preview are struggling to break the 40% threshold. The failure points are consistently found in long-sequence reasoning and tool-use hallucinations. The GAIA leaderboard shows that being an 'API wrapper' isn't enough to navigate the non-deterministic nature of the live web. To reach true autonomy, the next generation of agents will need to move beyond scale and toward robust world models that can handle unexpected environment shifts without human intervention.

Frameworks Mature: Persistence and Hierarchical Management Take Center Stage

The plumbing of the agentic web is finally catching up to the models. LangGraph is leading the charge by moving away from simple linear paths toward cyclic workflows that support Postgres and SQLite persistence. This allows for 'Time Travel,' a feature @hwchase17 champions for its ability to let developers pause, rewind, and debug agent states in production. Meanwhile, CrewAI is tackling the management problem. By introducing a Manager Agent to handle hierarchical delegation, builders are seeing up to 30% less manual orchestration logic. As @joaomdmoura points out, this move from sequential flows to autonomous task allocation is what will allow multi-agent systems to scale beyond simple scripts into complex organizations.
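The persistence-plus-rewind pattern is simple to sketch with the standard library. The code below deliberately does not use LangGraph's API: `CheckpointStore`, `run`, and the table layout are assumptions that only illustrate the concept of saving state after every step and resuming from an earlier checkpoint.

```python
import json
import sqlite3


class CheckpointStore:
    """Hypothetical checkpoint store: one JSON state per (thread, step)."""

    def __init__(self, path=":memory:"):
        self.db = sqlite3.connect(path)
        self.db.execute(
            "CREATE TABLE IF NOT EXISTS checkpoints ("
            "thread_id TEXT, step INTEGER, state TEXT, "
            "PRIMARY KEY (thread_id, step))"
        )

    def save(self, thread_id, step, state):
        self.db.execute(
            "INSERT OR REPLACE INTO checkpoints VALUES (?, ?, ?)",
            (thread_id, step, json.dumps(state)),
        )
        self.db.commit()

    def load(self, thread_id, step=None):
        """Return (step, state) for a given step, or the latest one if step is None."""
        if step is None:
            query = ("SELECT step, state FROM checkpoints "
                     "WHERE thread_id = ? ORDER BY step DESC LIMIT 1")
            row = self.db.execute(query, (thread_id,)).fetchone()
        else:
            query = ("SELECT step, state FROM checkpoints "
                     "WHERE thread_id = ? AND step = ?")
            row = self.db.execute(query, (thread_id, step)).fetchone()
        return (row[0], json.loads(row[1])) if row else None


def run(store, thread_id, nodes, state, start_step=0):
    """Run nodes in order from start_step, checkpointing after each one."""
    for step in range(start_step, len(nodes)):
        state = nodes[step](state)
        store.save(thread_id, step + 1, state)
    return state
```

Rewinding then amounts to loading an earlier checkpoint, editing the state, and calling `run` again with `start_step` set to that checkpoint's step, which overwrites the later checkpoints as the new branch executes.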

Local Agent Infrastructure Surges with Llama 3 and Fine-Tuning

Privacy-conscious developers are finding a sanctuary in the Llama 3 ecosystem. By pairing Llama 3 8B with Ollama's native tool support, the barrier to entry for local, high-performance agents has vanished. While base models sometimes struggle with structured output, specialized fine-tunes like Nous Hermes 2 Pro are pushing tool-calling success rates over 90%. This shift toward local autonomy ensures that data sovereignty doesn't have to come at the cost of capability. For many practitioners, the ability to run a robust agentic loop entirely on-device is the ultimate goal, bridging the gap between cloud-dependent prototypes and truly private personal assistants.

SOTA Labs Report

WebRL shatters benchmarks while HuggingFace's SmolAgents simplifies the path from prompt to production.

The era of the 'static agent' is ending. Today’s highlights signal a fundamental shift in how we build and train autonomous systems: we are moving away from fragile JSON-parsing loops and toward agents that actually learn from their environments. WebRL’s breakthrough—achieving a 41.4% success rate on WebArena—proves that online self-evolution isn't just a research concept; it’s the new baseline for GUI navigation. While the big models battle for SOTA, we’re seeing a simultaneous push toward the 'Small and Specialized.' The release of FunctionGemma-270M demonstrates that you don’t need 100B parameters to handle mobile actions; you just need the right architecture at the edge. Crucially, the 'how' of building agents is changing. HuggingFace’s new SmolAgents framework is a bet on Code Agents—systems that treat Python as their primary reasoning language rather than a secondary tool. When you combine this code-first approach with the Model Context Protocol (MCP) for tool interoperability, the friction of agentic integration begins to vanish. Whether it's causal reasoning for software engineering with GraphLocator or recursive planning for deep research, the message is clear: the most effective agents aren't just the smartest; they are the ones best equipped to interact, iterate, and evolve within the real-world stacks they inhabit.

Advancing Autonomous Navigation: WebRL and the Rise of Self-Evolving GUI Agents

A significant cluster of recent research is pushing the boundaries of how agents interact with visual environments, moving beyond static LLMs to dynamic, self-improving systems. WebRL: Training LLM Web Agents via Self-Evolving Online Curriculum Reinforcement Learning introduces a reinforcement learning framework that allows agents to learn from environment feedback. Notably, WebRL-GLM-4 achieves a 41.4% success rate on the WebArena benchmark, significantly outperforming GPT-4o, which typically scores around 18.8% in similar zero-shot settings. This jump highlights the efficacy of curriculum learning and robust reward modeling in bridging the 'semantic gap' for GUI navigation. Other foundational works like Mind2Web and GUI-Agent-Bench provide the necessary datasets and evaluation frameworks to address complex multi-step actions across web and mobile platforms. Furthermore, OS-World and VisualWebArena explore scaling these capabilities to open-ended desktop environments. While models are improving, OS-World notes that even top-tier models like GPT-4o only achieve a 12.24% success rate on desktop tasks, far below the human baseline of 72.36%, underscoring the ongoing challenge of reliable 'computer use' agents for real-world software workflows.

Scaling Agent Literacy: The Rise of HuggingFace’s SmolAgents Framework

HuggingFace has catalyzed a major shift in agentic education with the launch of its Hugging Face Agents Course, designed to democratize the creation of autonomous systems. Central to this curriculum is the smolagents library, a lightweight framework that prioritizes Code Agents—systems that perform actions by writing and executing Python snippets rather than parsing complex JSON schemas. The First_agent_template has already garnered over 850 likes, signaling massive developer interest in modular, code-first agent design. The technical architecture taught in the course revolves around the CodeAgent class, which leverages LLMs to generate executable code, and the Tool class for extending agent capabilities. By utilizing HuggingFaceAgentsCourse_SmolAgent1, learners explore how to manage agent state and memory. This approach, as outlined in the official smolagents documentation, aims to reduce the prompt engineering overhead typically associated with traditional ReAct patterns, instead relying on the inherent logic of Python to handle multi-step reasoning.
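The difference between a JSON tool call and a code action is easiest to see side by side. The sketch below is a conceptual illustration only and does not use the smolagents library: `run_code_action`, the `get_price` tool, and the convention of reading a `result` variable are all assumptions, and the trimmed `__builtins__` is not a real sandbox.

```python
# A JSON-style agent needs one model round trip per tool call, for example:
#   {"tool": "get_price", "arguments": {"item": "apple"}}
# A code agent can instead emit one snippet that loops, filters, and aggregates.

PRICES = {"apple": 0.5, "pear": 0.75, "plum": 1.25}


def get_price(item):
    """Hypothetical tool exposed to the agent."""
    return PRICES[item]


def run_code_action(code, tools):
    """Execute a model-generated snippet with tools in scope; return `result`.

    WARNING: restricting builtins like this is NOT a security boundary.
    Real deployments need an isolated interpreter or container.
    """
    namespace = {"__builtins__": {"sum": sum, "len": len, "range": range}, **tools}
    exec(code, namespace)
    return namespace.get("result")


# Stand-in for what the LLM would generate in a single step.
generated = """
prices = [get_price(item) for item in ["apple", "pear", "plum"]]
result = sum(p for p in prices if p < 1.0)
"""

print(run_code_action(generated, {"get_price": get_price}))
```

Three tool calls, a filter, and a sum collapse into one model turn here, which is the practical argument for treating Python as the action language.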

MCP Hackathon Winners Redefine Agentic Workflows

The Model Context Protocol (MCP) is rapidly becoming the industry standard for connecting AI agents to external data and tools, effectively solving the 'integration tax' that previously hindered agentic development. During the recent Agents & MCP Hackathon, the gradio_agent_inspector emerged as a critical utility for developers, offering a visual debugging interface to monitor how agents interact with MCP-compliant tools in real-time. This interoperability is further showcased by sipify-mcp, which allows models to manage telephony and SIP protocols, demonstrating that even complex communication infrastructure can be abstracted into a standardized toolset. The ecosystem has seen explosive growth, with over 200 community-contributed servers appearing within weeks of the protocol's launch. Even specialized data sources, such as the pokemon-mcp demo, highlight the protocol's flexibility, allowing developers to build 'tool-agnostic' agents that can plug into any compliant server without custom glue code.
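Part of why MCP removes glue code is that every tool invocation has the same wire shape: a JSON-RPC 2.0 request with method `tools/call`, a tool `name`, and an `arguments` object. The sketch below builds such a request and answers it with an in-process stand-in for a server. The `lookup_pokemon` tool and the `handle` function are hypothetical, and no transport, initialization handshake, or SDK is involved.

```python
import json
from itertools import count

_request_ids = count(1)


def tools_call_request(name, arguments):
    """Build an MCP-style `tools/call` request as a JSON-RPC 2.0 message."""
    return {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    }


def handle(request, registry):
    """In-process stand-in for a server; `registry` maps tool names to callables."""
    def error(code, message):
        return {"jsonrpc": "2.0", "id": request["id"],
                "error": {"code": code, "message": message}}

    if request["method"] != "tools/call":
        return error(-32601, "Method not found")
    params = request["params"]
    tool = registry.get(params["name"])
    if tool is None:
        return error(-32602, f"Unknown tool: {params['name']}")
    text = tool(**params["arguments"])
    return {
        "jsonrpc": "2.0",
        "id": request["id"],
        "result": {"content": [{"type": "text", "text": text}], "isError": False},
    }


registry = {"lookup_pokemon": lambda name: f"{name}: electric"}  # hypothetical tool
request = tools_call_request("lookup_pokemon", {"name": "pikachu"})
print(json.dumps(request))
print(json.dumps(handle(request, registry)))
```

Swapping the Pokémon lookup for a telephony or workflow tool changes only the registry entry; the agent-side call stays identical, which is what 'tool-agnostic' means in practice.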

Tiny Models Master Mobile Function Calling: The 270M Parameter Breakthrough

The release of specialized variants like shivshankar2020/functiongemma-270m-it-mobile-actions marks a pivotal moment for on-device intelligence, proving that 270M parameter models can effectively handle complex tool-use tasks. These models are specifically tuned for mobile action sequences, allowing for near-instant execution without cloud round-trips. This ecosystem has rapidly expanded into multilingual support, with mrm8488/functiongemma-270m-it-ft-mobile-actions-fr and mrm8488/functiongemma-270m-it-ft-mobile-actions-es bringing edge-agent capabilities to French and Spanish users. Industry experts like @_mrm8488 highlight that these Small Language Models (SLMs) represent a 'paradigm shift' for privacy-first automation. Practical applications are surfacing, such as the Mr-Dodi/functiongemma-cashmanager, which manages sensitive financial data entirely locally. On benchmarks like the Berkeley Function Calling Leaderboard (BFCL), FunctionGemma's architecture targets sub-100ms latency on modern mobile chipsets.

Causal Reasoning for Automated Issue Localization

The research paper GraphLocator: Graph-guided Causal Reasoning for Issue Localization introduces a novel framework to bridge the semantic gap between natural language issues and source code. By leveraging Graph-guided Causal Reasoning (GCR), the system identifies symptom-to-cause mismatches that traditional RAG systems often miss. According to the research, GraphLocator achieves a Top-1 accuracy of 31.4% on the SWE-bench Lite dataset, significantly outperforming existing methods like BM25 and standard LLM-based retrievers by filtering out irrelevant code paths through causal analysis @_akhaliq. The core innovation lies in its ability to navigate complex Program Dependency Graphs (PDG) to isolate the root cause of a bug. As noted by @arankomatsuzaki, this approach allows software agents to understand the 'underlying causal architecture' of a repository, leading to more reliable bug-fixing workflows.

Open Source Deep Research: Iterative Planning and Specialized Domain Agents

Specialized agents for 'Deep Research' are rapidly maturing in the open-source ecosystem. miromind-ai/MiroMind-Open-Source-Deep-Research utilizes a recursive planning architecture that mimics human research workflows by verifying each step before proceeding. This contrasts with pdx97/ScholarAgent, which prioritizes structured academic retrieval and multi-document synthesis to handle scientific queries. Industry experts such as @_akhaliq have highlighted these tools as significant milestones in autonomous information synthesis. In specialized domains, google/ehr-navigator-agent-with-medgemma applies these agentic patterns to electronic health records. By integrating MedGemma, the system achieves higher accuracy in clinical data retrieval, demonstrating how domain-specific fine-tuning enhances the reliability of autonomous research agents in professional environments.