Hardening the Agentic Production Stack

From chaos engineering to code-centric execution, agents are moving out of the sandbox and into the machine room.

AgentBrief for Jan 02, 2026

X Infrastructure Feed

If you aren't building agent-first sandboxes, you aren't building for the real world.

We are officially moving past the 'chatbox as UI' era into the 'agent as infrastructure' era. Anthropic’s Claude Code and Microsoft’s Agent Workspace aren't just feature updates; they represent a foundational shift in how software interacts with the OS and the web. As builders, our focus is shifting from merely prompting models to architecting secure, stateful execution environments that can handle the autonomy we're finally able to grant them. Amazon's leadership shuffle, which replaces research-heavy AGI leads with AWS infrastructure titans, signals that the 'Agentic Web' is no longer a research project; it's a scaling challenge. The industry is pivoting from 'what can the model do?' to 'what can the system safely execute at scale?' This issue dives into the tension between capability and security, the rising cost of long-running agentic loops, and why the sandbox is the new frontier for production-ready agents. For those of us shipping today, the message is clear: the infrastructure must now catch up to the intelligence.

Claude Code’s ‘Skills’ Framework Pushes Local Execution to the Edge

The agentic landscape has been transformed with the release of Claude Code and Opus 4.5, which @bindureddy praises as 'head and shoulders' above other models in real-world agentic scenarios. A standout feature for developers is the 'Skills' capability, enabling custom functionalities within Claude Code. @rileybrown demonstrated this by building a skill that allows Claude Code to post directly to X, showcasing the potential for seamless tool integration. However, the community is already flagging the double-edged nature of this power. @cyberseb_ warns of the risk of malicious skills executing ransomware or data theft due to their inherent access to local files and networks.

Further enhancing Claude Code's capabilities, the /chrome command connects directly to the Chrome extension, bypassing the need for MCP entirely, as highlighted by @rileybrown. Despite this innovation, concerns around authentication and cross-site scripting (XSS) persist. @mbrg0 emphasized that Claude's browser control capabilities could undo years of effort spent preventing web vulnerabilities. While Anthropic staff like @deredleritt3r report a 220% mean productivity improvement, practitioners like @vikhyatk suggest that as codebases grow, developers must disable autocompaction to maintain task execution efficiency.

Amazon Pivots to Planetary-Scale Agentic Infrastructure

Amazon is undergoing a massive restructuring of its AI leadership, marking a strategic pivot from pure AGI research to infrastructure-led deployment. The transition from AGI head Rohit Prasad to AWS infrastructure veteran Peter DeSantis indicates a shift toward operational scalability, as noted by @StockMKTNewz and @actu_ia. DeSantis, the architect behind EC2 and the Annapurna Labs acquisition, brings an infrastructure-first perspective that prioritizes efficient deployment via custom silicon and AWS's global reach @FTayAI.

This leadership change suggests Amazon is positioning itself as the 'planetary scale' backbone for AI agents. The integration of physical AI is also accelerating, with robotics pioneer Pieter Abbeel taking a prominent role following the acquisition of Covariant, valued at $400M @bookwormengr. While recent AWS re:Invent updates introduced agentic tools like Kiro and the AWS DevOps Agent @testingcatalog, some critics like @kimmonismus caution that moving from consumer AI like Alexa to complex enterprise robotics could face significant integration hurdles. Nevertheless, the focus on Bedrock AgentCore and Trainium3 chips shows Amazon's intent to dominate business automation at an unprecedented scale @N0uai.

Nvidia NitroGen: Pioneering Generalist Gaming Agents

Nvidia's NitroGen model represents a significant leap for generalist agents designed to master video games without specific prior training. As @_akhaliq noted, this foundation model showcases impressive generalization across genres, echoing the potential of neural models in real-time engines previously seen in Google’s GameNGen. This trend suggests that foundation models are becoming robust enough to handle the complex, interactive environments required for gaming automation.

Skepticism remains regarding the model's true autonomy, with @JakeLindsay reflecting on the broader challenges of achieving true general intelligence in gaming scenarios. The discussion often overlaps with multi-agent simulation research, as highlighted by @_akhaliq, suggesting that NitroGen is part of a larger push toward agents that can simulate and act within diverse digital worlds.

METR Debate: Balancing Progress with Runtime Costs

The AI community is currently debating the utility of METR long-task measurements as a benchmark for agentic progress. @emollick argues that METR scores correlate highly with other key metrics, making them a valuable, ceiling-free indicator of intelligence. However, the performance gap is widening: according to @scaling01, Claude Opus 4.5 posted a METR time horizon of 4 hours 49 minutes, significantly ahead of GPT-5.1’s 2 hours 53 minutes. (The time horizon is the length of task, measured in human-expert time, that a model completes with 50% success.)

These long horizons come at a steep price, raising concerns about the economic feasibility of agentic evaluations. @scaling01 points out that high token usage in models like Opus 4.5 can lead to costs exceeding $10 per million tokens, which may hinder practical enterprise adoption. While @emollick sees the lack of a performance ceiling as a strength, the community is increasingly calling for a more holistic framework that balances task completion with runtime efficiency.
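To see why long runs get expensive, a back-of-the-envelope estimate helps. The token counts and per-million-token prices below are illustrative assumptions, not published figures for any model; the point is that an agent that re-sends its growing context every turn pays mostly for input tokens.

```python
def run_cost_usd(input_tokens: int, output_tokens: int,
                 input_price_per_m: float, output_price_per_m: float) -> float:
    """Estimate the dollar cost of one agent run from token counts and per-million-token prices."""
    return (input_tokens * input_price_per_m + output_tokens * output_price_per_m) / 1_000_000

# Hypothetical long-horizon run: 8M input tokens (context re-sent on every turn) and 400K output tokens,
# priced at an assumed $5 per million input tokens and $25 per million output tokens.
cost = run_cost_usd(8_000_000, 400_000, 5.0, 25.0)
print(f"${cost:.2f}")
```

Under these assumptions the input side dominates, which is why context trimming and caching matter as much as the headline price.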

Benchmarking Document Parsers for Agentic RAG

A deep dive into document parsing for agentic RAG systems has revealed critical performance gaps in complex domains. @MaryamMiradi reports that Andrew Ng's DPT-2 and PDFPlumber significantly outperformed Unstructured.io in handling intricate financial and medical data. Because agentic RAG involves autonomous reasoning rather than simple retrieval, the choice of parser is becoming a make-or-break decision for production environments @MaryamMiradi.

In parallel, self-learning patterns are emerging to improve long-term agent memory. @ashpreetbedi highlighted a pattern using ChromaDB and human-in-the-loop validation to refine retrieval over time. This aligns with broader shifts toward hybrid search and domain-specific reranking to avoid context overload, a principle @MaryamMiradi notes is essential for maintaining agent efficiency in high-stakes industries.

Microsoft’s Agent Workspace for Windows 11 Raises Security Flags

Microsoft has unveiled Agent Workspace, a dedicated desktop session in Windows 11 designed specifically for autonomous processes. According to @theagentangle, this feature gives agents default access to files through a specialized runtime, fundamentally shifting the OS model from human-centric to agent-centric. While this unlocks new automation capabilities, the 'limited' safeguards currently in place have sparked security concerns regarding non-human controlled environments.

Commentary on X suggests this is a move toward an ecosystem where capability evolution is prioritized over data dependency @theagentangle. However, the consensus among builders is that the battle for dominance in this space will hinge on security resilience. As @theagentangle points out, the urgency for robust security models is paramount as we move toward a world of fully autonomous OS interactions.

Quick Hits

Agent Frameworks & Orchestration

- LangChain released a 5-step pipeline tutorial for building agents using retrievers and loaders @LangChainAI.

- Agno launched a multi-agent Creative Studio powered by native Gemini 3 tool use @AgnoAgi.

- Manus AI now features a browser extension for mobile automation, mirroring Claude's Chrome integration @ivanleomk.

Tool Use & Function Calling

- Playwright MCP tools can inject thousands of tokens into context, driving up latency and costs @sawyerhood.

- Research shows that increasing tool-call budgets fails to improve agent performance without better 'tool awareness' @dair_ai.

Memory & Context

- Oracle AI Developer Hub unveiled six memory patterns for LangChain agents using Oracle AI Database @LangChainAI.

- Chroma Cloud added Customer-managed Encryption Keys (CMEK) for secure enterprise agent workloads @trychroma.

- SQL memory layers are becoming the preferred pattern for managing structured agent state @tom_doerr.

Agentic Infrastructure

- Runloop AI is enabling 'Deep Agents' to run in enterprise-grade code sandboxes for secure execution @hwchase17.

- Only 15% of GenAI pilots currently reach enterprise scale, highlighting a massive production gap @FTayAI.

Reddit Engineering Log

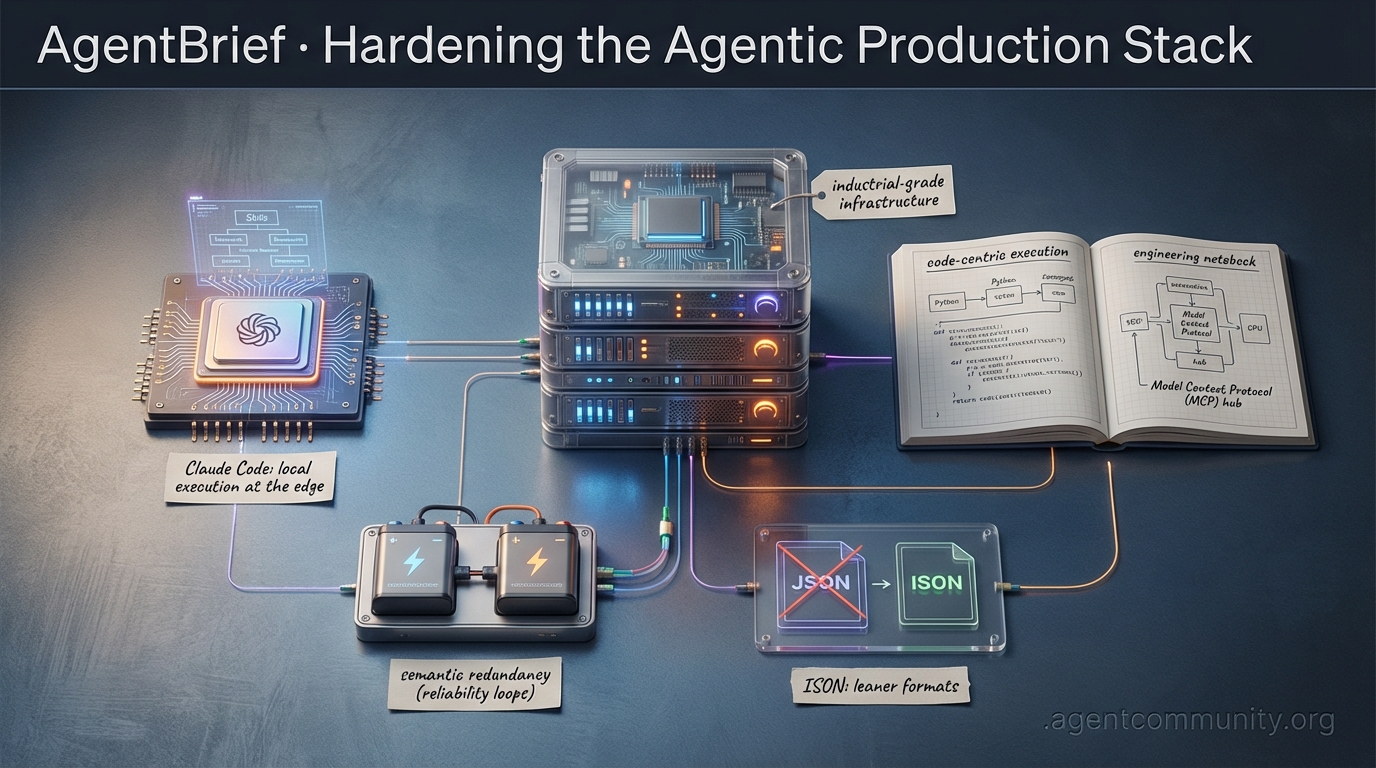

From chaos engineering to token-lean architectures, the agentic web is finally getting its industrial-grade infrastructure.

The honeymoon phase of 'vibes-based' agent development is ending. We are entering the era of the hardened stack. Today’s issue highlights a critical shift: the community is no longer satisfied with agents that work 'most of the time.' We are seeing the rise of chaos engineering for LLMs with tools like agent-chaos, designed to break systems before they hit production. Simultaneously, the 'JSON tax' is under fire. As context windows grow, the overhead of structural data is becoming a bottleneck, leading to leaner formats like ISON that claim to slash token usage by 70%. It is not just about raw intelligence anymore; it is about architectural efficiency and deterministic execution. We are also tracking the Model Context Protocol’s (MCP) rapid expansion into privacy and cloud documentation, suggesting that standardizing the 'connective tissue' between models and tools is key to scaling. Whether it is local-first GraphRAG or ultra-deep architectural breakthroughs from DeepSeek, the message is clear: the builders are moving from experimentation to industrialization. The following sections detail the tools and protocols making autonomous systems actually reliable.

Chaos Engineering and Production Hardening r/LLMDevs

As developers move past simple demos, the focus is shifting toward production reliability and 'judgment' rather than just raw intelligence. u/deepankarmh introduced agent-chaos, an open-source tool designed to simulate LLM provider failures, rate limits, and 'semantic chaos' like fabricated tool data. This addresses the common issue where agents fail silently or enter infinite loops when production environments deviate from local testing.
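The core idea of fault injection can be sketched in a few lines. This is not agent-chaos's API, which isn't described here; `with_chaos`, `RateLimitError`, and `call_with_retry` are hypothetical names, and the bounded-retry policy is just one behavior a chaos test might check for.

```python
import random
from typing import Callable

class RateLimitError(Exception):
    """Stand-in for a provider's HTTP 429 response (hypothetical)."""

def with_chaos(call: Callable[[str], str], failure_rate: float, seed: int = 0) -> Callable[[str], str]:
    """Wrap an LLM call so that roughly `failure_rate` of requests fail with a simulated rate limit."""
    rng = random.Random(seed)  # seeded so a failing run can be replayed exactly

    def chaotic(prompt: str) -> str:
        if rng.random() < failure_rate:
            raise RateLimitError("simulated 429")
        return call(prompt)

    return chaotic

def call_with_retry(call: Callable[[str], str], prompt: str, max_attempts: int = 5) -> str:
    """Behavior under test: bounded retries that fail loudly instead of silently or forever."""
    for _ in range(max_attempts):
        try:
            return call(prompt)
        except RateLimitError:
            continue  # a real client would also back off before retrying
    raise RuntimeError(f"gave up after {max_attempts} attempts")

def fake_llm(prompt: str) -> str:
    """Deterministic stand-in for a model call."""
    return f"echo: {prompt}"

flaky = with_chaos(fake_llm, failure_rate=0.5, seed=42)
print(call_with_retry(flaky, "plan the migration"))
```

Setting `failure_rate=1.0` is a quick way to confirm that the agent surfaces an error rather than looping.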

Industry trends suggest that while observability platforms like Arize Phoenix provide critical OTLP-compliant tracing, they must be paired with active disruption tools to ensure 99.9% reliability in autonomous workflows. Complementing this is Runr, a reliability-first orchestrator built by u/vonwao for long-running coding tasks. Runr implements 'milestone commits' and scope guards, allowing agents to recover from failures at specific checkpoints rather than restarting multi-step tasks from scratch. Meanwhile, u/the_void_the_void released Lightbox, a runtime layer that enforces deterministic tool execution and provides replayable traces to solve the 'black box' problem in tool-calling LLMs.
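Milestone commits amount to checkpointing after each completed step. The sketch below assumes a JSON file as the checkpoint store and a dict of named step functions; it illustrates the recovery idea only and is not Runr's actual implementation.

```python
import json
from pathlib import Path
from typing import Callable

def run_with_milestones(steps: dict[str, Callable[[dict], dict]], state_file: Path) -> dict:
    """Run named steps in order, persisting state after each so a crash resumes at the last milestone."""
    if state_file.exists():
        checkpoint = json.loads(state_file.read_text())
    else:
        checkpoint = {"done": [], "state": {}}
    for name, step in steps.items():  # dicts preserve insertion order, so steps run as declared
        if name in checkpoint["done"]:
            continue  # committed in an earlier run; skip instead of redoing the work
        checkpoint["state"] = step(checkpoint["state"])
        checkpoint["done"].append(name)
        state_file.write_text(json.dumps(checkpoint))  # the "milestone commit"
    return checkpoint["state"]
```

If a step raises, the file still records every earlier milestone, so calling the function again with the same steps skips straight to the step that failed.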

The 'JSON Tax' Rebellion and Token Efficiency r/LLMDevs

The 'JSON tax' is real, and developers are tired of paying it in long-context applications. u/Immediate-Cake6519 has introduced ISON (Insignificant Syntax Object Notation), a format that strips redundant structural characters to achieve a reported 70% reduction in token usage compared to standard JSON. This is not just a niche optimization; it is a direct response to the way BPE tokenizers handle whitespace, making it more efficient for 'context stuffing' in dense agentic workflows.
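The intuition behind formats like ISON is that uniform records repeat every key, quote, and brace. The encoder below is an ISON-like illustration, not the ISON specification: it writes the keys once as a header and the values as delimited rows. Character counts are only a rough proxy for tokens, which depend on the model's tokenizer.

```python
import json

def to_compact_table(records: list[dict]) -> str:
    """Encode uniform records as one header row plus pipe-delimited value rows (ISON-like, not ISON)."""
    keys = list(records[0])
    lines = ["|".join(keys)]
    lines += ["|".join(str(r[k]) for k in keys) for r in records]
    return "\n".join(lines)

# Hypothetical CRM-style records with identical keys.
records = [{"id": i, "name": f"user{i}", "plan": "pro"} for i in range(50)]
as_json = json.dumps(records, indent=2)
compact = to_compact_table(records)
print(len(as_json), len(compact), f"{1 - len(compact) / len(as_json):.0%} fewer characters")
```

A production encoder would also need escaping for values that contain the delimiter or a newline.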

Beyond syntax, structural data optimization is proving vital for ROI. u/Unable-Living-3506 recently demonstrated that information-preserving optimizations for CRM records can slash costs by 3x while boosting agent reliability from 85.3% to 94.0%. This shift toward 'token-aware' engineering comes as Gartner analysts, noted by u/Deep_Structure2023, predict that 40% of agentic AI projects will be abandoned by 2027 due to escalating costs. Experts like @SullyOmarr have echoed this, suggesting that prompt compression is now a requirement for production-grade agents.

MCP Ecosystem Expands with Privacy-First 'Connective Tissue' r/mcp

The Model Context Protocol (MCP) ecosystem is rapidly diversifying beyond simple file access, with a sharp focus on local-first security. A standout entry is RedactAI, an MCP server developed by u/Gullible-Relief-5463 that functions as a local privacy firewall. By utilizing Ollama to detect and redact sensitive data from PDFs locally, it ensures PII is stripped before reaching cloud LLMs. This aligns with Anthropic's security guidance for MCP, which stresses that local MCP servers run on the user's host machine and should act only with explicit user permission.
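The "redact before anything leaves the machine" step can be sketched with regular expressions. This swaps in simple pattern matching for the local-LLM detection RedactAI reportedly performs through Ollama; the patterns are illustrative assumptions and will miss plenty of real-world PII.

```python
import re

# Hypothetical, deliberately simple patterns; an LLM-based detector catches far more than this.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}

def redact(text: str) -> str:
    """Replace every detected PII span with a typed placeholder before text is sent to a cloud model."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text

print(redact("Contact jane.doe@example.com or 555-867-5309; SSN 123-45-6789."))
```

Typed placeholders such as `[EMAIL]` keep enough structure for the cloud model to reason about the document without seeing the values.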

Beyond privacy, specialized servers are streamlining niche developer workflows. u/init0 launched a server to query TC39 JavaScript proposals, while u/miz_priz introduced a server for Google Cloud Platform documentation. The ecosystem now includes specialized tools for Venice AI and ClinicalTrials.gov, signaling MCP's role as the standard 'connective tissue' for agentic toolsets. This rapid growth is evidenced by the 100+ community-built servers now indexed in registries like Smithery.ai.

Knowledge Graphs Move to the Edge r/LangChain

GraphRAG is transitioning from heavy enterprise infrastructure to lightweight, embedded solutions. u/Fit-Presentation-591 recently highlighted GraphQLite, an SQLite extension that introduces Cypher query support directly into the local environment. By leveraging SQLite, developers can achieve sub-10ms query latency for knowledge bases, bypassing the memory overhead of traditional graph databases like Neo4j.
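GraphQLite's own API isn't shown here, so this sketch uses a related but different technique: plain SQLite with a recursive CTE over an edge table, via Python's built-in `sqlite3` module. It answers the kind of bounded path query that Cypher expresses as a variable-length match; the schema and data are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE edges (src TEXT, rel TEXT, dst TEXT);
CREATE INDEX edges_src ON edges(src);
INSERT INTO edges VALUES
  ('alice', 'WORKS_ON', 'billing-service'),
  ('billing-service', 'DEPENDS_ON', 'postgres'),
  ('postgres', 'RUNS_ON', 'db-host-1'),
  ('bob', 'WORKS_ON', 'search-service');
""")

def reachable(start: str, max_hops: int = 3) -> list[tuple[str, int]]:
    """Nodes reachable from `start` within `max_hops`, each with its shortest hop count."""
    return conn.execute("""
        WITH RECURSIVE walk(node, depth) AS (
            SELECT ?, 0
            UNION
            SELECT e.dst, w.depth + 1
            FROM edges e JOIN walk w ON e.src = w.node
            WHERE w.depth < ?
        )
        SELECT node, MIN(depth) FROM walk
        WHERE depth > 0
        GROUP BY node
        ORDER BY MIN(depth), node
    """, (start, max_hops)).fetchall()

print(reachable("alice"))
```

The depth bound keeps the recursion finite even if the graph contains cycles.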

On the implementation side, projects like Jarvis are pushing the boundaries of local AI memory. u/danny_094 is testing a system that integrates Ollama and DeepSeek with structured knowledge graphs to solve the 'context re-explaining' problem. Technical benchmarks suggest that for knowledge bases under 100,000 nodes, SQLite-based graph extensions provide superior read/write performance. Industry consensus, as discussed in r/ClaudeAI, suggests that over the course of 2026, 'Long-term Memory' (LTM) will move to automated, background graph-indexing of every user interaction.

Small Models Gain Massive Context and Coding Prowess r/LocalLLaMA

The 'small model' revolution continues with the release of Youtu-LLM-2B, a 1.96B parameter model that features a native 128K context window. According to u/KvAk_AKPlaysYT, the model leverages a Dense MLA architecture and supports reasoning-mode (Chain of Thought), outperforming many larger models on agentic benchmarks. This efficiency makes it a prime candidate for edge-based agents processing large local documents.

In the coding domain, IQuest-Coder-V1-40B has surfaced with a claimed 81.4% score on SWE-Bench Verified. However, the community is performing forensic analysis; u/Wittica suggests it may be a 'Frankenstein' hybrid, likely a merge of Llama-3.1 and Qwen2.5 weights. Despite the controversy over 'weight-mixing,' the proliferation of specialized 40B and 100B models like Solar-Open-100B is providing local developers with unprecedented reasoning power for complex software engineering tasks.

The Rapidly Expanding Ecosystem for Claude Code r/ClaudeAI

Since the launch of Claude Code, a secondary ecosystem of management and visualization tools has emerged to bridge the gap between terminal execution and developer experience. u/kaldown released CCPM, a terminal-based plugin manager designed to eliminate the friction of manually editing settings for project scopes.

Visualization is also becoming a priority. claude-run, developed by u/kamranahmed_se, allows developers to browse session history in a web browser and live-stream active chats. This trend of 'vibe-coding' is yielding significant productivity; u/prajwal_y reported building a complete automated video generation pipeline using Claude Code in just 3 days. These tools are transforming Claude Code from a CLI agent into a robust, extensible environment.

DeepSeek’s mHC: Solving Representation Collapse r/MachineLearning

DeepSeek has introduced Manifold-Constrained Hyper-Connections (mHC), a novel architectural framework designed to mitigate 'representation collapse' in ultra-deep neural networks. As highlighted by u/Nunki08, mHC ensures that information flow is constrained to a high-dimensional manifold, preventing feature rank from diminishing. This potentially enables the stable training of models with over 1,000 layers.
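Our understanding, which readers should verify against the paper, is that the "manifold" in mHC is the set of doubly stochastic matrices and that the residual mixing matrices are projected onto it with Sinkhorn-Knopp iterations. The sketch below shows only that projection step on a toy 3x3 matrix, not the full architecture; the input values are arbitrary.

```python
import math

def sinkhorn_knopp(logits: list[list[float]], iters: int = 100) -> list[list[float]]:
    """Project an unconstrained square matrix toward the doubly stochastic set (rows and columns sum to 1)."""
    m = [[math.exp(x) for x in row] for row in logits]  # exponentiate so every entry is strictly positive
    n = len(m)
    for _ in range(iters):
        # Normalize each row to sum to 1.
        row_sums = [sum(row) for row in m]
        m = [[x / s for x in row] for row, s in zip(m, row_sums)]
        # Normalize each column to sum to 1; alternating the two converges for positive matrices.
        col_sums = [sum(m[i][j] for i in range(n)) for j in range(n)]
        m = [[m[i][j] / col_sums[j] for j in range(n)] for i in range(n)]
    return m

mix = sinkhorn_knopp([[0.5, -1.0, 0.8], [0.2, 0.0, -0.5], [-0.9, 1.0, 0.3]])
print([round(sum(row), 6) for row in mix])                              # row sums
print([round(sum(mix[i][j] for i in range(3)), 6) for j in range(3)])  # column sums
```

Because every row sums to 1, mixing a constant signal leaves it unchanged, and products of doubly stochastic matrices stay doubly stochastic, which is one plausible reason such a constraint keeps very deep stacks stable.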

Simultaneously, the community is pivoting toward Modular, Competence-Routed Architectures. u/hatekhyr argues that the next evolution of LLMs involves a transition from monolithic weights to systems where a router selects specialized modules. This shift aligns with the 'sub-agent' paradigm discussed by u/AdditionalWeb107, who notes that modularity can yield 30-40% improvements in debuggability and lower inference latency.

The Rise of Traceable Reasoning in Agentic AI r/AI_Agents

Monitoring agents that perform dozens of tool calls is currently described as 'total chaos' by practitioners like u/Capital-Job-3592, who highlights the urgent need for a unified observability layer. Startups like AgentOps provide SDKs that track tool-call failures and agent reasoning in real-time, while Langfuse allows monitoring the exact cost per run across complex chains.

Early adopters are moving toward 'judgment logs' to track policy versions and risk levels. u/Echo_OS argues that simply knowing model output is insufficient; developers require visibility into active policies and human-in-the-loop bypasses. To address this, Arize Phoenix provides open-source 'trace' visualizations that map out the internal logic of an agent's decision-making process, serving as the primary audit trail for safety and compliance as noted by u/According-Site9848.
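A judgment log differs from an ordinary trace in what it records per decision. The record below is a hypothetical schema (the field names are our own, not taken from AgentOps, Langfuse, or Arize Phoenix) that captures the active policy version, the risk level, and whether a human gate was bypassed, serialized as JSON lines.

```python
import json
from dataclasses import dataclass, asdict, field
from datetime import datetime, timezone

@dataclass
class JudgmentRecord:
    """One audit entry: what the agent decided and under which policy, not just what the model said."""
    run_id: str
    tool: str
    decision: str                  # e.g. "allowed", "blocked", "escalated"
    policy_version: str            # which rule set was active when the decision was made
    risk_level: str                # e.g. "low", "medium", "high"
    human_override: bool = False   # True if a human-in-the-loop gate was bypassed or overruled
    timestamp: str = field(default_factory=lambda: datetime.now(timezone.utc).isoformat())

def log_judgment(record: JudgmentRecord, sink: list[str]) -> None:
    """Append the record as one JSON line; a real system would write to durable storage or a tracing backend."""
    sink.append(json.dumps(asdict(record), sort_keys=True))

audit_log: list[str] = []
log_judgment(JudgmentRecord("run-42", "issue_refund", "escalated", "refunds-v3", "high"), audit_log)
print(audit_log[0])
```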

Discord Architectural Brief

From rumored Claude 4.5 sightings to the technical necessity of repeating yourself, the agentic web is rewriting the software playbook.

The shift from Software 1.0 to the Agentic Web is no longer a forecast; it is a live deployment. This week, the industry is buzzing with 'mystery model' sightings on LMSYS that suggest Anthropic and Google are about to move the goalposts again with Claude 4.5 and Gemini 3. But for the builders in the trenches, the real story is architectural. We are seeing a fundamental rejection of the 'Don't Repeat Yourself' (DRY) principle in favor of 'Semantic Redundancy.' As LLMs struggle with context drift in long-running autonomous loops, developers are finding that repeating instructions is a feature, not a bug. This transition toward 'Software 3.0' is manifesting in everything from Cursor's race conditions to Microsoft's GraphRAG breakthroughs. While the hardware costs for local inference are surging toward $11,000 for a professional rig, the software layer is becoming more resilient through activation steering and hybrid memory. Today’s issue explores how we are moving past simple chat interfaces into persistent, self-correcting agents that favor operational uptime over traditional coding elegance.

Frontier Models: Claude 4.5 and Gemini 3 Rumors Intensify

The agentic community is currently tracking high-performance 'mystery models' on LMSYS Chatbot Arena that many speculate are precursors to Claude 4.5 or Gemini 3. While Anthropic has not officially confirmed a 'Claude 4.5 Opus' release, @vinniefalco documented using a purported Opus 4.5 model within the Cursor IDE to generate high-quality research, fueling rumors of a silent rollout. In the LMArena general channel, magnuxdev identified a specific 'Opus 4.5 Thinking 32k' variant, suggesting a focus on enhanced reasoning capabilities similar to recent 'Chain of Thought' breakthroughs.

Parallel to the Claude hype, Google's roadmap for Gemini remains a focal point for agent builders. While Gemini 3 benchmarks remain speculative, users like paws9678 emphasize that the current 1 million token context window of Gemini 1.5 Pro remains the gold standard for long-horizon planning. However, reports of 'Gemini 3' sightings in benchmarking environments suggest a significant jump in bug-fixing and research proficiency. Industry watchers such as @LiamFedus have noted that frontier models are increasingly optimized for autonomous workflows, though timjuice warned that the performance of upcoming 'Thinking' models might even require 'nerfing' to prevent hyper-optimization.

Join the discussion: discord.gg/lmsys

Beyond DRY: The Case for Semantic Redundancy

The technical debate surrounding 'Semantic Programming' marks a fundamental shift from traditional Software 1.0 principles. While inari_no_kitsune notes that traditional paradigms prioritize 'Don't Repeat Yourself' (DRY), agentic workflows thrive on semantic redundancy. This is supported by research into prompt engineering where repeating key constraints across markdown files and system prompts reduces 'context drift.' As codexistance clarifies, while DRY prevents logic duplication, semantic repetition ensures the agent maintains focus during long-running autonomous loops.
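In practice, semantic redundancy can be as simple as re-injecting non-negotiable constraints on a schedule instead of stating them once at the top of a long session. The constraint text and the every-5-turns interval below are hypothetical choices for illustration.

```python
# Hypothetical project constraints that must survive long autonomous loops.
CORE_CONSTRAINTS = [
    "Never modify files outside src/.",
    "Run the test suite before declaring a task done.",
]

def build_turn_prompt(turn: int, task_update: str, every: int = 5) -> str:
    """Restate the core constraints on turn 0 and every `every` turns after, to counter context drift."""
    parts = []
    if turn % every == 0:
        reminder = "\n".join(f"- {c}" for c in CORE_CONSTRAINTS)
        parts.append("Reminder of non-negotiable constraints:\n" + reminder)
    parts.append(task_update)
    return "\n\n".join(parts)

print(build_turn_prompt(10, "Next: refactor the billing module."))
```

The repetition is deliberate: it trades a few tokens per reminder for keeping the constraints near the end of the context, where the model is attending.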

This shift suggests a move toward a 'Pattern-match -> Generated command' execution model rather than the standard 'Source -> Compiler -> Binary' flow. Industry experts like @karpathy have previously described this 'Software 2.0/3.0' transition, in which natural language becomes the primary instruction set. For practitioners, this means intentional redundancy is no longer a sign of poor craftsmanship but a necessary guardrail for high-fidelity reasoning in multi-agent systems.

Join the discussion: discord.gg/anthropic

Hybrid Graph-RAG and Activation Steering Redefine Persistence

Advanced memory architectures are shifting from simple vector lookups to complex, state-aware systems. Developer heloerebus is currently prototyping a hybrid system for Claude Code that fuses graph memory with specialized RAG via LanceDB. This approach aligns with the release of Microsoft's GraphRAG, which utilizes LLM-generated knowledge graphs to provide up to 80% better comprehensive information retrieval than standard RAG by connecting disparate data points that vector search often misses.

Simultaneously, 'mood' and behavioral steering are moving into experimental deployment. legoman8014 recently proposed a 'virtual reality show' featuring 30 models utilizing 'ActAdd' (activation addition) steering vectors to adjust internal planning strategies dynamically. This technique is rooted in Representation Engineering (RepE), which allows for the direct manipulation of an LLM's internal activations. By applying these vectors, developers can effectively give agents a 'disposition' or 'emotional memory,' allowing them to pivot strategies without the overhead of long-context re-prompting.
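Activation-addition steering reduces to vector arithmetic: take the difference between a layer's activations on two contrasting prompts, then add a scaled copy of that difference to the hidden state during later forward passes. The 4-dimensional vectors below are toy stand-ins; in a real model they would be captured and modified with a forward hook at one chosen layer, and the coefficient is a hypothetical tuning knob.

```python
def steering_vector(act_positive: list[float], act_negative: list[float]) -> list[float]:
    """Difference of one layer's activations on two contrasting prompts (e.g. 'cautious' vs 'reckless')."""
    return [p - n for p, n in zip(act_positive, act_negative)]

def steer(hidden: list[float], vector: list[float], coeff: float) -> list[float]:
    """Add the scaled steering vector to a hidden state at the chosen layer."""
    return [h + coeff * v for h, v in zip(hidden, vector)]

# Toy activations standing in for a real model's residual stream.
cautious = [0.9, 0.1, 0.4, 0.0]
reckless = [0.1, 0.7, 0.4, 0.2]
v = steering_vector(cautious, reckless)
print(steer([0.5, 0.5, 0.5, 0.5], v, coeff=2.0))
```

A negative coefficient pushes in the opposite direction, which is how one vector can encode a dial rather than a switch.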

Join the discussion: discord.gg/anthropic

Cursor Faces Technical Hurdles Amidst Autonomous Innovation

Cursor is currently navigating technical debt as its agentic features scale. Users like ancalagon7777 have identified critical race conditions in 'Plan mode' where multiple agents attempt to write simultaneously, leading to overwritten outputs. Further community debugging by hime.san pinpointed a serialization error within StreamUnifiedChatRequestWithTools stemming from how terminal exit codes are processed in PowerShell compared to CMD.

Beyond bug fixes, the community is pushing for deeper agency. rileye9289 has proposed the 'Ralph Wiggum' loop, a plugin architecture that would allow Cursor agents to autonomously re-invoke themselves until a defined exit condition is met. This marks a significant move toward removing the human 'middle-man' from the prompting cycle, though it requires resolving the current serialization and race condition bottlenecks first.
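The re-invocation loop can be sketched as follows. `run_agent` and `is_done` are hypothetical callables (for example, a CLI agent call and a check that the test suite passes); the hard iteration cap is our addition as a safety valve, not part of the proposal as described.

```python
from typing import Callable

def ralph_loop(run_agent: Callable[[str], str], prompt: str,
               is_done: Callable[[str], bool], max_iterations: int = 20) -> tuple[str, int]:
    """Re-invoke the agent with the same prompt until `is_done` accepts its output or the budget runs out."""
    for iteration in range(1, max_iterations + 1):
        # Progress is assumed to persist outside the prompt (files, git history) between calls.
        output = run_agent(prompt)
        if is_done(output):
            return output, iteration
    raise RuntimeError(f"exit condition not met after {max_iterations} iterations")

# Fake agent that "succeeds" on its third invocation.
attempts = iter(["tests failing", "tests failing", "ALL TESTS PASS"])
result, n = ralph_loop(lambda p: next(attempts), "Fix the failing build.", lambda out: "PASS" in out)
print(result, n)
```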

Join the discussion: discord.com/invite/cursor

n8n Atom 3.0 and 'Vibe Coding' Streamline Workflow Versioning

The n8n ecosystem is evolving with tools that bridge the gap between low-code and professional software engineering. khanph24 recently launched 'n8n Atom 3.0,' a VS Code extension that enables file-based workflow management. Interestingly, the extension was built through 'vibe coding' in Antigravity, Google's agent-first IDE. However, scaling these workflows remains a challenge. In the community, shira2299 highlighted critical failures in the n8n RAG demo, while flying_octopus is advocating for native HTTP node parallelization to reduce execution times from 12-15 seconds to under 5 seconds.
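The gain from parallelizing independent HTTP calls comes from overlapping their latency. n8n's node internals aren't shown here; this generic `asyncio` sketch simulates each request with a sleep (the URLs and the 0.2 s latency are made up) so it runs without a network.

```python
import asyncio
import time

async def fake_http_call(url: str, latency: float = 0.2) -> str:
    """Stand-in for an HTTP request; sleeps instead of touching the network."""
    await asyncio.sleep(latency)
    return f"200 {url}"

async def fetch_all(urls: list[str]) -> list[str]:
    """Issue all requests concurrently; gather returns results in input order."""
    return await asyncio.gather(*(fake_http_call(u) for u in urls))

urls = [f"https://api.example.com/item/{i}" for i in range(5)]
start = time.perf_counter()
results = asyncio.run(fetch_all(urls))
elapsed = time.perf_counter() - start
# Wall time tracks the slowest single call rather than the sum of all five.
print(f"{len(results)} responses in {elapsed:.2f}s")
```

Real APIs add rate limits, so a production version would cap concurrency (for example with `asyncio.Semaphore`).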

Join the discussion: discord.gg/n8n

IQuest-Coder and Solar-Open-100B Push Open-Source Limits

The open-source landscape is rapidly evolving as new models challenge closed-weight dominance. TrentBot highlighted IQuest-Coder-V1-40B, which reportedly achieves SOTA-level performance on coding benchmarks and is optimized for local deployment via llama.cpp. Simultaneously, Upstage has released a 'public validation' for Solar-Open-100B to resolve community disputes, clarifying it as a unique scaling of the Solar architecture. Experimental 'Franken-models' are also gaining traction, with a Llama 3.3 8B fine-tune surfacing on r/LocalLLaMA that aims to bring complex planning to smaller hardware, though paws9678 warned of a lack of proper attribution in the 'impersonator-model' scene.

Join the discussion: discord.gg/lmsys

Local Inference Costs Surge Amid Software Backend Breakthroughs

Building infrastructure for the agentic web is becoming increasingly expensive. andherpearlcollector noted that a professional-grade 1TB AI rig can now cost between $7,000 and $11,000. High-end storage expansion like the OWC Accelsior SE Nine-Drive NVMe is retailing for approximately $11,499 to handle massive dataset throughput.

On the software side, Ollama is moving toward broader hardware inclusivity via new Vulkan backend support, integrated through GitHub PR #13570. This move aims to optimize performance for RDNA4 and other non-CUDA GPUs. However, developers warn that hardware alone won't solve the 'intelligence gap'; models under 1B parameters consistently fail at reliable tool calling, necessitating a focus on refined architectures over sheer parameter count.

Join the discussion: discord.gg/ollama

The 'Johnny 5' Advantage in Embodied AI

In the realm of embodied agents, the 'Johnny 5' form factor—a tracked base with a humanoid upper body—is gaining favor over bipedal designs. goodlife0416 argues that adding legs is a 'waste of compute' for most site conditions. Tracked bases offer up to 40% higher energy efficiency and significantly lower latency by avoiding the 15-20 TFLOPS of dedicated compute required for bipedal gait stabilization. While bipedal robots excel at stairs, the immediate future of agentic robotics appears to favor hybrid designs that prioritize mobility and operational uptime on rugged terrain over human-like appearance.

Join the discussion: discord.gg/ollama

HuggingFace Model Notes

From 100-line 'tiny agents' to GUI-navigating behemoths, the shift from JSON schemas to direct code execution is redefining the agentic stack.

Welcome to the era of 'Tiny Agents' that punch way above their weight. Today, the agentic web is shedding the complexity of nested JSON schemas in favor of direct code execution. Hugging Face’s smolagents library is leading this charge, proving that high-performance agents don't need thousands of lines of orchestration—sometimes, 50 to 100 lines of Python is enough to outperform traditional tool-calling setups. This shift toward code-centric AI, validated by the GAIA leaderboard, suggests that logic is best handled natively, not via prompted schemas. But it's not just about how agents think; it's about where they act. We're seeing a massive push into GUI autonomy with ScreenSuite and the rise of 'Open Deep Research' tools that challenge the proprietary silos of OpenAI and others. While models like Hermes 3 provide the raw reasoning power, benchmarks like DABStep remind us that a significant 'reasoning gap' remains for complex data tasks. In this issue, we explore the frameworks, protocols, and models closing that gap, from 270M parameter edge models to 405B parameter instruction-followers. The message for builders is clear: simplify the stack, standardize the protocol with MCP, and focus on verifiable execution.

The Rise of the 'Tiny' Code-Centric Agent

The era of bloated agent frameworks is coming to an end. Hugging Face's smolagents library is spearheading a movement toward 'tiny' code-centric agents that favor direct Python execution over the 'JSON fatigue' of nested tool-calling schemas Aymeric Roucher. By allowing models to write and run code snippets natively, developers are seeing a massive jump in logical consistency. As @aymeric_ruan points out, these code-centric agents are now dominating the GAIA leaderboard, handling complex loops and data manipulation that leave JSON-based models stumbling. This lean approach is further bolstered by the Model Context Protocol (MCP). MCP acts as the architectural glue, enabling agents to connect to everything from Google Search to local SQLite databases with minimal overhead. We're seeing production-ready agents built in under 100 lines of code—a stark contrast to the heavy orchestration layers of 2024 huggingface/blog. This isn't just about brevity; it's about building agents that are easier to debug, faster to deploy, and fundamentally more capable of multi-step reasoning.
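The difference between JSON tool calling and a code action is easiest to see side by side. This is not the smolagents API; the tool, the model-output string, and `run_code_action` are hypothetical, and stripping builtins before calling `exec` is not a real sandbox, which is exactly why production setups run such code in isolated environments.

```python
# Hypothetical tool exposed to the agent.
def get_price(ticker: str) -> float:
    return {"AAA": 10.0, "BBB": 12.5, "CCC": 8.0}[ticker]

# JSON-style tool calling: one structured call (and one model round-trip) per lookup.
json_calls = [{"tool": "get_price", "args": {"ticker": t}} for t in ["AAA", "BBB", "CCC"]]

# A code action expresses the loop and the aggregation in one step (this string stands in for model output).
code_action = """
prices = {t: get_price(t) for t in ["AAA", "BBB", "CCC"]}
result = max(prices, key=prices.get)
"""

def run_code_action(code: str, tools: dict) -> object:
    """Execute model-written code with only the given tools and `max` in scope. NOT a security sandbox."""
    namespace = {"__builtins__": {"max": max}, **tools}
    exec(code, namespace)
    return namespace["result"]

print(len(json_calls), "JSON tool calls vs 1 code action ->", run_code_action(code_action, {"get_price": get_price}))
```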

Desktop Autonomy and Open Deep Research

Agents are finally breaking out of the text box and onto the desktop. With the release of ScreenSuite and ScreenEnv, H-Company is providing the standardized testing grounds needed to move GUI agents from novelty to utility H-Company. These frameworks measure success across 13 diverse operating system tasks, providing a high-fidelity environment for the next generation of visual navigators. Leading the charge is the Holo1 family of VLMs, which powers the Surfer-H agent to a state-of-the-art 81.4% on the ScreenBoard benchmark, proving that specialized visual grounding can outperform even the largest general-purpose models H-Company. Parallel to GUI navigation, Hugging Face's Open Deep Research project is democratizing autonomous investigation Open Deep Research. By employing a manager-worker architecture and integrating search APIs like Tavily and DuckDuckGo, this open-source tool challenges proprietary research silos. Whether it's navigating medical records with Google's MedGemma or automating academic bibliographies with ScholarAgent, the focus is shifting toward verifiable, autonomous work in specialized domains.

Specialized Engines: From Hermes to Edge

The hardware requirements for agency are diverging. On one end, Nous Research's Hermes 3 is pushing the boundaries of Llama 3.1, offering a 'neutrally aligned' engine that follows complex instructions without the moralizing friction of many instruction-tuned models. As @Teknium8 emphasizes, Hermes 3 prioritizes tool-calling accuracy, making it the preferred choice for high-stakes orchestration NousResearch/Hermes-3-Llama-3.1-405B. Meanwhile, 'Thinking' models like hxssgaa/Qwen3-8b-IntThink are incorporating interleaved chain-of-thought to force internal reasoning before execution, significantly reducing hallucinations. But perhaps most surprising is the rise of the ultra-small. The model TheReinventGuy/functiongemma-270m-it-mobile-actions shows that agentic power can be localized for mobile and edge devices. This 270M-parameter model can handle mobile-specific actions with low latency, suggesting that the future of the agentic web isn't just in the cloud; it's in your pocket, running locally and privately.

The Reasoning Gap: Benchmarking the Future

Despite rapid progress, a 'reasoning gap' still looms over the industry. The GAIA2 benchmark has raised the stakes, moving beyond basic assistant tasks to real-world scenarios that demand sophisticated planning Hugging Face. The data is sobering: the DABStep report shows that even titans like GPT-4o and Claude 3.5 Sonnet struggle with complex data manipulation, often scoring below 50% on tasks where humans achieve over 90% the DABStep technical report. This logic plateau is the next big hurdle for agentic builders. NVIDIA is stepping in to help bridge the gap for the global market, releasing a massive 6-million-item multilingual reasoning dataset NVIDIA. By providing the high-quality training data needed for non-English applications, NVIDIA is ensuring that the next leap in autonomous reasoning isn't limited to the English-speaking world. As benchmarks like FutureBench push models to their creative and logical limits, the race is on to see who can finally close the gap between human intuition and machine execution.