Recursive Logic and Lean Harnesses

The era of heavy wrappers is ending as recursive logic and code-based tool execution take center stage.

AgentBrief for Jan 05, 2026

X Pulse: Recursive Shifts

Stop feeding your agents tokens and start giving them variables.

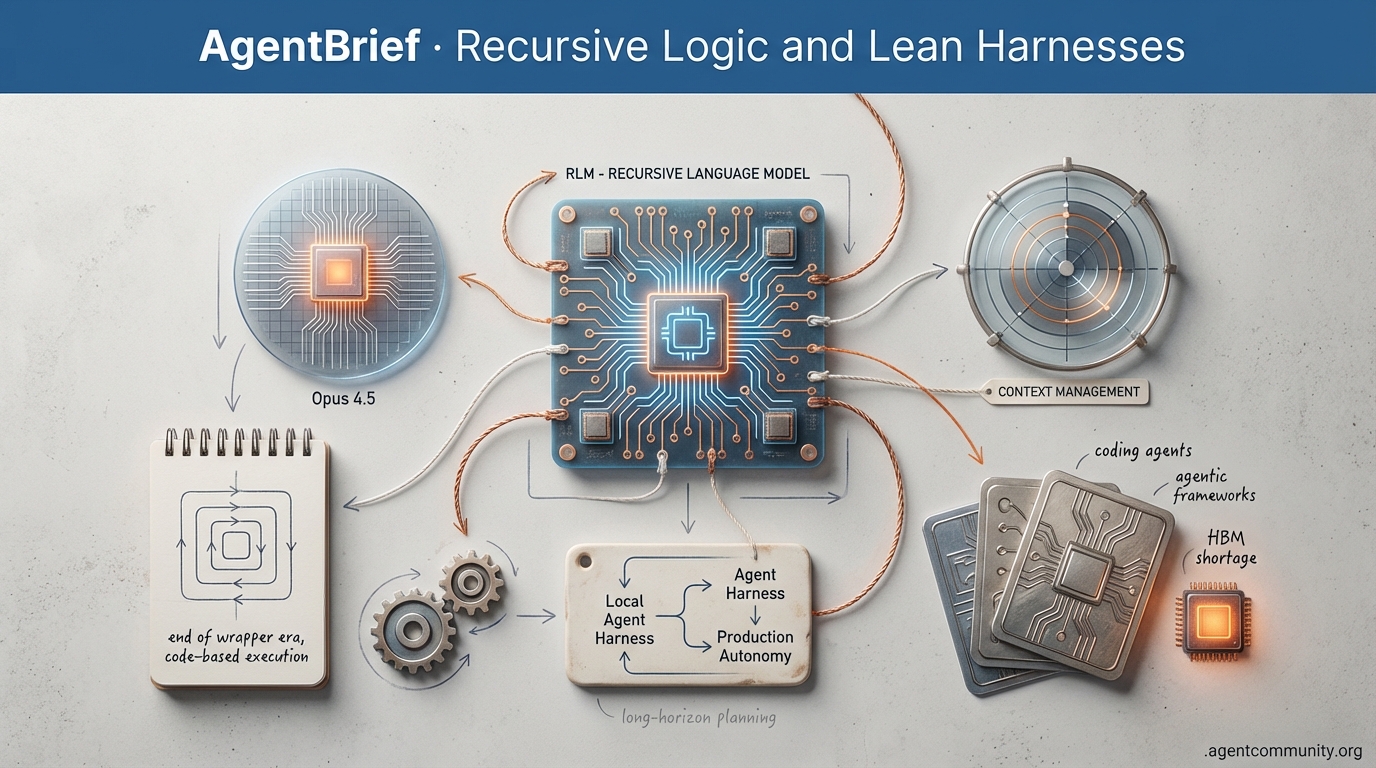

We are moving past the era where 'agentic' was a marketing buzzword for a simple loop. Today, the agentic web is taking shape as a distinct architectural layer. The shift from static prompt engineering to Recursive Language Models (RLMs) signals a future where agents manage their own 'RAM' by treating context as code variables rather than just a stream of tokens. This isn't just about longer context windows; it’s about agents having the agency to search and retrieve their own logic. Simultaneously, Anthropic is pivoting the developer experience from 'chatting with an IDE' to 'orchestrating a fleet.' With Claude Code’s Skills system, we are seeing the emergence of a modular, file-based package manager for agentic capabilities. For those of us shipping agents in production, this matters because it shifts the bottleneck from model intelligence to system design. The infrastructure—from HBM memory availability to standardized scientific protocols—is finally catching up to the ambitions of autonomous software. If you aren't building for long-horizon, recursive execution yet, you’re already behind.

Recursive Language Models Redefine Context Limits

Recursive Language Models (RLMs) have emerged as a groundbreaking paradigm shift in handling long-context tasks, treating input prompts as Python objects in a live environment rather than static token sequences, as @lateinteraction recently noted. This innovative architecture enables agents to manage context lengths exceeding 10M tokens by allowing the model to write code to search, chunk, and retrieve its own prompt as a variable. As @EmergentMind highlights, this effectively acts as 'RAM' for LLMs, outperforming traditional methods on long-horizon tasks. Unlike traditional RAG, which often struggles with 'context rot' as input length grows, RLMs maintain performance across massive token counts @lateinteraction.

The implications for agent builders are profound: we are moving toward a world where context management is offloaded to code, potentially reducing the need for ever-larger context windows @avgrocketman. Industry sentiment from @MikelEcheve suggests that RLMs may offer significant cost advantages by selectively processing relevant prompt snippets via recursive sub-calls. While specific benchmarks are still emerging, some users have already observed performance gains of up to 110% over frontier models like GPT-5 in complex, long-context scenarios @Joelc_eth. This approach is being hailed by @dukejones as the solution to reasoning degradation, scaling effective context length by orders of magnitude without sacrificing accuracy.

Claude Code 'Skills' Redefine Agentic Coding Over Custom GPTs

The agentic developer landscape is evolving as Anthropic’s Claude Code introduces 'Skills,' a file-based package system enabling agents to build and persist custom toolsets for complex tasks @btibor91. Unlike OpenAI’s Custom GPTs, which rely on predefined configurations, Claude Code Skills empower autonomous agents with modular, reusable components that adapt over multiple interactions. According to @rileybrown, the system has reached a 'usefulness tipping point' with Claude 4.5 Opus's superior planning, allowing agents to self-correct and iterate for up to 20 minutes without looping errors @Ji_Ha_Kim.

Developers are increasingly vocal about Claude’s edge in technical implementation, particularly its ability to manage 100 subagents in parallel for a single task—a feat that redefines orchestration for TUI-based platforms @InnaLyceyum. This framework, paired with subagent orchestration, reportedly allows for 80% faster research and crisis resolution in production environments like Kubernetes clusters @codingscape. However, contrarian takes from @kunalgoesbyken caution that production use requires careful cost management to avoid drowning in high expenses. Despite the learning curve, the consensus from @somi_ai is that Anthropic's focus on agentic primitives like Skills and MCP offers unparalleled configurability compared to model-agnostic tools.

SCP Protocol Aims to Build Global Network of Scientific AI Agents

The Science Context Protocol (SCP) has emerged as a groundbreaking open-source standard designed to integrate isolated scientific AI agents into a cohesive, interoperable network. As @omarsar0 noted, this protocol facilitates multi-institution, agent-driven scientific discovery by providing a secure layer for collaboration on complex research. This vision aligns with research like SAGA, which automates objective function design for discovery, as detailed by @dair_ai.

While the potential for a 'global web of autonomous agents' is seen as foundational for 2026, challenges in coordination remain. As @dair_ai points out, communication costs in multi-agent systems could still hinder scalability. Furthermore, the broader hurdle of training multi-agent systems for effective collaboration—beyond individual performance—remains a significant barrier for the community, as discussed by @omarsar0.

SWE-EVO Benchmark Redefines Coding Agent Evaluation

The introduction of SWE-EVO marks a significant shift in focus towards maintenance and iterative development for coding agents. As @omarsar0 highlights, this benchmark addresses the reality that software maintenance accounts for up to 80% of engineering efforts, a complexity often ignored by one-off generation benchmarks. This perspective is reinforced by @GregKamradt, who argues for more realistic assessments of agent capabilities in evolving codebases.

For agent builders, SWE-EVO is critical because it targets the long-horizon tasks where current agents often falter. While it represents a step forward, @GregKamradt notes that it may still lack testing for dynamic real-world scenarios like ambiguous requirements. Nevertheless, the benchmark is expected to reshape how agentic systems are developed, emphasizing the importance of robustness in software evolution @omarsar0.

HBM Shortages Threaten Agentic AI Infrastructure

High-bandwidth memory (HBM) shortages are emerging as a critical barrier for agentic systems that rely on rapid state management. As @rohanpaul_ai observes, even the most advanced GPUs cannot deliver performance without sufficient HBM, a systemic challenge that could persist through late 2027. This shortage is pushing companies to prioritize infrastructure efficiency over pure model scaling, as noted by @FTayAI.

The ripple effect extends beyond data centers, with memory production reallocations potentially raising consumer electronics prices by 5-20% in 2026 @rohanpaul_ai. While some, like @FTayAI, fear a growing divide where only well-funded corporations can afford the necessary infrastructure, others suggest that innovations in memory-augmented LLMs could mitigate these constraints @rohanpaul_ai.

Quick Hits

Agent Frameworks & Orchestration

- Mem0 natively integrates with CrewAI and Langflow following a $24M funding round @taranjeetio.

- Hugging Face's smolagents library enables 'code-as-action,' solving 60% more GAIA tasks than JSON-based agents @agentcommunity_.

Memory & Context

- H-MEM architecture uses a four-level semantic hierarchy for 5x faster inference in memory-heavy tasks @adityabhatia89.

- M+ mechanism extends knowledge retention to 160k tokens with minimal GPU overhead via dynamic retrieval @adityabhatia89.

Tool Use & Navigation

- NestBrowse limits web-searching agents to 4 core actions to prevent context drowning on dynamic sites @rohanpaul_ai.

- A new SQL memory layer for persistent agent state has been released to manage complex multi-session trajectories @agentcommunity_.

Models for Agents

- Opus 4.5 tops LiveBench leaderboards, confirming its status as the preferred reasoning engine for agentic workflows @bindureddy.

- DeepSeek V3 outperforms rivals on collegiate math problems, showcasing superior logical grounding for agents @rohanpaul_ai.

Developer Experience

- OpenCode v1.1.1 ships a major permissions system overhaul to improve security for production agents @thdxr.

- Claude Code's plan mode significantly reduces bad model assumptions by forcing structured reasoning @omarsar0.

- AgentReuse addresses latency bottlenecks by caching and reusing plans for identical agent requests @dair_ai.

Reddit's Architecture Deep-Dive

Meta acquires Manus AI as developers ditch heavy frameworks for lean agentic harnesses.

The agentic landscape just hit a massive inflection point. Meta’s $500M acquisition of Manus AI isn't just another corporate consolidation; it’s a clear signal that the 'wrapper' era is dead. We are moving toward a world where general-purpose agentic architectures are integrated directly into the platform level to solve the 'hallucination gap' in tool-calling. This strategic shift is mirrored in the developer community, where we're seeing a mass 'graduation' from heavy, abstract frameworks like LangChain toward 'agent harnesses.' These lean execution environments prioritize low latency and direct tool-use precision over rigid abstractions.

The technical hurdles of 2024—context exhaustion and semantic collapse—are finally meeting their match. Apple’s CLaRa is redefining how we think about RAG by compressing thousands of tokens into salient representations, while new 'MCP Gateways' are preventing tool-bloat from choking our models. Even the hardware is catching up, with NVIDIA’s 72GB VRAM cards making local 70B parameter agents a reality. Today’s issue explores how these infrastructure pieces are snapping together to move agents out of the demo trap and into production-ready autonomy.

Meta Acquires Manus AI for $500M r/aiagents

Meta has officially acquired Manus AI in a deal valued at approximately $500M, signaling a massive pivot toward autonomous agent capabilities. According to industry analysis by @rowancheung, the acquisition allows Meta to leverage Manus's 'General Purpose' architecture for proactive task execution across its apps. Manus AI, which reportedly hit a $100M ARR and a $125M revenue run rate, will be integrated into Meta's consumer and business product suites to solve the 'hallucination gap' in tool-calling, as discussed by u/SolanaDeFi. This move follows a competitive funding environment involving Benchmark, as noted by @bindureddy, validating high-valuation ceilings for agentic startups. The industry is watching how Meta will enable multi-step reasoning across its vast ecosystem, suggesting that the next phase of the agentic web will be dominated by platform-integrated agents rather than standalone wrappers.

Developers Graduate from Frameworks to 'Agent Harnesses' r/aiagents

A fundamental shift is occurring in AI engineering as practitioners move away from heavy, abstract frameworks like LangChain toward 'agent harnesses' optimized for tight execution loops. u/wait-a-minut argues that the industry is maturing past the generic abstractions of 2024 into nuanced optimizations for compaction, tool-use precision, and memory. Tools like Claude Code and OpenHands are now cited as the 'ideal harnesses' because they provide a bare-metal environment for agents to operate, rather than forcing them through rigid, pre-defined DAGs. This evolution emphasizes the 'ping-pong' pattern of multi-step reasoning, where data bounces between the LLM and local backends for constant validation. Technically, the 'harness' architecture differs from frameworks by prioritizing the execution context over the library abstraction, focusing on agentic memory—a system where the agent's context evolves dynamically through use. Industry sentiment suggests that this move toward 'thin wrappers' can reduce token-to-action latency by up to 40% through more direct integration with development tools.

Deco MCP Mesh and the Rise of Agentic Gateways r/mcp

As the Model Context Protocol (MCP) gains traction, developers are encountering a 'tool-bloat' wall where connecting 100+ servers can consume the majority of a model's context window with tool definitions. u/babydecocx has introduced Deco MCP Mesh, an open-source runtime gateway designed to act as a control plane for these servers. By managing tool discovery and dynamically routing requests, Deco prevents context exhaustion and improves agent reasoning performance. The ecosystem's expansion is further evidenced by specialized implementations like the SEC EDGAR server for financial filings r/mcp and the Civo Cloud server for managing Kubernetes clusters r/mcp. This shift toward 'agentic gateways' allows for multi-server orchestration while maintaining efficiency, moving beyond simple tool-calling into robust operational infrastructure.

Apple’s CLaRa and 585x Compression Break the RAG Memory Wall r/AgentsOfAI

Traditional RAG systems are increasingly suffering from 'Semantic Collapse,' where high-volume document retrieval results in noise-heavy outputs. u/SKD_Sumit identifies Apple’s CLaRa (Compressed Language-Augmented Retrieval Architecture) as a breakthrough, utilizing a Salient Compressor to prune irrelevant tokens before they reach the LLM. Technically, CLaRa employs a cross-attention mechanism to transform thousands of retrieved tokens into a compact set of latent representations, which can reduce inference latency by up to 3x and significantly lower the memory footprint of long-context prompts. Beyond context compression, the industry is shifting toward massive embedding optimization to bypass physical hardware limits. u/Sorry-Reaction2460 highlights 585x semantic compression techniques, such as binary quantization and Matryoshka embeddings, which allow agents to manage multi-gigabyte vector databases within constrained RAM environments. These advancements are critical for preventing system crashes in multi-agent environments where 'continuous self-evolving' memory requires real-time organization of long-term history.

Local Inference Breakthroughs and 72GB VRAM r/LocalLLaMA

The hardware landscape for local agents is undergoing a seismic shift with NVIDIA’s release of the RTX PRO 5000, boasting a massive 72GB of VRAM. As noted by u/Right_Pea_2707, this capacity allows developers to run 70B+ parameter models entirely on-device, ending reliance on cloud clusters for high-fidelity agentic workflows. Performance on the software side is matching this growth; the ik_llama.cpp project has achieved a 3x to 4x speed improvement for multi-GPU configurations by reducing synchronization overhead u/Holiday-Injury-9397. On the CPU front, developers are achieving 30x real-time transcription speeds using NVIDIA's Parakeet model on standard i7 processors u/SlightPossibility331. However, the consumer-grade RTX 5070 Ti has faced community backlash, with benchmarks revealing a 50% performance degradation when model size exceeds VRAM, a regression that could impact agents utilizing large-context windows.

Escaping the Agentic Demo Trap for Enterprise r/aiagents

Enterprise leaders are pivoting from surface-level generative chatbots to autonomous 'decision-making' agents. u/According-Site9848 highlights that true enterprise value lies in multi-system coordination. This shift is already manifesting at Klarna, where AI assistants now handle two-thirds of all customer service chats, performing the equivalent workload of 700 full-time agents. Despite these successes, the 'Demo Trap' remains; agents often fail in production due to network latencies or unexpected UI shifts. u/Yuna_huang argues that training in synchronous environments leaves agents fragile to the asynchronous reality of production. To combat this, developers are adopting TraceML for real-time visibility into training stalls and standardizing around benchmarks like AgentBench to verify reliability in high-stakes environments.

OpenAI Leadership Shifts and Hardware Ambitions r/OpenAI

Internal shifts at OpenAI continue as Jerry Tworek, a seven-year veteran and key lead for the o1 model, announces his departure @JerryTworek. This follows reports of 'rerouting' issues where users are allegedly being served 'gpt-5-preview' responses in a silent A/B test u/W_32_FRH. On the financial front, OpenAI's Greg Brockman has significantly boosted a $102 million political war chest, highlighting the intersection of AI leadership and national policy. Simultaneously, rumors of an OpenAI hardware interface—a physical 'AI Pen' developed with Jony Ive's LoveFrom—suggest the company is preparing a screenless, voice-first wearable designed to integrate ChatGPT directly into daily tasks r/ArtificialInteligence.

Discord's Reasoning Hub

Anthropic's massive thinking window meets local inference speedups to redefine agentic reliability.

The agentic web is shifting from fast-talking chatbots to slow-thinking strategists. Today's focus on Claude 4.5 Opus Thinking, sporting a massive 64k token reasoning buffer, marks a turning point for developers who have struggled with the hallucination ceiling in complex multi-step tasks. While Sonnet remains the speed king, Opus is positioning itself as the brain of the operation, leading many to adopt a hierarchical architecture: Opus for planning, and smaller models for execution. But compute isn't just a cloud story. The local inference community is fighting back with the ik_llama.cpp fork, delivering up to 4x speedups that make high-fidelity agent simulations viable on home-grown hardware. We're also seeing the standardization of agent communication via the Model Context Protocol (MCP), where modular skills are replacing the monolithic agent blobs of last year. However, as we solve the technical hurdles, the regulatory ones are mounting—the EU's Cyber Resilience Act is no longer a distant threat but a design requirement. In today's issue, we dive into the performance gains, the migration friction in workflow tools like n8n, and why VSLAM is the unsung hero of the robotics edge. It's a busy day for anyone building the autonomous future.

Opus 4.5 Thinking: 64k Token Limit Redefines Strategic Reasoning Benchmarks

The debate over superior models for complex reasoning has transitioned toward Claude 4.5 Opus Thinking, which now supports a massive 64k thinking token limit. In the LMArena community, gitwennyfive and noteveno highlighted that this capacity is a significant upgrade over the 32k variant, providing a massive advantage for long-form reasoning and high-stakes strategic planning. According to recent performance tracking on LiveBench.ai, the Thinking version of Opus shows a marked lead in multi-step logical deduction compared to its Sonnet counterparts. Industry observers like @AI_Analyst have noted that while Sonnet is optimized for speed, the 64k buffer in Opus allows for deeper internal chain-of-thought verification, effectively reducing hallucination rates. However, the high resource cost remains a primary concern. ehm noted that Opus maxes out usage limits almost immediately, leading many to adopt the Orchestrator-Subagent pattern. As discussed by hime.san, this architecture utilizes Opus for high-level orchestration while delegating sub-agent tasks to Sonnet to maintain cost efficiency. Join the discussion: discord.gg/lmarena

Multi-GPU llama.cpp Fork Achieves Up to 4x Speedup in Local Inference

A significant performance breakthrough for local LLM inference has emerged via the ik_llama.cpp project, a performance-optimized fork maintained by ikawrakow. This fork achieves a 3x to 4x speed improvement for multi-GPU configurations by optimizing memory bandwidth and quantization overhead through specialized Importance Quantization (IQ) methods. This development is critical for developers running large-scale agentic workflows on local hardware clusters, where inference latency is often the primary bottleneck. While users like ashtray9843 argue that consumer GPUs still lack the bandwidth for meaningful training of adult size models, the inference gains allow for more complex multi-agent simulations on existing hardware. Discussion in the #LocalLLM channel suggests that even mobile setups like the RTX 2060 are being pushed to their limits using tools like Unsloth and LoRA, as noted by nunodonato. Join the discussion: discord.gg/localllm

FastMCP and Modular Skills Drive the Model Context Protocol Ecosystem

The Model Context Protocol (MCP) ecosystem is rapidly evolving as developers shift from monolithic agent builds toward modular skills. A key driver is FastMCP, a high-level Python framework that simplifies the creation of tools and resources. eng.ziad_62329 highlighted how this implementation allows agents to dynamically call specific functionalities. This modularity is crucial for efficiency; as ia42 noted, converting complex tools into MCP skills significantly reduces token overhead by keeping the context window focused on relevant data. Current popular implementations include the mcp-searxng server, which facilitates deep research via local or self-hosted instances ihor-sokoliuk. This is often paired with local LLM setups, such as those discussed by datatics, where Ollama-hosted models bridge with tools like SearXNG to perform private, autonomous research. Join the discussion: discord.gg/claude

Prompt Arena and LiveBench Redefine LLM Strategic Evaluation

Static benchmarks like MMLU are increasingly seen as insufficient for evaluating autonomous agents due to data contamination. Prompt Arena has emerged as a critical evaluation platform where models face strategic problems in dynamic environments, forcing them to rely purely on multi-step planning. According to klawikowski, model performance gaps become obvious when they are forced to operate under these constraints without memory shortcuts. This shift toward contamination-free testing is further supported by LiveBench, which releases new problems monthly to ensure models cannot rely on training data memorization. Developers are prioritizing domain-specific evals over generic scores to measure real-world utility. philipsman highlighted that needle in a haystack benchmarks remain the gold standard for testing a local LLM's ability to retain information across massive contexts. Join the discussion: discord.gg/lmarena

VSLAM and LFM Fusion: The New Standard for Edge Autonomy

In the robotics space, practitioners are solidifying VSLAM (Visual Simultaneous Localization and Mapping) as the mandatory foundation for navigation. Expert contributor .joshmakestuff argues that while VLMs provide the agent brain, VSLAM must handle core localization to preserve compute. For hardware like the Jetson Orin NX, developers are targeting processing caps of 90-100 Hz using optimized stacks like NVIDIA Isaac ROS VSLAM to ensure enough VRAM and CPU headroom remains for reasoning. The rise of budget-friendly autonomous agents is increasingly driven by ultra-efficient models such as Liquid AI’s LFM2-VL 1.6B. As documented by Liquid AI, these models can fit into roughly 3GB of VRAM when running at Q4 quantization, making real-time on-device autonomy feasible. Join the discussion: discord.gg/huggingface

n8n v2 Migration: Solving Cloud Startup Loops and Multi-Tenant Scaling

The transition to n8n v2 has introduced significant architectural improvements, but users are encountering friction during the upgrade. As noted by juanazabaleta, cloud instances frequently become stuck post-migration, a state often triggered by database schema lock-ins. Experts like zunjae emphasize that the official migration tool is essential, particularly when dealing with the respond to webhook node, which has undergone logic changes that can cause 100% execution stops if not manually reconfigured. Simultaneously, oyedeyeye argues that for compliance and data sovereignty, separate installations remain superior to shared environments, sparking a surge in requests for MSP-grade reseller portals. Join the discussion: discord.gg/n8n

EU Cyber Resilience Act Mandates Radical Security by Design for AI Agents

Compliance is becoming a primary concern for developers building agentic systems for European markets. akshay.kanthed is leading discussions on the EU Cyber Resilience Act (CRA), which imposes strict security requirements on software with digital elements. For AI agents that have the authority to execute code, these regulations necessitate radical changes in architecture, particularly around execution safety. Under the CRA, manufacturers must ensure products are delivered without known exploitable vulnerabilities and provide a Software Bill of Materials (SBOM). emperorhalfounce advises that developers must set invariants in stone to curb accidental drift. While Docker environments are the baseline for safety, production-grade agents may face fines up to €15 million for failing to provide adequate sandboxing. Join the discussion: discord.gg/claude

HuggingFace Code-Agent Labs

Hugging Face’s smolagents and the MCP movement are proving that the future of the Agentic Web is lightweight, local-first, and written in Python.

We are witnessing a fundamental pivot in how agents interact with the world. For the last year, developers have been shackled by the 'JSON tax'—the rigid, verbose, and often brittle schemas required to make LLMs talk to external tools. That era is ending. With the release of smolagents and the surge in Model Context Protocol (MCP) adoption, the community is moving toward 'Code Agents' that write and execute Python to solve problems. This isn't just a stylistic choice; it's a massive performance win, reducing workflow steps by 30% and outperforming traditional agents on the GAIA benchmark. This issue explores the decentralization of deep research, the rise of 50-line micro-agents, and the inevitable hardware shift as Intel and Google optimize for these specialized agentic workflows. We are moving away from monolithic black boxes toward transparent, local-first systems where the 'reasoning chain' is as inspectable as the code itself. For builders, the message is clear: stop building complex wrappers and start enabling your agents to code their own way out of a problem.

Code-Writing Agents Take Center Stage with smolagents

Hugging Face has introduced smolagents, a library focused on 'code agents' that write their actions in Python rather than structured JSON. This shift addresses the limitations of traditional tool-calling by allowing agents to handle complex logic, loops, and data manipulation directly. Research indicates that code-based actions can reduce the number of steps in complex workflows by up to 30% compared to JSON-based alternatives. The library has already demonstrated significant performance, with the Transformers Code Agent beating the GAIA benchmark, a standard for general AI assistants. The library now supports Vision Language Models (VLMs) like Idefics3, enabling agents to 'see' and act within multimodal environments as detailed by Hugging Face.

Practitioners are rapidly adopting this lightweight approach, as evidenced by the First_agent_template which has now garnered over 850 likes. To ensure execution safety, smolagents integrates with sandboxed environments like E2B, preventing arbitrary code from compromising local systems. For production monitoring, the integration with Arize Phoenix provides detailed tracing and evaluation of these code-based actions using the OpenInference standard. This ecosystem move suggests a definitive preference for the flexibility of code-as-action over the rigidity of predefined tool schemas.

Open-Source Deep Research: Decentralizing Autonomous Search

In a strategic move to democratize high-level reasoning, Hugging Face has released its open-source 'Open Deep Research' framework, built on the smolagents library. Unlike OpenAI's proprietary model, this implementation uses a CodeAgent architecture, where the agent writes and executes Python code to perform multi-step web searches and data synthesis. This allows developers to inspect the full 'reasoning chain,' addressing the transparency gap in black-box systems. Community projects like MiroMind-Open-Source-Deep-Research are already utilizing this to provide real-time search capabilities with user-configurable API providers like Tavily and Serper.

To evaluate these autonomous agents, the community has turned to FutureBench, a benchmark designed to test an agent's ability to perform 'future-oriented' reasoning on events occurring after the training cutoff. Initial results indicate that while open-source agents excel at retrieval, they still face a 'reasoning-creativity trade-off'. As noted in the paper Agentic Information Retrieval, increasing search complexity can improve factual accuracy by up to 35% but may lead to looping behaviors. The open-source approach mitigates this by allowing developers to implement custom 'Self-Correction' loops, where the agent verifies the relevance of a source before integration.

Micro-Agents and MCP: The Shift Toward Local-First AI

The 'Tiny Agent' movement is gaining momentum by leveraging the Model Context Protocol (MCP) to provide tools to LLMs with minimal overhead. Hugging Face successfully demonstrated an MCP-powered agent in just 50 lines of code, later expanded to 70 lines for more robust Python-specific implementations. This approach prioritizes simplicity and interoperability, allowing developers to bypass the 'integration tax' of custom tool-calling by using a standardized protocol.

Security is a major differentiator for MCP-based micro-agents. Unlike traditional cloud-hosted frameworks, MCP enables a local-first architecture where the agent interacts with data sources through a secure local bridge. Practical applications are emerging from the Agents-MCP-Hackathon, including specialized tools like sipify-mcp and pokemon-mcp. These experiments demonstrate that the barrier to building agentic capabilities is dropping, allowing 'micro-agents' to be embedded directly into existing software stacks without monolithic frameworks.

The Evolution of OS-Native GUI Agents

The landscape of Vision Language Models (VLMs) is shifting toward full-stack operating system automation. The Holo1 family, developed by Hcompany, powers the 'Surfer-H' agent, which is specifically optimized to reduce visual latency by streamlining token processing during high-frequency UI updates. This architectural choice addresses the primary bottleneck in cross-application workflows where traditional agents often fail due to the 'semantic gap' between raw pixels and actionable UI metadata. To standardize progress, ScreenSuite has emerged as a comprehensive evaluation framework, measuring performance across hundreds of diverse tasks using Step Success Rate (SSR) and Goal Completion Rate (GCR). Complementary platforms like ScreenEnv facilitate the deployment of these full-stack operators, while Smol2Operator focuses on efficient post-training to enhance 'computer use' reliability.

Edge Intelligence: FunctionGemma and Intel-Accelerated Qwen

The release of the FunctionGemma-270M series marks a significant step toward 'agentic edge' computing. Fine-tunes like katharsis/functiongemma-270m-it-mobile-actions demonstrate that 270M parameter models can handle basic mobile OS commands with high accuracy, matching the success rates of larger models like GPT-4o-mini while maintaining a drastically smaller memory footprint. Meanwhile, Intel has introduced a method to accelerate Qwen3-8B Agents on Intel Core Ultra processors. By utilizing depth-pruned draft models for speculative decoding, Intel achieves 2-3x inference speedups, enabling complex agentic reasoning on consumer AI PCs. Specialized routing layers like bhaiyahnsingh45/functiongemma-multiagent-router now orchestrate tasks between these tiny specialized agents to build hierarchical on-device systems.

Jupyter Agents and the Reasoning-Creativity Trade-off

Training agents to reason within interactive environments is a key focus for the next generation. Jupyter Agent 2 by QuantStack and Hugging Face is designed to navigate notebooks, enabling agents to perform data science tasks by observing cell states. However, research in The Reasoning-Creativity Trade-off warns that reinforcing correctness in reasoning loops can lead to a significant collapse in semantic entropy, reducing creative output as the model converges on narrow paths. To mitigate this, researchers are turning to test-time compute strategies. Projects like Kimina-Prover apply test-time RL search using verifiers to explore diverse solution paths in Lean 4. This is complemented by the DABStep benchmark, which evaluates multi-step reasoning in data-heavy contexts, shifting the burden of correctness from training weights to search-time verification.