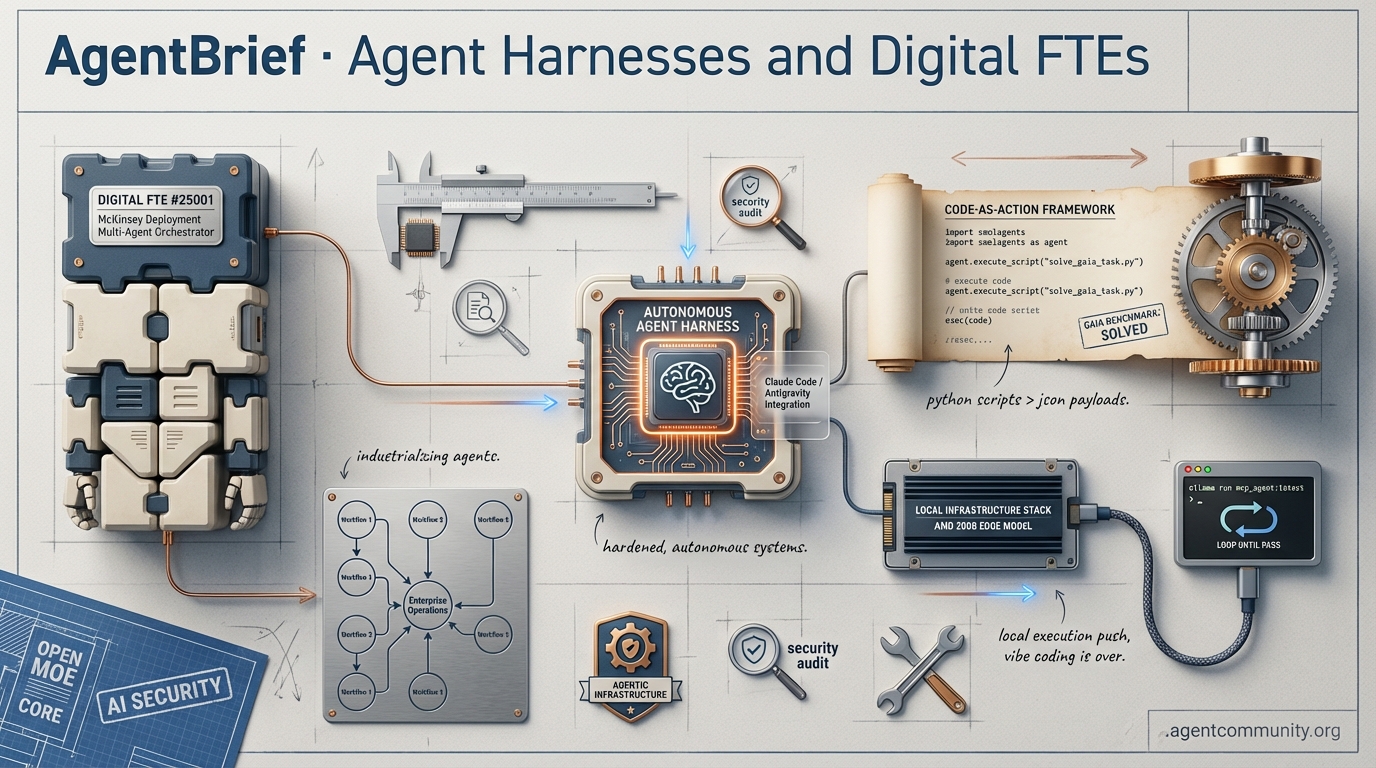

Agent Harnesses and Digital FTEs

The Agent Harness Era: We are moving from LLMs as 'brains' to agents with 'bodies', meaning dedicated infrastructure like Claude Code and Google Antigravity that grounds autonomous agents in professional software environments and local terminals.

Industrializing Digital FTEs: McKinsey’s deployment of 25,000 agents signals the arrival of the 'Digital FTE,' shifting the focus from simple text generation to multi-agent orchestrators that manage complex operational workflows at scale.

Code-as-Action Dominance: The success of frameworks like Hugging Face’s smolagents suggests that letting agents write and execute Python scripts, rather than emit rigid JSON payloads, is key to solving complex reasoning tasks and benchmarks like GAIA.

Local Infrastructure Push: Between AMD's hardware for running 200B-parameter models at the edge, Ollama’s MCP integration, and persistent cloud reliability issues, the agentic stack is rapidly consolidating around local execution and 'loop until pass' patterns.

The shift from 'vibe coding' to hardened, autonomous systems is here, led by local harnesses and code-as-action frameworks.

AgentBrief for Jan 14, 2026

The X Feed

Stop prompting and start orchestrating: the infrastructure for autonomous agents is moving from the cloud to your local terminal.

The narrative in the agentic web is shifting from the 'brain' to the 'body.' For too long, we’ve obsessed over frontier model benchmarks while ignoring the environments where agents actually live and work. That changes now. We are entering the age of the agent harness: a dedicated infrastructure layer that grounds autonomous agents in professional software environments. Whether it is Claude Code evolving into a terminal-native OS or Google’s Antigravity automating the full project lifecycle, the focus is moving toward real-world state. This isn't just about code generation; it is about autonomous execution. Simultaneously, AMD is making a massive play for the 'sovereign agent' with hardware that can run 200B-parameter models at the edge. If you are still building thin wrappers, you are falling behind. The future belongs to those building specialized harnesses that manage agentic memory, error correction, and local compute. This issue explores the tools and hardware breakthroughs that let us stop 'vibe coding' and start shipping robust, autonomous systems that don't need a human to hold their hand.

The Rise of Autonomous Agent Harnesses: Beyond the Chatbox

The era of the 'agent harness' has officially arrived, shifting developer focus from simple chatbots to sophisticated environments that manage agentic workflows. As @JulianGoldieSEO noted, tools like Google’s Antigravity are now enabling developers to describe high-level requirements and collaborate with agents to build full projects, automating everything from initial planning to final deployment. This shift is epitomized by Claude Code, which is evolving rapidly with features like skill hot-reloading. According to @AgentCommunity_, this allows new capabilities to be integrated mid-stream without session restarts, significantly boosting productivity for complex, long-running tasks.

This trend underscores a competitive race to build the best orchestration layer for autonomous coding agents. Builders are creating specialized agents that manage codebases directly in the terminal and automate Git operations, bridging the gap between intuitive 'vibe coding' and engineering precision. As @aakashgupta pointed out, these harnesses act as a full development team inside an IDE. In a striking anecdote shared by @yusufkrfz, a Google engineer reportedly claimed that Claude Code built in one hour what had previously taken their team a year.

The shift suggests that future development will hinge not just on the model itself but on the harness that grounds agents in professional environments. @_simonsmith predicts that by the end of 2026, mainstream AI tools could proactively suggest and create new apps daily rather than waiting for user prompts. While excitement dominates, @omarsar0 urges developers to invest in reusable workflows now, as these patterns will compound in value as harnesses and underlying models continue to mature.

AMD Unveils Ryzen AI Halo for Local 200B-Parameter Models

At CES 2026, AMD CEO Lisa Su laid out a bold vision of a 100x surge in AI compute demand, emphasizing a pivot toward powerful local processing for autonomous agents. As reported by @FTayAI, central to this strategy is the Ryzen AI Halo, a platform designed to run models of up to 200 billion parameters locally. The hardware targets developers who want to run heavyweight open-weight models like Llama-3 at the edge, redefining the economics of private, autonomous agents by cutting out the latency and cost of cloud-based inference.

AMD’s 'scale-out' approach prioritizes edge optimizations to reduce dependency on cloud infrastructure, offering significant advantages in data privacy and autonomy. According to @agentcommunity_, hardware like the Ryzen AI Halo—equipped with 128 GB of DRAM and ROCm support—allows developers to bypass the 'subscription surveillance' model of cloud providers. Furthermore, the integration of 50-60 TOPS NPUs suggests that 2026 will be the year sovereign agents operate without constant internet reliance, as noted by both @BradWardFight and @NickADobos.
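How does a 200B-parameter model fit in 128 GB? The arithmetic below is a rough sketch, assuming a dense model and counting weights only (no KV cache, activations, or OS overhead); the posts cited here do not say which precision AMD assumes. At 16 bits the weights alone need 400 GB and at 8 bits 200 GB, so the 200B figure implies roughly 4-bit quantization, which leaves about 28 GB of headroom.

```python
# Back-of-the-envelope weight memory for a dense 200B-parameter model.
# Weights only: KV cache, activations, and runtime overhead are ignored.

PARAMS = 200e9   # 200 billion parameters (the figure cited for Ryzen AI Halo)
DRAM_GB = 128    # memory cited for the platform


def weight_gb(params: float, bits_per_weight: float) -> float:
    """Gigabytes (10**9 bytes) needed to hold the weights at a given precision."""
    return params * bits_per_weight / 8 / 1e9


for label, bits in [("FP16", 16), ("INT8", 8), ("4-bit", 4)]:
    gb = weight_gb(PARAMS, bits)
    verdict = "fits" if gb < DRAM_GB else "does not fit"
    print(f"{label:>5}: {gb:4.0f} GB of weights -> {verdict} in {DRAM_GB} GB")
```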

Reactions from the builder community suggest this is a direct challenge to cloud dominance. The compact form factor, likened to an Apple TV by @BradWardFight, makes it a practical option for local agentic workloads. While specific benchmarks are still emerging, @agentcommunity_ argues that handling massive models locally by Q2 2026 could make AMD the primary infrastructure provider for the next generation of privacy-first agent developers.

HardGen and MemRL: Learning from Agentic Failure

Recent advancements in agent training are pivoting towards error-based learning, moving away from 'perfect' demonstrations. The HardGen approach, highlighted by @rohanpaul_ai, transforms tool-use failures into training data, allowing a 4B parameter model to rival much larger systems by learning from its own mistakes. This is a critical breakthrough for reliability in practical, messy environments.
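HardGen's actual pipeline is not described in the post, so the following is only a sketch of the general idea, assuming a hypothetical trace format (ToolStep) in which each step records the prompt, the emitted call, and any tool error. It pairs each failed call with the agent's eventual successful retry, producing supervised examples that teach a small model to read an error message and repair its own call.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ToolStep:
    prompt: str            # context the model saw before acting
    call: str              # the tool call it emitted
    error: Optional[str]   # error returned by the tool, or None on success


def failures_to_examples(trace: list) -> list:
    """Turn each failed call plus its error into an input whose target is the recovery call."""
    examples = []
    for i, step in enumerate(trace):
        if step.error is None:
            continue
        # The first later call that succeeded is treated as the correction.
        fix = next((s for s in trace[i + 1:] if s.error is None), None)
        if fix is None:
            continue  # the agent never recovered, so there is no target to learn
        examples.append({
            "input": f"{step.prompt}\nPrevious call: {step.call}\nError: {step.error}",
            "target": fix.call,
        })
    return examples


trace = [
    ToolStep("Get weather for Paris", 'weather(city="Paris", unit="kelvinn")', "invalid unit 'kelvinn'"),
    ToolStep("Get weather for Paris", 'weather(city="Paris", unit="celsius")', None),
]
print(failures_to_examples(trace))
```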

Complementing this, the MemRL framework lets frozen models improve after deployment by selectively reusing episodic memories. As @rohanpaul_ai explains, this method sidesteps the high cost of retraining while boosting performance in complex reasoning. By letting agents retrieve relevant past episodes on demand instead of carrying every prior interaction in context, developers can keep context windows from overflowing and improve long-horizon decision-making @rohanpaul_ai.
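MemRL's exact algorithm is not spelled out here; the sketch below assumes only the two ingredients the summary describes: the base model stays frozen, and what improves is a learned utility score attached to each stored episode. Retrieval first shortlists memories by cosine similarity, then reranks them by utility; after a task, the utility of the memory that was used moves toward the observed reward. The embedding vectors are hand-written placeholders.

```python
import math


class EpisodicMemory:
    """Toy episodic store: similarity shortlists candidates, learned utility ranks them."""

    def __init__(self, lr: float = 0.5):
        self.items = []   # each item: {"key": vector, "text": str, "utility": float}
        self.lr = lr

    def add(self, key, text):
        self.items.append({"key": key, "text": text, "utility": 0.0})

    @staticmethod
    def _cosine(a, b):
        dot = sum(x * y for x, y in zip(a, b))
        norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
        return dot / norm if norm else 0.0

    def retrieve(self, query, shortlist=3, k=1):
        # Stage 1: semantic recall. Stage 2: rerank by how useful each memory has proven.
        by_sim = sorted(self.items, key=lambda m: self._cosine(query, m["key"]), reverse=True)
        return sorted(by_sim[:shortlist], key=lambda m: m["utility"], reverse=True)[:k]

    def reinforce(self, memory, reward):
        # Nudge the utility estimate toward the task reward; the LLM itself stays frozen.
        memory["utility"] += self.lr * (reward - memory["utility"])


memory = EpisodicMemory()
memory.add([1.0, 0.0], "retry the API with exponential backoff")
memory.add([0.9, 0.1], "switch to the cached mirror")
best = memory.retrieve([1.0, 0.0])[0]
memory.reinforce(best, reward=1.0)  # the task succeeded after using this memory
```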

LMArena Secures $150M to Standardize Agent Benchmarking

LMArena has secured $150M in funding at a $1.7B valuation, signaling massive investor confidence in the need for robust agent evaluation. Led by Felicis and UC Investments, the round aims to expand a crowdsourced platform that has become critical for comparing frontier models and efficient small language models (SLMs), as noted by @rohanpaul_ai. For agent builders, its head-to-head, human-voted rankings offer a shared reference point for judging which models hold up on real-world tasks.
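LMArena (formerly Chatbot Arena) has described ranking models by fitting a Bradley-Terry model to crowdsourced pairwise votes. The sketch below shows that kind of fit in plain Python using the classic iterative minorization-maximization update; the vote list and model names are made up, and the production pipeline (confidence intervals and other adjustments) is not reproduced.

```python
import math
from collections import defaultdict


def bradley_terry(votes, iters=200):
    """Fit Bradley-Terry strengths from (winner, loser) pairs with the MM update.

    Assumes every model has at least one win and one loss; otherwise the
    maximum-likelihood strengths do not exist (they run off to 0 or infinity).
    """
    wins = defaultdict(float)
    games = defaultdict(float)  # games[(i, j)]: number of comparisons between i and j
    for winner, loser in votes:
        wins[winner] += 1
        games[(winner, loser)] += 1
        games[(loser, winner)] += 1
    players = {i for i, _ in games}
    p = {m: 1.0 for m in players}
    for _ in range(iters):
        new = {}
        for i in players:
            denom = sum(n / (p[i] + p[j]) for (a, j), n in games.items() if a == i)
            new[i] = wins[i] / denom
        # Strengths are only defined up to scale; pin the geometric mean to 1.
        log_mean = sum(math.log(v) for v in new.values()) / len(new)
        p = {m: v / math.exp(log_mean) for m, v in new.items()}
    # Report on an Elo-like scale (400 points = 10x odds) for readability.
    return {m: 1000 + 400 * math.log10(v) for m, v in p.items()}


votes = [("model_a", "model_b")] * 3 + [("model_b", "model_a")]
print(bradley_terry(votes))
```

With model_a winning three of four votes, the fitted odds are 3:1, so the rating gap is 400·log10(3), about 191 points.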

The funding is a milestone, but the community remains divided on its impact. @rohanpaul_ai emphasizes how the platform's rankings drive competition, while others like @FTayAI wonder whether LMArena will pivot toward specialized agent tasks over general comparisons. Such a shift would align with current trends favoring tailored SLMs for business ROI over generic frontier model performance.

Adversarial Poetry and Data Leaks Threaten Agent Security

Research from Stanford reveals that even top-tier models like Claude 3.7 Sonnet can leak training data with a 95.8% match rate despite safety filters. As @rohanpaul_ai notes, this challenges the assumption that built-in safety layers are sufficient for secure applications. Furthermore, Mixture of Experts (MoE) models remain vulnerable to expert routing leaks that can expose user prompts during batched processing @rohanpaul_ai.

Beyond data leaks, new attack vectors like 'Adversarial Poetry' are emerging. @IntuitMachine discussed how simple linguistic structures like limericks can bypass safety mechanisms that expect complex code injections. Additionally, @rohanpaul_ai highlights that attackers are now hiding harmful requests within tool calls, necessitating a more adaptive security posture for anyone deploying production-grade agents.
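If attackers hide requests inside tool calls, model-proposed calls should be treated as untrusted input. Below is a minimal sketch, assuming hypothetical tools and a hypothetical sandbox directory, of an allowlist-plus-schema check that runs before any tool executes. It is a structural control rather than keyword filtering (which phrasing tricks like adversarial poetry are designed to evade), and it is not a complete defense on its own.

```python
from pathlib import Path

WORKSPACE = Path("/srv/agent-workspace")  # hypothetical sandbox root
ALLOWED_TOOLS = {
    # tool name -> {argument name: expected type}; both tools are hypothetical
    "search_docs": {"query": str, "limit": int},
    "read_file": {"path": str},
}


def _inside_workspace(path: str) -> bool:
    root = WORKSPACE.resolve()
    target = (root / path).resolve()   # absolute paths and ".." are normalized here
    return target == root or root in target.parents


def validate_tool_call(name: str, args: dict) -> list:
    """Return policy violations for a model-proposed call; an empty list means it may run."""
    schema = ALLOWED_TOOLS.get(name)
    if schema is None:
        return [f"tool '{name}' is not on the allowlist"]
    problems = []
    for key, value in args.items():
        if key not in schema:
            problems.append(f"unexpected argument '{key}'")
        elif not isinstance(value, schema[key]):
            problems.append(f"argument '{key}' should be {schema[key].__name__}")
    problems += [f"missing argument '{k}'" for k in sorted(set(schema) - set(args))]
    path = args.get("path")
    if name == "read_file" and isinstance(path, str) and not _inside_workspace(path):
        problems.append("path escapes the workspace")
    return problems


print(validate_tool_call("read_file", {"path": "../../etc/passwd"}))
```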

Quick Hits

Models for Agents

- Claude Opus 4.5 is being hailed as 'self-driving level 4 for code' by early adopters such as @beffjezos.

- DeepSeek-R1's technical paper expanded to 86 pages, detailing massive reasoning gains through safety RL @rohanpaul_ai.

Agentic Infrastructure

- vLLM integrated an AI-generated 'Oink' kernel delivering 40% speedups for RMSNorm operations @marksaroufim.

- A new load management platform has launched to handle high-frequency rate limiting specifically for agentic APIs @tom_doerr.
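The load management platform's internals are not described, but a token bucket is one standard way to shape bursty agent traffic. The sketch below uses an injectable clock so the behavior is easy to test; in practice each agent or API key would get its own bucket, and cost could be an estimated token count rather than one unit per request.

```python
import time


class TokenBucket:
    """Allow bursts up to `capacity`, refilling at `rate` tokens per second."""

    def __init__(self, rate: float, capacity: float, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.clock = clock          # injectable so tests can control time
        self.tokens = capacity      # start full
        self.last = clock()

    def try_acquire(self, cost: float = 1.0) -> bool:
        """Spend `cost` tokens if available; return False (caller should back off) otherwise."""
        now = self.clock()
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False


fake_now = [0.0]
bucket = TokenBucket(rate=2.0, capacity=2.0, clock=lambda: fake_now[0])
print([bucket.try_acquire() for _ in range(3)])  # [True, True, False]
fake_now[0] = 0.5                                 # half a second refills one token
print(bucket.try_acquire())                       # True
```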

Tool Use & Function Calling

- RoboPhD utilizes evolving 'cheat sheets' and feedback loops to master complex text-to-SQL tasks @rohanpaul_ai.

- Developers are combining Claude Code with Nano Banana for real-time image generation during live coding sessions @rileybrown.
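RoboPhD's own implementation is not shown in the post. As a rough sketch of the error-feedback idea, assuming a generator that reads an accumulated list of notes, the loop below runs each candidate query against SQLite, records any database error in a cheat sheet, and retries. Because the notes list is passed in and mutated, lessons persist across questions; the toy generator is a hypothetical stand-in for an LLM.

```python
import sqlite3


def run_with_feedback(conn, question, generate_sql, cheat_sheet, max_attempts=3):
    """Retry text-to-SQL, appending each database error to a cheat sheet the generator sees."""
    for _ in range(max_attempts):
        sql = generate_sql(question, cheat_sheet)
        try:
            return conn.execute(sql).fetchall()
        except sqlite3.Error as exc:
            cheat_sheet.append(f"{sql!r} failed: {exc}")
    raise RuntimeError(f"no working SQL after {max_attempts} attempts")


def toy_generator(question, notes):
    # Hypothetical stand-in for an LLM: it fixes the table name once a note mentions the error.
    if any("no such table: user" in n for n in notes):
        return "SELECT name FROM users"
    return "SELECT name FROM user"


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (name TEXT)")
conn.execute("INSERT INTO users VALUES ('ada')")
notes = []  # persists across questions, so lessons carry over
print(run_with_feedback(conn, "List all user names", toy_generator, notes))
print(notes)
```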

Multi-Agent Systems

- The Swarms framework is gaining traction for complex market research using specialized agent clusters @KyeGomezB.

- Agent Forge launched an 'Emerging Market Agent' to track real-time macro-economic activity @AITECHio.

The Front Page

From McKinsey’s massive agent fleet to 'handshake protocols,' the era of autonomous operational workers has officially arrived.

The Agentic Web is no longer a theoretical playground for developers; it is entering the phase of industrialization. Today’s lead story on McKinsey’s deployment of 25,000 AI agents (alongside 40,000 human employees, or about five agents for every eight people) marks a watershed moment for the 'Digital FTE' concept. This isn't just about scaling chatbots; it’s about a fundamental architectural shift toward multi-agent systems in which a central orchestrator coordinates specialized agents for intake, classification, and execution. As McKinsey CEO Bob Sternfels notes, this move signifies a transition from simple content generation to complex operational action at scale. But as we scale, the cracks in brittle, hardcoded systems are showing. We are seeing a move away from 'God Prompts' and static API definitions toward dynamic 'Handshake Protocols' and environment-aware standards like the Model Context Protocol (MCP). Whether it’s LG bringing massive MoE models to local inference or researchers distilling reasoning into 1B-parameter models like Shadows-Gemma, the goal is clear: reliability at the edge. We’re also seeing the rise of 'self-awareness' layers like Gnosis that detect hallucinations by monitoring internal states. For builders, the message is unmistakable: stop engineering the prompt and start engineering the system. Reliability, observability, and context-awareness are the new table stakes for autonomous agents.

McKinsey’s 25,000 AI Agents: Defining the Architecture of the Digital FTE r/AIAgentsInAction

McKinsey & Company CEO Bob Sternfels recently revealed that the firm has deployed 25,000 AI agents to work alongside its 40,000 human employees, a move that signifies a massive shift toward 'Digital FTEs' u/Deep_Structure2023. These autonomous workers are not mere chatbots but are built on a multi-agent architecture where specialized roles—such as intake, classification, and execution—are coordinated by a central orchestrator u/Intelligent-Pen4302. Industry leaders emphasize that these systems move away from 'monolithic models' in favor of agentic reasoning, which uses iterative loops to hit APIs and complete tasks autonomously @AndrewYNg.
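The intake, classification, and execution roles can be sketched as a simple pipeline. This is a hypothetical illustration, not McKinsey's architecture: a keyword rule stands in for an LLM classifier and the executors are stubs, but the control flow (one orchestrator calling specialized agents in sequence and routing by category) mirrors the pattern described above.

```python
from dataclasses import dataclass, field


@dataclass
class Ticket:
    text: str
    category: str = "unclassified"
    log: list = field(default_factory=list)


def intake(raw: str) -> Ticket:
    """Intake agent: normalize the request into a ticket."""
    return Ticket(text=raw.strip())


def classify(ticket: Ticket) -> Ticket:
    """Classification agent; a keyword rule stands in for an LLM call here."""
    ticket.category = "billing" if "invoice" in ticket.text.lower() else "research"
    ticket.log.append(f"classified as {ticket.category}")
    return ticket


EXECUTORS = {  # execution agents, keyed by the category they handle (hypothetical)
    "billing": lambda t: f"billing agent reconciled: {t.text}",
    "research": lambda t: f"research agent drafted a brief on: {t.text}",
}


def orchestrate(raw: str) -> str:
    """Central orchestrator: run intake, then classification, then the matching executor."""
    ticket = classify(intake(raw))
    result = EXECUTORS[ticket.category](ticket)
    ticket.log.append("executed")
    return result


print(orchestrate("  Invoice #4411 was charged twice "))
print(orchestrate("Summarize EU AI Act obligations for clients"))
```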

This architectural shift allows Digital FTEs to handle high-stakes workflows that were previously out of reach for standalone LLMs. Projections cited by u/Intelligent-Pen4302 suggested that 80% of organizations would implement similar end-to-end digital workforce shifts by late 2025, marking a transition from simple content generation to complex operational action u/Safe_Flounder_4690. McKinsey's implementation centers on 'Lilli,' an internal platform that aggregates knowledge across the firm to assist consultants in real time @McKinsey.

Handshake Protocols and Observability Redefine Agent Orchestration r/AgentsOfAI

Hardcoded agent-to-agent schemas are cited as the cause of 50% of swarm failures, because static definitions cannot adapt to evolving model outputs. In response, developers are adopting a 'Handshake Protocol' in which agents negotiate their own API interfaces and capabilities before task execution u/cloudairyhq. Because connections are no longer defined manually, this dynamic negotiation makes multi-agent systems less fragile. Industry observers like @AlphaSignalAI note that standardization via protocols like Anthropic's Model Context Protocol (MCP) is beginning to mirror these handshake requirements by providing a universal interface for agent-to-tool interactions.
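No concrete handshake specification is given in the post, so the following is a hypothetical minimal version: each agent advertises the protocol versions it speaks and the capabilities it offers with their argument schemas. The pair settles on the highest common version and only the capabilities whose schemas match exactly, and refuses to start when there is no overlap. MCP's initialize step performs a comparable version and capability negotiation between client and server.

```python
def _version_key(v: str) -> tuple:
    # Compare "1.10" > "1.2" numerically, not as strings.
    return tuple(int(part) for part in v.split("."))


def negotiate(offer: dict, accept: dict) -> dict:
    """Agree on a protocol version and a shared capability set before any task runs."""
    common = set(offer["versions"]) & set(accept["versions"])
    if not common:
        raise ValueError("no shared protocol version; refuse to start the task")
    shared = {
        name: schema
        for name, schema in offer["capabilities"].items()
        if accept["capabilities"].get(name) == schema  # same name AND identical schema
    }
    return {"version": max(common, key=_version_key), "capabilities": shared}


planner = {"versions": ["1.2", "1.10"],
           "capabilities": {"summarize": {"text": "string"},
                            "translate": {"text": "string", "lang": "string"}}}
writer = {"versions": ["1.0", "1.2", "1.10"],
          "capabilities": {"summarize": {"text": "string"},
                           "translate": {"text": "string"}}}
print(negotiate(planner, writer))
```

The translate capability is dropped because the two agents disagree on its arguments, which is exactly the kind of mismatch a hardcoded schema would only reveal at runtime.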

Simultaneously, agent observability is pivoting away from traditional Application Performance Monitoring (APM). Specialized tools like Maxim are introducing distributed tracing specifically for multi-turn conversations and reasoning chains u/dinkinflika0. This level of visibility is critical for autonomous workers that interact with external databases and payment triggers, where a single logic error can have financial consequences. However, standardizing 'Agent Skills' across heterogeneous platforms like GitHub, Claude, and Codex remains a significant hurdle; practitioners are currently advocating for a unified discovery path to prevent vendor lock-in and fragmented skill sets u/phoneixAdi.
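Distributed tracing for agents mostly comes down to nesting spans: a conversation contains turns, a turn contains reasoning steps and tool calls, and each span records its parent. The sketch below shows that structure with only the standard library; it is not Maxim's API, a production system would more likely emit spans through OpenTelemetry, and the span names and attributes are hypothetical.

```python
import contextvars
import time
import uuid
from contextlib import contextmanager

_current_span = contextvars.ContextVar("current_span", default=None)
SPANS = []  # finished spans, in completion order; a real tracer would export these


@contextmanager
def span(name: str, **attrs):
    """Open a span whose parent is whatever span is active in the current context."""
    parent = _current_span.get()
    record = {"id": uuid.uuid4().hex[:8], "parent": parent["id"] if parent else None,
              "name": name, "attrs": attrs, "start": time.perf_counter()}
    token = _current_span.set(record)
    try:
        yield record
    finally:
        record["duration_s"] = time.perf_counter() - record["start"]
        _current_span.reset(token)
        SPANS.append(record)


with span("conversation", session="demo"):
    with span("turn", index=1):
        with span("tool_call", tool="payments.lookup", amount_usd=42):
            pass  # the external call would happen here

for s in SPANS:
    print(s["name"], "parent:", s["parent"])
```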

LG's 236B MoE Lands in llama.cpp as Shadows-Gemma-3-1B Redefines Tiny Reasoning r/LocalLLaMA

LG AI Research has introduced K-EXAONE-236B-A23B, a Mixture-of-Experts (MoE) model featuring 236B total parameters and 23B active parameters, which has now been officially merged into llama.cpp u/jacek2023. Community benchmarks suggest the model performs competitively against industry standards like Mixtral 8x22B and DeepSeek-V2.5, specifically excelling in multilingual reasoning and code generation @_akhaliq. This release provides a high-scale open alternative for developers running heavy-duty local inference.
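With 23B of 236B parameters active, only about 9.7% of the weights do work for any given token; a router picks a few experts per token and skips the rest. The source does not give K-EXAONE's expert count or routing details, so this toy assumes four single-function "experts" and top-2 gating. Note that although only the selected experts run, all 236B parameters still have to be held in memory.

```python
import math


def top_k_moe(x, experts, gate_weights, k=2):
    """Route x to the k highest-scoring experts and mix their outputs.

    experts: callables standing in for expert feed-forward blocks.
    gate_weights: one vector per expert; the router score is dot(weights, x).
    Returns (output, indices of the experts that actually ran).
    """
    scores = [sum(w * xi for w, xi in zip(gw, x)) for gw in gate_weights]
    chosen = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:k]
    # Softmax over the chosen experts only; every other expert is skipped entirely.
    top = max(scores[i] for i in chosen)
    weights = {i: math.exp(scores[i] - top) for i in chosen}
    total = sum(weights.values())
    out = [0.0] * len(x)
    for i in chosen:
        y = experts[i](x)
        out = [o + (weights[i] / total) * yi for o, yi in zip(out, y)]
    return out, chosen


experts = [lambda v, s=s: [s * vi for vi in v] for s in (1.0, 2.0, 3.0, 4.0)]
gates = [[0.0, 0.0], [0.0, 0.0], [1.0, 0.0], [1.0, 0.0]]
print(top_k_moe([1.0, 2.0], experts, gates))
```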

On the smaller end, Shadows-Gemma-3-1B has emerged as a groundbreaking 'cold-start' reasoning model distilled from Gemma-3-4B-it u/Echo9Zulu-. Remarkably, the model was trained on just 1,569 samples in under 20 minutes on TPU v5e-8 hardware. Observers note that the project indicates logprob distillation can induce complex reasoning traces in tiny models without traditional RL or massive math-heavy datasets @arankomatsuzaki. This move toward efficient distillation suggests a new path for deploying reasoning capabilities on edge-compatible devices.
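Logprob distillation generally means training the student to match the teacher's per-token probability distribution rather than only its sampled text. The exact loss used for Shadows-Gemma is not described, so the sketch below assumes the common choice of forward KL(teacher || student), computed over the teacher's listed candidate tokens (as you would have if only top-k teacher logprobs were stored). Real training would do this with tensors and backpropagate through the student's logits.

```python
import math


def token_kl(teacher: dict, student: dict, floor: float = -30.0) -> float:
    """Forward KL(teacher || student) at one position, over the teacher's candidate tokens.

    Both dicts map token -> log-probability. Tokens the student did not list get a
    very low log-probability (floor) instead of -inf.
    """
    return sum(math.exp(t_lp) * (t_lp - student.get(tok, floor)) for tok, t_lp in teacher.items())


def distill_loss(teacher_seq: list, student_seq: list) -> float:
    """Mean per-token KL over a response: the quantity the student is trained to minimize."""
    return sum(token_kl(t, s) for t, s in zip(teacher_seq, student_seq)) / len(teacher_seq)


teacher = [{"Let": math.log(0.75), "So": math.log(0.25)}]
student = [{"Let": math.log(0.5), "So": math.log(0.5)}]
print(distill_loss(teacher, student))
```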

Bridging Context Blindness: The Rise of System-Aware Automation r/LocalLLaMA

Developers testing 'Computer Use' models for local automation report a consistent 'Terminal Trap': agents fail because they lack environment-specific context. As u/slow-fast-person notes, generic solutions break when confronted with non-standard shell environments. To address this, many builders are turning to the Model Context Protocol (MCP), an open standard that lets agents securely access local file systems and database schemas. As highlighted by @alexalbert__, MCP acts as the critical 'control layer,' ensuring models do not operate in a vacuum.

By injecting real-time metadata, such as active terminal paths and OS configurations, builders are moving past brittle RPA scripts toward context-aware systems. This matters for high-reliability tasks: a system-integrated agent can verify its environment before executing commands, significantly reducing failure rates in complex multi-step workflows.
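Here is a minimal sketch of that idea using only the standard library: gather environment facts, render them as a prompt section, and run a preflight check before executing a proposed command. This is not MCP itself (an MCP server could expose similar facts as a resource); the tool list and the "## Environment" section format are assumptions for illustration.

```python
import os
import platform
import shlex
import shutil


def environment_context(tools=("git", "docker", "python3")) -> dict:
    """Collect facts an agent should see before it proposes shell commands."""
    return {
        "os": platform.system(),
        "os_release": platform.release(),
        "cwd": os.getcwd(),
        "shell": os.environ.get("SHELL", "unknown"),
        "available_tools": {t: shutil.which(t) is not None for t in tools},
    }


def context_block(ctx: dict) -> str:
    """Render the facts as a prompt section to prepend to the agent's instructions."""
    return "## Environment\n" + "\n".join(f"{k}: {v}" for k, v in ctx.items())


def preflight(command: str) -> bool:
    """Verify the command's executable exists here before letting the agent run it."""
    parts = shlex.split(command)
    return bool(parts) and shutil.which(parts[0]) is not None


ctx = environment_context()
print(context_block(ctx))
print(preflight("definitely-not-installed-xyz --version"))
```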

Replayable Runtimes and Governance Layers for Reliable Agents r/AI_Agents

Managing failures in multi-step agent workflows remains a significant hurdle. AgentTrail has emerged as a specialized replayable runtime for tool calls, providing built-in idempotency and 'compensation' logic u/Wide-Anybody-978. By logging every tool outcome, the system prevents 'messy retries'—ensuring that retries only execute the specific step that failed. This approach brings the Saga Pattern to AI agents, allowing them to 'undo' previous actions if a sequence fails midway.
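The two mechanisms described, an idempotency log so replays and retries only touch unfinished steps and compensating actions for a Saga-style rollback, fit in a few lines. This is a hypothetical sketch of the pattern, not AgentTrail's API; the booking steps are placeholders for real tool calls.

```python
class Saga:
    """Run steps in order with an idempotency log and compensating actions.

    self.log records each finished step's result: replaying the saga skips logged
    steps, and only a failing step is retried. If a step keeps failing, every
    logged step is compensated (undone) newest-first and the error is re-raised.
    """

    def __init__(self):
        self.log = {}  # step key -> result of the completed action

    def run(self, steps, retries=1):
        """steps: list of (key, action, compensate) tuples."""
        for key, action, _ in steps:
            if key in self.log:
                continue  # already done in an earlier run: never re-execute
            for attempt in range(retries + 1):
                try:
                    self.log[key] = action()
                    break
                except Exception:
                    if attempt == retries:
                        self._rollback(steps)
                        raise
        return dict(self.log)

    def _rollback(self, steps):
        for key, _, compensate in reversed(steps):
            if key in self.log:
                compensate(self.log.pop(key))


events = []


def step(name, fail=False):
    def action():
        events.append(f"do {name}")
        if fail:
            raise RuntimeError(f"{name} failed")
        return name
    return (name, action, lambda result: events.append(f"undo {result}"))


try:
    Saga().run([step("book_flight"), step("book_hotel"), step("charge_card", fail=True)])
except RuntimeError as err:
    print(err, events)
```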

Simultaneously, TensorWall is gaining traction as a control layer for LLM APIs, offering budget management, auditing, and protection against prompt injection u/ApartmentHappy9030. Operating as a drop-in replacement for standard endpoints, it provides a policy engine for fine-grained access control. Industry observers argue that these infrastructure layers—combining execution reliability with strict governance—are the essential backbone required for agents to handle high-stakes operations in sectors like banking or healthcare, where deterministic reliability is critical u/ParsleyFeeling3911.

8,000+ n8n Workflows and v2 Architecture Shift Drive Agentic Automation r/n8n

A massive repository of over 8,000 n8n workflows has been released via GitHub and the new search portal n8n-workflows.xyz, significantly lowering the barrier for developers building agentic automations u/nusquama. This collection addresses the 'blank canvas' problem by providing searchable, production-ready templates for various enterprise use cases.

Technically, the transition to n8n v2 introduces a critical architectural shift toward external runners for system binaries. Community experts advise against using internal runners for compute-intensive tasks such as ffmpeg and Whisper, citing reliability issues in standard container environments u/Proper_DEVIL. By utilizing external runners in Docker, developers can ensure process persistence. Furthermore, the integration of MCP within tools like OpenWebUI is streamlining how LLMs interact with these workflows, effectively turning n8n into an execution engine for AI agents u/SeeGee911.

Gnosis: A 5M-Parameter 'Self-Awareness' Layer Outperforms Gemini 2.5 Pro in Hallucination Detection r/automation

Researchers at the University of Alberta have introduced Gnosis, a lightweight 5-million parameter 'self-awareness' probe that significantly improves hallucination detection by monitoring an LLM's internal hidden states u/Positive-Motor-5275. Unlike traditional reward models, Gnosis analyzes attention patterns and 'compression strain' during generation to predict errors before they are finalized u/Necessary-Dot-8101.

The mechanism demonstrates remarkable efficiency, reportedly outperforming 8-billion parameter reward models and even frontier models like Gemini 2.5 Pro in judging factual accuracy. For agent developers, this provides a low-latency 'self-correction' layer, allowing autonomous workflows to trigger fail-safes when internal uncertainty exceeds a threshold. Experts suggest this 'Compression-Aware Intelligence' (CAI) framework could lead to a new generation of agents that can 'stop and think' before triggering critical external actions.

The Death of the 800-Line God Prompt: The Rise of Iterative Density r/PromptEngineering

The 'Chain of Density' (CoD) prompt is redefining summarization efficiency by prioritizing information density over length. Developed by researchers at Salesforce, MIT, and Columbia, the method employs a 5-step iterative cycle to integrate 'missing entities' while keeping outputs under 100 words u/Complex-Ice8820. Benchmarks show that while CoD summaries are more informative than standard GPT-4 outputs, there is a 'density ceiling' where readability suffers @G_R_I_F_F_I_N.
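The iterative cycle can be sketched as a loop that feeds each summary back in and asks for 1-3 missing entities within a fixed word budget. The prompt wording below paraphrases the technique as summarized here and is not the paper's exact prompt; llm is a hypothetical callable that maps a prompt string to text. Returning every version lets a reader or judge pick the step before the 'density ceiling' hurts readability.

```python
def chain_of_density(article: str, llm, steps: int = 5, max_words: int = 100) -> list:
    """Iteratively densify a summary and return every version for later selection.

    llm is any callable mapping a prompt string to generated text (hypothetical).
    """
    versions, summary = [], ""
    for _ in range(steps):
        prompt = (
            f"Article:\n{article}\n\n"
            f"Current summary:\n{summary or '(none yet)'}\n\n"
            "Identify 1-3 informative entities from the article that are missing from "
            f"the current summary. Rewrite the summary to include them in at most "
            f"{max_words} words, keeping every entity already present."
        )
        words = llm(prompt).split()
        summary = " ".join(words[:max_words])  # crude hard cap if the model overshoots
        versions.append(summary)
    return versions
```

The hard cap truncates mid-sentence; a production version would more likely re-prompt the model when it exceeds the budget.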

Meanwhile, the community is moving away from 'God Prompts'—massive monolithic instruction sets—as models suffer from 'instruction fatigue' u/qumukoqa6092. This has shifted focus toward 'Reverse Prompt Engineering,' where developers use LLMs to deconstruct high-quality outputs to extract underlying system roles u/Complex-Ice8820. This forensic approach allows builders to replicate complex agent behaviors without the fragility of over-engineered, thousand-line prompts.

The Dev Den

Anthropic's infrastructure strain meets Ollama's local expansion and Cursor's autonomous loops.

Today’s agentic landscape is defined by a growing gap between frontier model capability and infrastructure reliability. While Anthropic’s Claude 3.7 and 4.5 tiers represent a massive leap in reasoning and coding ability, the developer experience is currently marred by persistent 529 errors and billing inconsistencies. This friction is driving a renewed focus on local execution and autonomous loops. Ollama’s v0.14.0 release, which introduces experimental image generation and signals a move toward becoming a centralized MCP client, highlights a future where the local runner is the hub of the agentic stack. Meanwhile, the developer workflow is shifting from manual prompts to autonomous 'loop until pass' patterns in Cursor. As we navigate these growing pains, the takeaway for builders is clear: reliability is the new benchmark. Whether it's through server-side caching in the Model Context Protocol (MCP) or hardening Docker networking for local agents, the industry's focus has moved from what the model can say to what the system can reliably do. We are moving beyond the 'vibe-check' era into a phase of hardened, autonomous production systems.

Claude Sonnet 4.5 and Claude Code Face Reliability Hurdles

Anthropic's rollout of Claude 3.7 Sonnet and the experimental 4.5 tier has been met with significant infrastructure strain, evidenced by frequent 529 Overloaded errors reported by developers like nowahe. These stability issues have directly impacted the Claude Code CLI, where users like @xentoshis report that usage credits are sometimes deducted even when the model fails to generate a response. Further complicating the launch, a widespread OAuth misconfiguration temporarily prevented new logins, with developers noting that authorization endpoints appeared to have vanished during peak traffic windows.

Usage limits for the new Max plan have also sparked confusion among power users. While the plan highlights a $2,000 daily usage cap, practitioners have found that specific model-level rate limits still trigger throttles, leading to unexpected downtime during high-intensity coding sessions blindestgoose. Consequently, some engineers are pivoting to Grok-3 or GPT-4o for large-file parsing when Claude's context handling becomes inconsistent or 'vibe-coded' z_malloc_66849. Anthropic's official status page has acknowledged 'elevated error rates,' though users continue to seek clarity on credit refunds for failed CLI operations.

Join the discussion: discord.gg/anthropic

Ollama v0.14.0 Debuts Experimental Image Gen and MCP Integration

Ollama has officially released version v0.14.0, introducing highly anticipated experimental image generation capabilities powered by MLX specifically for macOS users maternion. This update allows for the local execution of diffusion models, with developers noting that while it currently leverages Apple Silicon's unified memory, future support for ROCm and Vulkan is being tracked to bring these features to Linux and Windows. Early testing indicates that while image generation is functional, users are still benchmarking whether the current implementation will fully utilize next-gen hardware like the 5070 Ti or remain optimized for mobile-first unified architectures.

Parallel to the image generation rollout, a significant architectural shift is underway via a pull request to transform Ollama into an MCP client pwnosaurusrex. This integration would enable Ollama to orchestrate multiple Model Context Protocol servers, effectively turning the local runner into a centralized automation hub. Despite these advancements, users like tnorlund emphasize that structured JSON outputs remain more reliable on local instances than cloud-hosted variants due to current schema enforcement limitations.

Join the discussion: discord.gg/ollama

Cursor Workflows Shift Toward Autonomous 'Loop Until Pass' Execution

The developer experience in Cursor is rapidly evolving toward autonomous agent loops that minimize human intervention. vinniefalco argues for a workflow where the agent acts independently—building the program, fixing compilation errors, and running tests until they pass—rather than requiring manual command entry for every step. This 'loop until pass' pattern is becoming a standard for high-velocity development, supported by community-driven scripts like cursor-tools and wrappers that automate the terminal's 'fix and retry' cycle. Industry experts like @swyx have noted that reducing the 'human-in-the-loop' bottleneck can lead to significant gains in developer throughput.
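A minimal sketch of the 'loop until pass' pattern, assuming the test suite is invoked as an external command and that attempt_fix is a hypothetical hook handing the failure output to a coding agent. The iteration cap keeps a stuck agent from looping forever and marks the point where a human should step in.

```python
import subprocess


def loop_until_pass(test_cmd: list, attempt_fix, max_iters: int = 5) -> int:
    """Run the tests; on failure pass the output to attempt_fix and try again.

    Returns how many test runs it took to go green. attempt_fix is a hypothetical
    hook, e.g. a call into a coding agent that edits files based on the failure log.
    """
    for run in range(1, max_iters + 1):
        proc = subprocess.run(test_cmd, capture_output=True, text=True)
        if proc.returncode == 0:
            return run
        attempt_fix(proc.stdout + proc.stderr)
    raise RuntimeError(f"tests still failing after {max_iters} runs; escalate to a human")
```

A call such as loop_until_pass(["pytest", "-q"], agent_fix) would rerun the suite after each fix attempt, with agent_fix standing in for whatever agent integration you use.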

Prompt engineering for these agents is also undergoing a paradigm shift. nightstars. and @karpathy suggest that the 'you are an expert' persona is now largely ineffective for modern frontier models. Instead, these agents respond better to small, technical, and highly specific tasks without the need for complex XML tagging or roleplay. This shift toward technical precision over narrative instruction ensures the agent doesn't lose context in large projects, a sentiment echoed by @amanrs who emphasizes the importance of providing 'clean context' over verbose prompts to maintain 90%+ accuracy in multi-file edits.

Join the discussion: discord.gg/cursor

Optimizing MCP: Server-Side Caching and the Rise of Universal Protocols

The Model Context Protocol (MCP) community is increasingly focused on caching tool results to reduce latency and token costs. The core MCP specification does not define caching of tool results, but middleware like FastMCP is reported to add server-side caching; the example shared is a decorator along the lines of @mcp.tool(cache_seconds=3600) that prevents redundant execution of expensive operations. This addresses a critical friction point for agents performing repetitive database lookups or weather queries.
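Whatever the exact FastMCP syntax, the underlying mechanism is a time-to-live cache keyed on the tool's arguments. The decorator below is a generic sketch of that mechanism, not FastMCP's API; get_weather is a hypothetical tool, and the injectable clock exists only to make expiry testable.

```python
import functools
import time


def ttl_cache(seconds: float, clock=time.monotonic):
    """Memoize results per argument tuple for `seconds`. Illustrative; not FastMCP's API."""
    def decorator(fn):
        store = {}  # (args, kwargs) -> (timestamp, result); arguments must be hashable

        @functools.wraps(fn)
        def wrapper(*args, **kwargs):
            key = (args, tuple(sorted(kwargs.items())))
            now = clock()
            hit = store.get(key)
            if hit is not None and now - hit[0] < seconds:
                return hit[1]              # fresh cached result: skip the expensive call
            result = fn(*args, **kwargs)
            store[key] = (now, result)
            return result
        return wrapper
    return decorator


calls = []
fake_now = [0.0]


@ttl_cache(3600, clock=lambda: fake_now[0])
def get_weather(city: str) -> str:
    calls.append(city)                     # stands in for a slow upstream API request
    return f"forecast for {city}"
```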

Architectural clarity remains essential for new builders: the MCP Host (like Claude Desktop) initiates the lifecycle, each MCP Client maintains a 1:1 connection with a single server, and the MCP Server provides specific tools or resources. However, the ecosystem is also seeing a push toward lighter-weight standards. UTCP (Universal Tool Calling Protocol) has emerged as an alternative designed to be simpler than MCP and easier to implement across diverse LLM providers without vendor-specific overhead utcp.io. While MCP offers a robust framework for complex context sharing, UTCP targets developers prioritizing rapid, cross-platform tool integration.
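Concretely, the client inside a host speaks JSON-RPC 2.0 to a server over a transport such as stdio. The sketch below only builds the messages a client sends at the start of a session (initialize, the initialized notification, tools/list, then tools/call); transport and response handling are omitted, the get_weather tool is hypothetical, and the protocol version string should be checked against the spec revision your server supports.

```python
import itertools
import json
from typing import Optional

_ids = itertools.count(1)


def request(method: str, params: Optional[dict] = None) -> dict:
    """Build a JSON-RPC 2.0 request; the id lets the client match the server's response."""
    msg = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        msg["params"] = params
    return msg


def notification(method: str) -> dict:
    """Notifications carry no id and expect no response."""
    return {"jsonrpc": "2.0", "method": method}


session = [
    request("initialize", {
        "protocolVersion": "2025-06-18",
        "capabilities": {},
        "clientInfo": {"name": "example-client", "version": "0.1.0"},
    }),
    notification("notifications/initialized"),
    request("tools/list"),
    request("tools/call", {"name": "get_weather", "arguments": {"city": "Oslo"}}),
]
for msg in session:
    print(json.dumps(msg))
```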

Bridging the Infrastructure Gap: Docker Networking and New Model Weights

A common hurdle for agent developers is connecting workflow tools like n8n to local inference engines like Ollama. As noted by theunknownmuncher, 'localhost' inside a container refers to the container itself, so the container must reach the host as host.docker.internal instead. On Linux Docker Engine that name is not defined by default: it has to be mapped with the --add-host=host.docker.internal:host-gateway flag for docker run, or the equivalent extra_hosts entry in Docker Compose. Beyond connectivity, developers are optimizing persistence via n8n Data Tables, allowing agents to store and retrieve variables without the complexity of external databases .joff.

On the model front, GLM-Image is challenging Stable Diffusion 3 in text-rendering and spatial prompts, while the Unsloth team has released a critical GGUF update for MedGemma 1.5-4b to resolve prompt template issues @unsloth. For high-parameter local workflows, MiniMax-M2.1 has emerged as a viable open alternative for reasoning-heavy tasks, achieving 3.6 tok/sec on Q4 quantizations.

Join the discussion: discord.gg/n8n

The Open Hub

Hugging Face’s smolagents proves that Python scripts, not JSON payloads, are the secret to cracking complex reasoning.

Today we are witnessing a fundamental shift in how agents think and act. For a year, we’ve relied on 'tool calling' via structured JSON—a method that often feels like trying to perform surgery with oven mitts. It’s rigid, error-prone, and struggles with logic. But the release of smolagents and its massive performance leap on the GAIA benchmark suggest that 'Code-as-Action' is the superior path. By allowing agents to write and execute Python, we give them the loops and logic they need to solve multi-step problems that leave traditional models hallucinating. This isn't just a win for Hugging Face; it’s a signal for the entire ecosystem. From NVIDIA’s Cosmos Reason 2 bringing spatial logic to robotics, to H Company’s ScreenEnv moving agents into full-stack desktop automation, the trend is clear: agents are moving away from being chatbots and toward becoming autonomous operators. Whether it is the 405B Hermes 3 or a 'smol' model optimized for Apple Silicon, the goal is now real-world utility over mere conversation. Let's dive into the frameworks and models making this possible.

Hugging Face Advances 'Code-as-Action' with smolagents and GAIA Success

The era of rigid JSON tool-calling is fading. Hugging Face’s smolagents just threw down the gauntlet with a Code-as-Action approach. Instead of outputting structured data and hoping middleware parses it correctly, agents generate and execute Python scripts. The results speak for themselves: a 39.4% score on the rigorous GAIA benchmark, a new state of the art for agents built on open-source models such as Llama-3-70B Hugging Face.
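To make the contrast concrete, here is a minimal sketch of the code-as-action idea, assuming a hypothetical get_price tool: the model emits one Python snippet that calls tools directly and combines their results, instead of one JSON call per step with the arithmetic left to another round trip. This is not smolagents' executor, which documents its own restricted interpreter and sandboxing options; the exec() call here is for illustration only and is not a security boundary.

```python
SAFE_BUILTINS = {"len": len, "range": range, "sum": sum, "min": min, "max": max}


def run_code_action(code: str, tools: dict) -> dict:
    """Execute one model-written code action with only the given tools and builtins in scope.

    WARNING: exec() with trimmed builtins is NOT a sandbox; untrusted code needs real isolation.
    """
    namespace = {"__builtins__": SAFE_BUILTINS, **tools}
    exec(code, namespace)
    # Return the variables the action defined, so the agent can observe its own results.
    return {k: v for k, v in namespace.items() if k not in tools and k != "__builtins__"}


PRICES = {"gpu": 1500, "ram": 300}  # hypothetical data behind the tool
tools = {"get_price": lambda item: PRICES[item]}

# One code action does what would take three JSON tool calls plus arithmetic in between.
action = """
cart = ["gpu", "ram", "ram"]
total = sum(get_price(item) for item in cart)
"""
print(run_code_action(action, tools))
```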

This isn't just about raw power; it’s about visibility. By partnering with Arize Phoenix, Hugging Face is giving developers a front-row seat to the agent's brain, using OpenInference tracing to visualize every logic loop in real-time. With new multi-modal support via smolagents-can-see, these agents aren't just reading code; they’re interpreting visual inputs to solve spatial tasks autonomously.

Vision Models Power Full-Stack Desktop Automation

While browser-based agents are common, H Company is pushing the boundary into native desktop interaction. Their ScreenEnv environment and the Holo1 VLM family represent a leap toward full-stack automation. Industry observers like @_akhaliq have already highlighted Holo1’s dominance on complex GUI benchmarks, where it uses visual feedback loops to correct errors in real-time.

To address the lack of standardization, huggingface introduced ScreenSuite, a unified testing ground for desktop reliability. We’re also seeing specialized techniques like smol2operator that squeeze high-end GUI performance out of smaller models, proving that you don’t need massive compute overhead to navigate complex software interfaces.

NVIDIA and AMD Accelerate Real-Time Physical AI and Robotics

NVIDIA is taking the 'reasoning' trend to the physical world with Cosmos Reason 2, a vision-language model designed specifically for physical reasoning and world modeling that enables robots to predict state transitions with low-latency inference. When paired with hardware like the Reachy Mini and NVIDIA DGX Spark, it supports high-frequency control loops that make robotics feel less like a series of commands and more like fluid interaction.

AMD is also mobilizing builders through the AMD Open Robotics Hackathon, encouraging the use of the ROCm™ open software stack for agentic workflows. Meanwhile, Pollen Robotics is standardizing the vision layer with Pollen-Vision, a unified interface that allows developers to integrate zero-shot models like SAM 2 directly into robotic pipelines without custom retraining.

Open Deep Research and the Rise of MCP-Powered Smolagents

Autonomous research is getting a transparent makeover with Open-source DeepResearch. Built on the smolagents framework, it replaces the 'black box' of proprietary search agents with a modular system using tools like DuckDuckGoSearchTool and VisitWebpageTool to process raw HTML into clean markdown.

On the connectivity side, the Model Context Protocol (MCP) is becoming the glue of the agentic web. Hugging Face has shown that you can build functional MCP agents in under 70 lines of code. This simplicity, combined with the Unified Tool Use initiative, is finally abstracting away the complexities of different provider APIs. Even JavaScript developers are getting in on the action with Agents.js, ensuring these tool-calling standards reach beyond the Python community.

Advancing Agentic Evaluation: From NP-Hard Reasoning to Future Prediction

Measuring agentic intelligence is shifting from static Q&A to complex reasoning. The NPHardEval Leaderboard pushes LLMs across computational complexity classes, where open-source models like Llama-3-70B and Qwen2.5-72B are closing the gap on proprietary leaders. This is complemented by FutureBench, which tests an agent's ability to extrapolate future events—a critical skill for proactive decision-making.

For specialized domains, DABStep provides a framework for multi-step reasoning in data tasks, while the release of GAIA2 emphasizes real-world web navigation. As noted in the NPHardEval research paper, these dynamic updates are essential to prevent data contamination and ensure the reliability of autonomous systems in high-stakes environments.

Hermes 3 and MiniMax-M2: Advancing Agentic Intelligence

Nous Research has unveiled Hermes 3, a flagship series spanning up to 405B parameters. This model is specifically fine-tuned for the 'multi-turn' marathons that define agentic workflows, achieving SOTA performance by leveraging a dataset rich in synthetic reasoning traces.

Complementing this, MiniMax-M2 explores how models adapt to unseen tools, a concept known as 'agent generalization.' For local deployment, the MiniMax-M2.1-REAP-30 quantization provides an optimized MXFP4 implementation for Apple Silicon, bringing high-end function-calling to the edge.

Generalist Transformers and Elo-Based Rankings Drive Multi-Agent Competitions

Multi-agent systems are evolving through advanced reinforcement learning. Hugging Face’s AI vs. AI framework uses an Elo rating system to rank agents that learn through self-play and competitive interaction. This ecosystem is bolstered by the Jack of All Trades (JAT) agent, a generalist model trained on 157 diverse tasks, from Atari to MuJoCo.
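The framework's K-factor and starting rating are not given here, so the sketch below uses the textbook Elo update with a common but arbitrary K of 32 and a 400-point scale, with hypothetical agent names. Each match moves both ratings by the same amount in opposite directions, and an upset against a stronger opponent moves them more than an expected win.

```python
def expected_score(r_a: float, r_b: float) -> float:
    """Expected score of A against B under the Elo model (between 0 and 1)."""
    return 1.0 / (1.0 + 10 ** ((r_b - r_a) / 400))


def elo_update(r_a: float, r_b: float, score_a: float, k: float = 32) -> tuple:
    """score_a is 1 for an A win, 0.5 for a draw, 0 for a loss; returns new (r_a, r_b)."""
    delta = k * (score_a - expected_score(r_a, r_b))
    return r_a + delta, r_b - delta


ratings = {"agent_blue": 1200.0, "agent_red": 1200.0}
for winner, loser in [("agent_blue", "agent_red"), ("agent_blue", "agent_red")]:
    ratings[winner], ratings[loser] = elo_update(ratings[winner], ratings[loser], 1.0)
print(ratings)
```

Because ratings update after every match, the final numbers depend on match order, which matters when comparing agents trained through long self-play runs.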

To stress-test these capabilities, developers are using environments like Snowball Fight, a 2v2 simulation where agents must master both individual mechanics and collaborative strategies. These developments signify a major trend toward agents that can generalize across tasks while maintaining high-level performance in shared, complex environments.

Community Showcases: The Rise of 'smolagents' and the Hugging Face Agents Course

The barrier to entry for building agents has never been lower. The Hugging Face Agents Course and its First_agent_template have sparked a wave of community builds, with the template already achieving over 650 likes.

From nolanzandi's virtual-data-analyst to the SmolNews summarizer, developers are proving that the combination of smolagents and modular search tools is the fastest path from idea to autonomous prototype. Other innovative implementations like AlfredAgent and osw-studio demonstrate the library's versatility in personal assistance and workflow automation.